At first glance, comparing MCP and APIs might seem like comparing two names for the same concept, but they serve very different roles.

MCP is a standardized protocol through which LLMs retrieve external context (such as databases or docs) and use tools (for example, search or calendars) in a consistent, machine-friendly way.

APIs, by contrast, are general-purpose interfaces that let software systems communicate with each other. Below, I’ll cover the key differences, the similarities, and how MCP and APIs can work together.

- What is an MCP?

- What is an API?

- Key Differences Between MCP vs APIs

- How do MCP and APIs Work Together?

- FAQs about MCP vs API

What is an MCP?

Essentially, MCP (Model Context Protocol) is an open protocol that acts as a middle layer between AI systems (e.g., Claude or GPT) and external services like databases, files, APIs, or real-time applications like calendars and search engines.

Its main goal is to give AI agents access to reliable, structured context so they can generate more accurate and relevant responses.

The MCP architecture has three important components:

- The Host – The AI application (such as a chat assistant or IDE) that uses MCP to invoke external tools and data.

- MCP Client – The component inside the host that maintains a connection to an MCP server and sends it requests for tools or data.

- MCP Server – The layer that executes those requests, typically a gateway to external services like APIs, file systems, or business applications.

Note that the same acronym is also used for Managed Cloud Platforms. In 2026, many of these have evolved into full AI orchestration platforms. They offer hosted compute and scaling, plus native support for workflows, agents, retrievers, vector databases, observability, and policy-based governance under a single control plane. Those platforms are a different thing. In this article, MCP always means the Model Context Protocol.

Now, if you’re reading this, you probably already know what an API is, but here is a quick refresher for good measure.

What is an API?

An API (application programming interface) is a set of rules and protocols that software programs use to communicate with each other. It defines endpoints (like /users/123 or /weather/today) through which one system can retrieve data from, or trigger actions in, another.

Now it sounds like they’re the same thing. After all, both let one system communicate with another. Not quite, as the differences below show.

In 2026, APIs serve as modular, composable building blocks for larger systems. They are not just endpoints that return text or predictions. They are embedded in agents, event-driven workflows, retrieval-augmented generation (RAG) pipelines backed by vector search, and the real-time decisioning systems behind modern applications.

What are the Key Differences Between MCP vs APIs?

APIs have been the standard for software systems to communicate with each other for years now. They achieve this by transferring information between apps, triggering actions, or integrating external services.

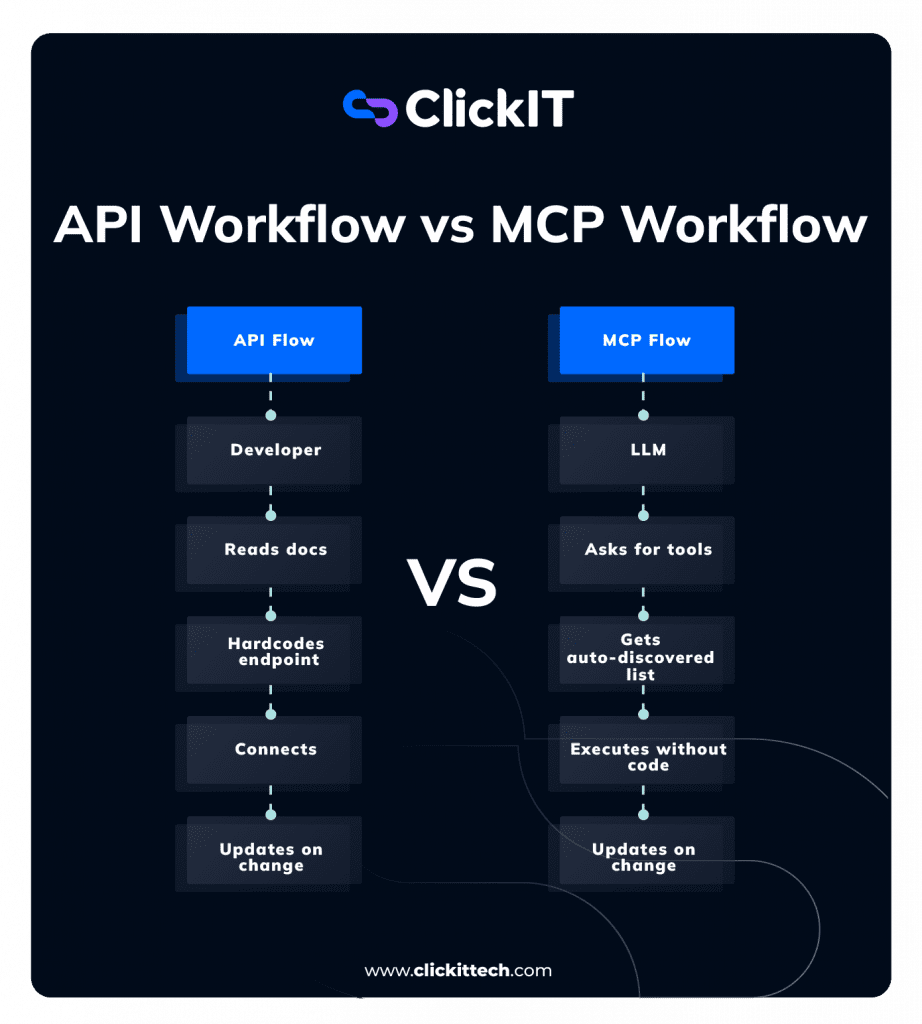

APIs work great when developers manually integrate them, but LLMs need a way to find and use tools dynamically without pre-coded logic. To solve this problem, Anthropic introduced the Model Context Protocol (MCP), made specifically for AI agents. This has led to the MCP vs API debate.

MCP vs API: Purpose and Design

APIs are designed to let software applications communicate with each other. They define how requests are made, what responses look like, and how to authenticate users or systems.

MCPs are purpose-built for LLM-based applications. They’re meant to help AI agents retrieve external context (like database records or documents), use tools (like calendars, search engines, or code execution), and follow a consistent pattern for interaction.

You could say APIs are built for developers and MCPs are designed for machines that reason in natural language. That changes how they’re structured and used.


MCP vs API: Discovery of Capabilities

MCP supports dynamic discovery. An AI agent can ask an MCP server what it offers, through methods such as tools/list, resources/list, and prompts/list. The server returns a structured list of tools, resources, and prompt templates. This matters in AI workflows because agents need to adapt to whatever is available without hardcoded logic.
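To make discovery concrete, here is a minimal sketch at the message level. It uses only Python’s standard library, not an MCP SDK. It assumes the JSON-RPC 2.0 message shapes from the MCP specification: tools/list returns a tools array, and each entry carries name, description, and inputSchema. The search_docs tool in the sample response is hypothetical.

```python
from __future__ import annotations

import json

# Minimal sketch of MCP tool discovery at the message level (no SDK).
# MCP messages are JSON-RPC 2.0; "tools/list" asks a server what tools it offers.


def make_request(request_id: int, method: str, params: dict | None = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    msg = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Hypothetical server reply: one tool, described by a JSON Schema for its arguments.
SAMPLE_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "search_docs",  # hypothetical tool name
                "description": "Full-text search over internal documentation",
                "inputSchema": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            }
        ]
    },
}


def discovered_tools(response: dict) -> dict[str, dict]:
    """Map each advertised tool name to the schema describing its arguments."""
    return {tool["name"]: tool["inputSchema"] for tool in response["result"]["tools"]}


request = make_request(1, "tools/list")
print(json.dumps(request))  # the message a client would send to the server
tools = discovered_tools(SAMPLE_RESPONSE)
print(sorted(tools))  # ['search_docs']
```

Nothing about search_docs is hardcoded in the client. The agent learns the tool’s name and argument schema from the server’s reply.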

APIs don’t offer this natively. With a REST API, the client must be programmed in advance to know the endpoints, request format, and capabilities.

As a developer, that means I have to explore the API docs, learn the available endpoints, and hardcode what my app can do. I also have to update that code manually whenever the API changes.
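For contrast, here is a minimal sketch of a conventional REST client. It assumes a hypothetical API at api.example.com that offers the /users/{id} and /weather/today endpoints mentioned earlier. The requests are only built, not sent, so the sketch runs offline.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Minimal sketch of a conventional REST client for a hypothetical API.
# The base URL, paths, query parameters, and auth scheme are all hardcoded,
# copied by hand from the API's documentation.
BASE_URL = "https://api.example.com"


def _auth_headers(api_key: str) -> dict:
    # Assumes bearer-token auth; another API might want a query-string key instead.
    return {"Authorization": f"Bearer {api_key}"}


def get_user(user_id: int, api_key: str) -> Request:
    return Request(f"{BASE_URL}/users/{user_id}", headers=_auth_headers(api_key))


def get_weather_today(city: str, api_key: str) -> Request:
    query = urlencode({"city": city})  # parameter name assumed from the docs
    return Request(f"{BASE_URL}/weather/today?{query}", headers=_auth_headers(api_key))


req = get_user(123, "demo-key")
print(req.get_method(), req.full_url)  # GET https://api.example.com/users/123
```

Suppose the provider renames /users to /accounts or adds a required parameter. Each of these functions then has to be edited and redeployed by hand.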

MCP vs API: Standardization and Uniformity

One of MCP’s strengths is its consistent interface. Every MCP server follows the same protocol, whatever service it connects to: GitHub, Spotify, Docker, a private database, or Google Maps. That means the same model can call different tools using the same request format.
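Here is a minimal sketch of that uniformity. It assumes the tools/call request shape from the MCP specification: a tool name plus an arguments object. The tool names and arguments for the GitHub-style and maps-style servers are hypothetical. The point is that only the data changes, never the envelope.

```python
import json

# Minimal sketch: every MCP tool invocation uses the same JSON-RPC envelope,
# whichever server handles it (a GitHub server, a maps server, a private database).


def call_tool(request_id: int, name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request for any tool on any server."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": request_id,
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        }
    )


# Hypothetical tools on two different servers, called the same way.
github_msg = call_tool(1, "list_issues", {"repo": "octo/demo", "state": "open"})
maps_msg = call_tool(2, "geocode", {"address": "1600 Amphitheatre Parkway"})
```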

APIs, by contrast, are usually inconsistent with one another. Each API has its own:

- endpoints (e.g., /books/123, /users/me, /products/search)
- HTTP methods (e.g., GET, POST, PUT, DELETE)
- authentication scheme (e.g., OAuth tokens for Google APIs, API keys for OpenWeatherMap, HMAC-signed webhooks in Stripe)
- error format (e.g., a 404 with {"error": "Not Found"} in one API vs. a 500 with {"message": "Server Error"} in another)
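Here is a minimal sketch of the per-API glue this forces on a client, using the two error bodies above. The adapter functions and the api_a/api_b labels are hypothetical. A real client would need similar adapters for authentication and pagination as well.

```python
from dataclasses import dataclass

# Minimal sketch of per-API adapters that normalize inconsistent error payloads.
# "api_a" and "api_b" stand in for two hypothetical APIs with different formats.


@dataclass
class NormalizedError:
    status: int
    message: str


def from_api_a(status: int, body: dict) -> NormalizedError:
    # API A reports errors as {"error": "..."}.
    return NormalizedError(status, body.get("error", "unknown error"))


def from_api_b(status: int, body: dict) -> NormalizedError:
    # API B reports errors as {"message": "..."}.
    return NormalizedError(status, body.get("message", "unknown error"))


# One entry per integrated API; each new API means another hand-written adapter.
ADAPTERS = {"api_a": from_api_a, "api_b": from_api_b}


def normalize(source: str, status: int, body: dict) -> NormalizedError:
    return ADAPTERS[source](status, body)


print(normalize("api_a", 404, {"error": "Not Found"}))
# NormalizedError(status=404, message='Not Found')
```

An MCP server does this kind of translation once, on the server side, so the agent only ever sees one format.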

Because of this lack of standardization, it’s hard for AI agents to use multiple APIs without custom adapters for each one.

MCP vs API: Adaptability and Evolution

MCP is adaptive by design. AI agents work in real time and respond to context. MCP lets them discover new tools at runtime and read each tool’s input schema, instead of relying on hardcoded data structures. If a server adds a new tool, it can send a notifications/tools/list_changed message. The agent then picks up the tool the next time it lists the server’s tools, with no changes to client code.
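Here is a minimal sketch of how a client could keep its tool list current. It assumes the MCP behavior where a server that declared the tools.listChanged capability sends a notifications/tools/list_changed notification, and the client responds by calling tools/list again. The ToolCache class and its fetch_tools callback are hypothetical stand-ins for a real client’s transport.

```python
from __future__ import annotations

from typing import Callable

# Minimal sketch: refresh a cached tool list when the server says it changed.
# fetch_tools is a hypothetical callback that performs a tools/list round trip
# and returns the "tools" array from the result.


class ToolCache:
    def __init__(self, fetch_tools: Callable[[], list[dict]]):
        self._fetch_tools = fetch_tools
        self.tools = self._load()

    def _load(self) -> dict[str, dict]:
        return {tool["name"]: tool for tool in self._fetch_tools()}

    def handle_message(self, msg: dict) -> None:
        # JSON-RPC notifications carry a "method" but no "id".
        if "id" not in msg and msg.get("method") == "notifications/tools/list_changed":
            self.tools = self._load()


# Simulated server state; the agent needs no new code to use "create_event".
server_tools = [{"name": "search_docs"}]
cache = ToolCache(lambda: list(server_tools))
server_tools.append({"name": "create_event"})
cache.handle_message({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
print(sorted(cache.tools))  # ['create_event', 'search_docs']
```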

With APIs, by contrast, any change (like a new endpoint or a different parameter format) means the client has to be updated manually. I think that limits their use in fast-changing, AI-driven environments.

MCP vs API in Agent and Workflow Architectures

In 2026, many applications are built as multi-stage workflows or agent systems rather than simple request-response services. In these architectures:

- APIs serve as the integrations that specific steps in a workflow or agent routine call. Examples include dispatching a model call, posting to a queue, or querying a vector database.

- MCP provides the standard interface through which agents discover and invoke those capabilities, so the same tools can be reused across agents and workflows.

Instead of choosing MCP vs API, teams often use both: APIs for fine-grained capabilities and MCP to expose those capabilities to agents in a uniform way.

| Aspect | MCP | API |
| --- | --- | --- |
| User Type | AI agents (LLMs) | Human developers |
| Discovery | Dynamic | Manual |
| Standardization | High (one format for all tools) | Low (each API is different) |
| Adaptability | Real-time, auto-adjusts | Manual updates needed |
| Usage Layer | Wraps APIs | Base communication layer |

How do MCP and APIs Work Together?

There are clear differences, but MCP and APIs can work together. I’ll show you how below:

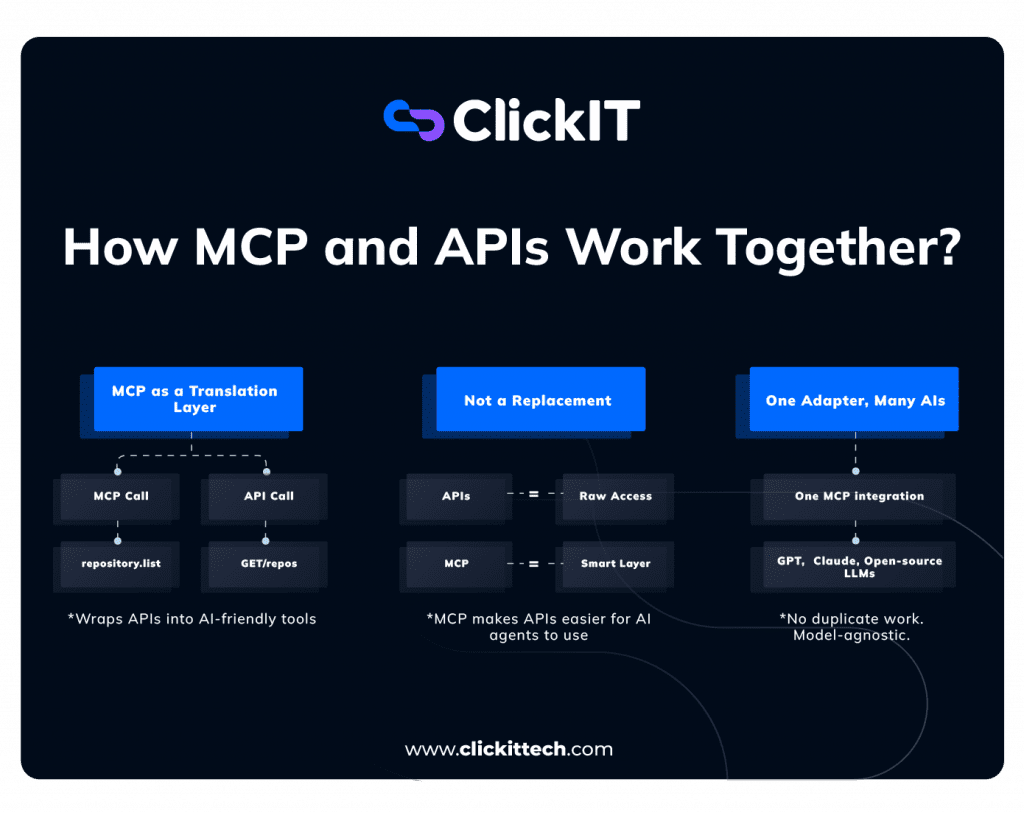

MCP as a Translation Layer

MCP servers often wrap existing APIs. Many are simply translation layers that convert MCP tool calls into REST API calls behind the scenes. For example, an MCP server connected to GitHub might expose a repository.list tool. Under the hood, it translates each call into a REST request such as GET /user/repos.
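Here is a minimal sketch of such a translation layer, written against Python’s standard library instead of an MCP SDK. The repository.list tool name and its per_page argument are assumptions for illustration. GET /user/repos is GitHub’s REST endpoint for listing the authenticated user’s repositories. Only translate_call runs offline; handle_tool_call would need a real token and network access.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Minimal sketch of an MCP server's translation layer for a hypothetical
# "repository.list" tool backed by GitHub's REST API.
GITHUB_API = "https://api.github.com"


def translate_call(params: dict, token: str) -> Request:
    """Turn the params of an MCP tools/call request into a GitHub REST request."""
    if params.get("name") != "repository.list":
        raise ValueError(f"unknown tool: {params.get('name')}")
    args = params.get("arguments", {})
    query = urlencode({"per_page": args.get("per_page", 30)})
    return Request(
        f"{GITHUB_API}/user/repos?{query}",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )


def handle_tool_call(params: dict, token: str) -> dict:
    """Run the REST call and wrap the result in an MCP tool result (text content)."""
    with urlopen(translate_call(params, token)) as resp:
        repos = json.load(resp)
    names = "\n".join(repo["full_name"] for repo in repos)
    return {"content": [{"type": "text", "text": names}], "isError": False}


req = translate_call({"name": "repository.list", "arguments": {"per_page": 5}}, "TOKEN")
print(req.get_method(), req.full_url)  # GET https://api.github.com/user/repos?per_page=5
```

The agent only sees the MCP side: a tool name going in and a text result coming back. Authentication, the endpoint path, and GitHub’s JSON format stay inside the server.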

No Replacement, but Enhancement

Rather than trying to replace APIs, MCP enhances their usability in LLM ecosystems by making them more discoverable, uniform, and flexible. So in my opinion, it’s not MCP vs API in a winner-takes-all sense. They’re actually complementary layers in my AI stack.

As I see it, APIs provide raw access to external systems, and MCP reshapes that access into a structure that AI agents can reason over and use.

One Adapter, Many AIs

Because MCP follows a standard format, one integration works across multiple AI models and agents. Let’s say I build an MCP adapter for a database. That adapter can then be used by a GPT-based AI assistant, a Claude-powered chatbot, or an open-source LLM running locally.

I don’t need to rebuild the integration each time. This model-agnostic design saves my engineering time and accelerates AI development.

When to Choose MCP vs API?

• Choose an API when you need granular control, custom integration, or want to build a specific service from scratch. APIs are ideal for embedding capabilities into bespoke applications, tooling, or internal microservices.

• Choose MCP when your consumer is an LLM or AI agent that needs to discover and call tools, or fetch context, at runtime. One MCP server works with any MCP-compatible host, so you avoid building a separate integration per model.

• Choose a hybrid approach when you want both. Keep the API as the foundation for your applications and services, and wrap it in an MCP server so agents can use the same capabilities. Many MCP servers are thin wrappers around existing APIs, so this is often the most practical path.

FAQs about MCP vs API

Will MCP replace APIs?

No. MCP is not here to replace APIs. An MCP server often wraps an existing API and translates it into a format that AI agents can understand and use, so MCP actually builds on APIs.

Is MCP a REST API?

No. MCP messages are JSON-RPC 2.0, carried over stdio for local servers or over HTTP for remote ones. The protocol defines its own methods for exposing tools and context in a way LLMs can reason over.

When should I use MCP vs an API?

Use an API when you’re building traditional software applications where human developers write code to consume known endpoints. Use MCP when your primary “user” is an LLM that needs dynamic access to external context or tools.