LLM cost optimization is about minimizing the costs associated with large language models while maintaining or improving performance. This includes utilizing Retrieval-Augmented Generation (RAG) to access external data sources, employing smaller models for specific tasks through model distillation, caching responses semantically, and using techniques such as quantization and advanced prompt engineering.

- Choose the right model for each job; don’t overpay for GPT-4 if a lightweight model or a fine-tuned open-source alternative can do the job at a fraction of the cost.

- Optimize prompts to be concise and specific, so you send fewer tokens (each token incurs a cost). Trim unnecessary words and leverage techniques like instruction chaining only when necessary, as every token (input and output) incurs a fee.

- Employ infrastructure and caching techniques: run non-urgent tasks in batch (cheaper) modes, utilize auto-scaling to prevent idle compute, and cache frequent queries with tools like GPTCache to avoid redundant calls.

- Use monitoring tools to track usage and spend (e.g., WandB’s WandBot, Honeycomb). They help identify which prompts or models are driving costs so you can adjust in real-time.

In my work with tools like LangChain and LlamaIndex, the OpenAI SDK, and Hugging Face Transformers, I’ve learned how different frameworks affect compute, latency, and cost, especially when dealing with large resource-hungry LLMs.

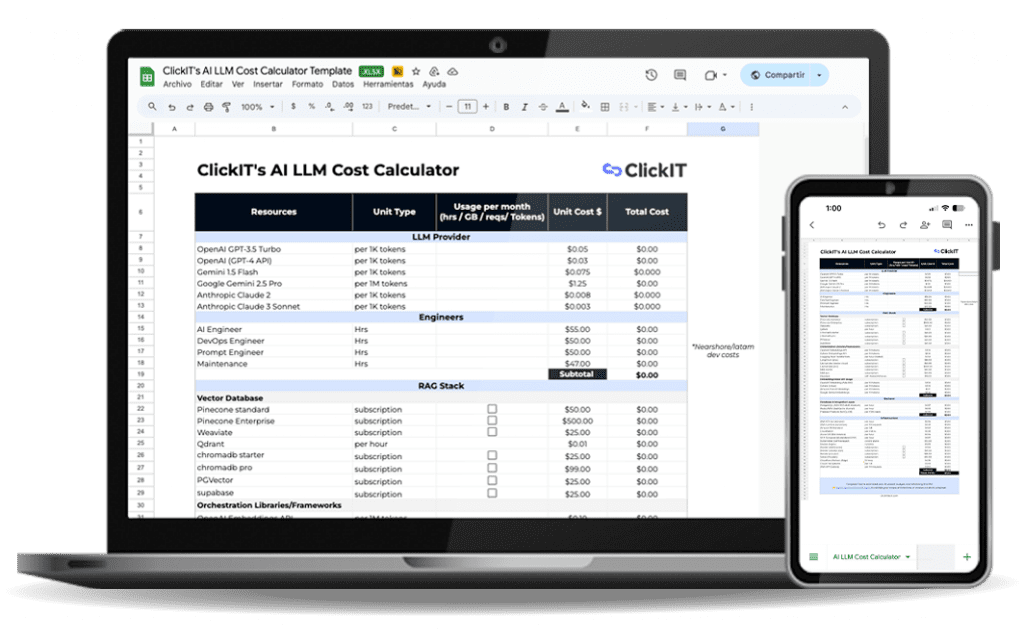

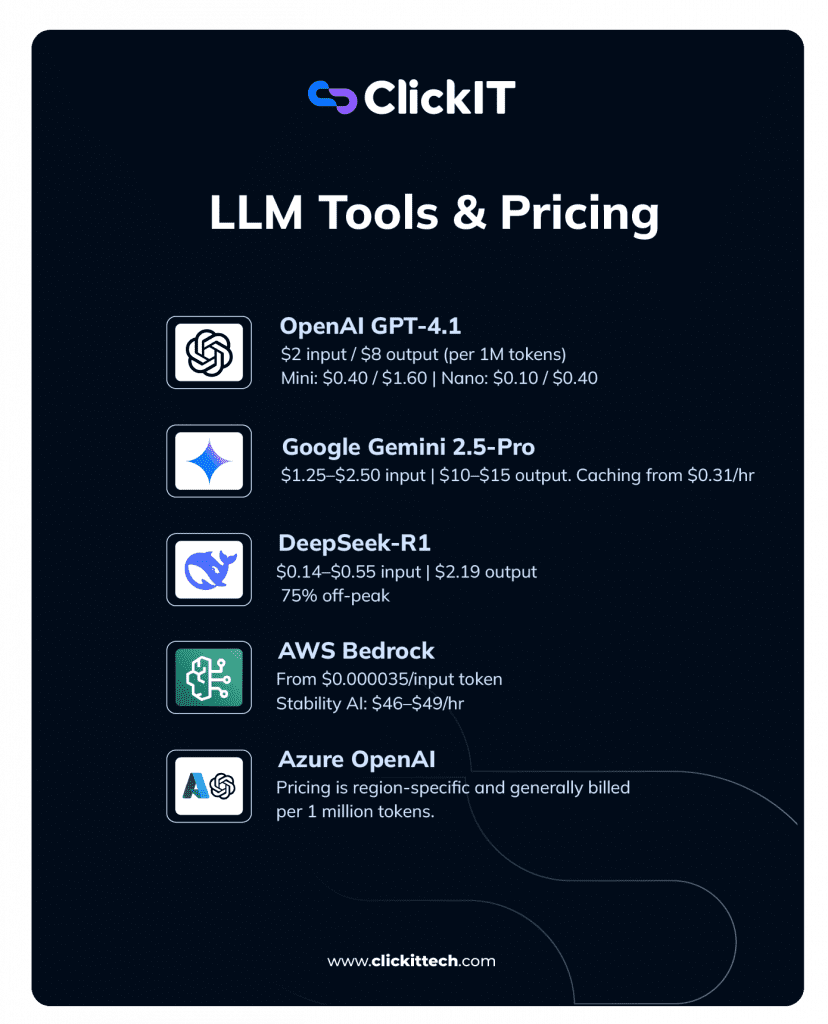

So in this quick post, I’m going to talk about the importance of LLM cost optimization and compare LLM pricing from OpenAI, Google, DeepSeek, AWS, and Azure.

- What LLM Pricing Tools Are Available, and How Do You Choose the Best One?

- What Are Effective LLM Cost Optimization Strategies?

- FAQs

| Category | Strategy | Tools / Examples |

| Model Selection | LLMLingua, manual prompt compression, Prompt rewriting, specific task framing | GPT-3.5, Claude Instant, fine-tuned SLMs, LLaMA, Mistral, Phi-2Open-source LLMs (e.g., Ollama, vLLM) vs. SaaS APIs (OpenAI, Anthropic, Cohere) |

| Prompt Optimization | Align compute to usage pattern. Track and adjust based on actual usage | Minimize token usage. Avoid verbose/redundant prompts |

| Infrastructure Tuning | Align compute to usage pattern.Track and adjust based on actual usage | Real-time vs batch setups on CPUs/GPUs/TPUs.Auto-scaling, usage-based infrastructure decisions |

| Caching | Semantic caching to reduce redundant API calls. | GPTCache, LangChain cache layer.Metrics: hit ratio, latency, recall |

| Retrieval-Augmented Generation | Use RAG to reduce token payloads | Vector DBs (Pinecone, Weaviate, FAISS), LangChain, LlamaIndexRAG pipelines: Embed → Store → Retrieve → Prompt |

| Monitoring & Observability | Track LLM usage, latency, and spend | WandBot (Weights & Biases), Honeycomb, Paradigm, Prometheus + GrafanaCost-per-query analysis, inefficient prompt detection |

What LLM Pricing Tools Are Available, and How Do You Choose the Best One?

Large Language Models (LLMs) are expensive to run, especially at scale. The main factors that drive their cost are the size of the model, the number of requests you make, and the compute resources required to generate each response.

Most LLM providers use a token-based pricing model. Tokens represent chunks of text (typically a word, part of a word, or sometimes even punctuation). You’re charged for input tokens (the text you send in, like your prompt) and output tokens (the model’s response). The more tokens involved, the more you pay. Some providers also offer tiered pricing plans based on volume, with lower per-token rates for higher usage tiers.

Worried about LLM costs?

Let our AI engineers help you optimize every token.

👉 Hire AI experts

OpenAI LLM pricing

OpenAI charges based on the number of tokens processed, covering both input (your prompt) and output (the model’s response).

| Model | Input / 1M Tokens | Output / 1M Tokens | Cached Input / 1M Tokens |

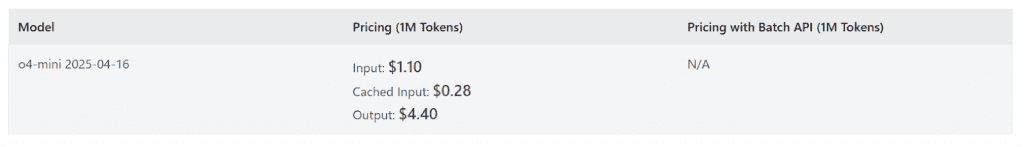

| GPT-4.1 | $2.00 | $8.00 | $0.50 |

| GPT-4.1 Mini | $0.40 | $1.60 | $0.10 |

| GPT-4.1 Nano | $0.10 | $0.40 | $0.025 |

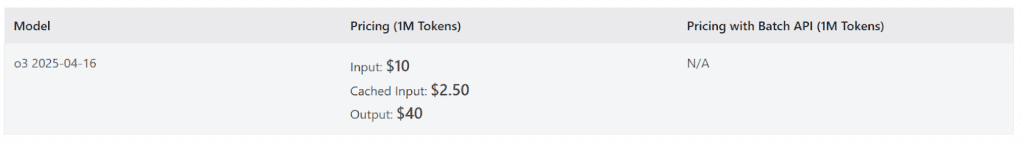

| OpenAI o3 | $10.00 | $40.00 | $2.50 |

| OpenAI o4-mini | $1.10 | $4.40 | $0.275 |

If your use case doesn’t require real-time responses, OpenAI’s Batch API offers 50% off both input and output token costs. This asynchronous method processes requests over a 24-hour window, making it ideal for large non-urgent workloads like bulk document processing or research queries.

Google Gemini 2.5-Pro

Pricing for Google’s Gemini 2.5-Pro is based on the number of tokens in your prompt and response, with rates increasing for larger prompt sizes.

| Usage Type | ≤ 200K Tokens | > 200K Tokens |

| Input | $1.25 | $2.50 |

| Output (incl. thinking tokens) | $10.00 | $15.00 |

| Context Caching (per hour) | $0.31 | $0.625 |

| General Caching (flat rate) | — | $4.50 / 1M tokens/hr |

DeepSeek’s DeepSeek-R1

With DeepSeek-R1, a reasoning-optimized model, I paid $0.14 per million input tokens when there was a cache hit and $0.55 when there wasn’t. Output tokens cost $2.19 per million regardless of cache status.

Interestingly, DeepSeek’s off-peak pricing offers a 75% discount on requests completed between 16:30 and 00:30 UTC daily. The discount is applied based on the completion timestamp of each request (not when it’s submitted). So, timing your requests around this window can save you a lot of money.

AWS Bedrock pricing

I’ve had to factor in two pricing models: on-demand (which includes batch processing) and provisioned throughput. On-demand is straightforward; you’re charged per 1,000 tokens processed, and it’s good for fluctuating workloads. You can use this for lighter, ad-hoc inference tasks.

Provisioned throughput is the way to go when you need consistent performance. It requires committing to a one-month or six-month reservation of model units, billed hourly. The longer the commitment, the lower the rate, so it’s good for production-level usage.

Bedrock pricing also varies by model provider. For example:

- AI21 Labs models range from $0.0002 to $0.0188 per 1,000 tokens.

- Cohere charges between $0.0003 and $0.0020 per 1,000 tokens.

- Amazon’s models, like Nova Micro and Nova Pro start at $0.000035 per 1,000 input tokens, up to $0.0032 per 1,000 output tokens.

- Stability AI’s SDXL1.0 is compute-heavy and billed hourly. $49.86/hr with a 1-month commitment or $46.18/hr with a 6-month term.

Beyond inference, I’ve seen costs add up through model customization, storage (like S3 for datasets), and data transfer, especially when using other AWS services or Bedrock features like Guardrails, Flows, or Knowledge Bases.

Check AWS’s official pricing page and regional availability listings for more.

Azure OpenAI pricing

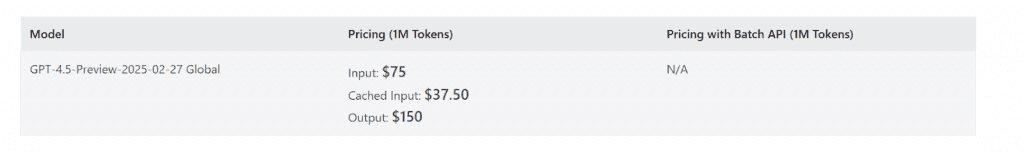

Azure has several OpenAI models under different categories, each for specific tasks. Pricing is region-specific and generally billed per 1 million tokens, unless otherwise stated. Below are the key offerings:

- o3: Pricing per 1M tokens is as in the image below.

- O4-mini: Pricing per 1M tokens is as in the image below.

- GPT-4.1 series: A general-purpose model with a 1 million token context window. Pricing per 1M tokens is as in the image below.

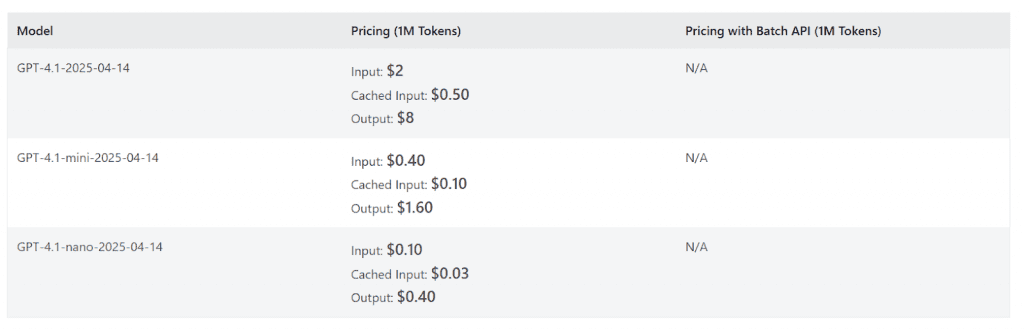

- GPT-4.5: 128K context and an October 2023 cutoff. Pricing per 1M tokens is as in the image below.

For other models like o1, o3-mini, and region-specific pricing details, you can check the full list here.

Need a smarter AI setup?

We build efficient, low-cost LLM solutions with LangChain, RAG, and more.

👉 Talk to our team

What Are Effective LLM Cost Optimization Strategies?

With new models and architectures dropping every week, it’s easy to get lost. Here are some LLM cost optimization strategies I would choose if I had to make usage more efficient without sacrificing performance:

Choose the Right Model for the Job

One of the best ways to reduce LLM costs is not to overengineer your solution. Bigger models like GPT-4 or o3 can be cool, but they come with higher costs and more compute overhead. In many cases, you don’t need all that power.

I’ve found that matching the model to the task goes a long way. If you’re running basic tasks like sentiment analysis, Named Entity Recognition (NER), or text summarization, a smaller, task-specific model (fine-tuned for that job) can do just as well or better. It also runs faster and is way cheaper.

How I think about LLM model selection:

- Use-Case First: Before choosing a model, I ask: What’s the task? Do I need general reasoning or something narrow like classification or retrieval?

- Cost vs. Value: If I’m building something that runs constantly (like a chatbot), I factor in per-token cost, latency, and inference efficiency. Sometimes a less “powerful” model is the best value.

- Open-Source vs. SaaS: I weigh options like running a fine-tuned LLaMA model on my own infrastructure (with potentially lower cost at scale) versus calling an API like GPT-4. Security, usage patterns, and hosting costs all matter here.

Ultimately, choosing the right model for your use case, not what’s popular, will go a long way toward optimizing LLM cost.

Optimize Your Prompts to Reduce Token Usage

Long-winded or poorly structured prompts can quietly add up since you’re charged based on the number of tokens processed (input + output). Tokens include everything (words, punctuation, even spaces), so every character counts.

To save, I aim for prompts that are concise, specific, and well-scoped. No extra fluff, no vague instructions.

Prompt compression tools like LLMLingua can also help reduce prompt length. That said, advanced prompting techniques like chain-of-thought (CoT) and in-context learning (ICL) naturally make prompts longer. They’re often worth it for complex tasks, but be aware of the cost tradeoff. You can read more about those techniques in our advanced prompt engineering techniques blog if you’re looking to go deeper.

Employ Cost-Saving Techniques in Infrastructure & Usage

I’ve found that matching infrastructure to how the system is actually used can save a lot.

- Separating batch workloads from real-time ones is a good strategy. That’s because batch jobs can run on lower-cost setups without sacrificing performance, while real-time use cases get the faster infrastructure they need.

- Also regularly monitor usage and spending patterns. That way, I can adjust resources based on demand, avoid over-provisioning, and ensure that I’m not paying for more than I use.

- Use is semantic caching. In many applications, users repeat the same kinds of questions (greetings, FAQs, or other standard prompts). Instead of sending those to the LLM every time, use tools like GPTCache or Langchain’s caching utilities to store and serve previously generated responses. This reduces LLM calls, token usage, and response time.

Caching isn’t perfect (you might get false hits or misses), but GPTCache gives you metrics like hit ratio and latency to keep things in check.

Use RAG instead of sending everything to the LLM

Instead of loading the entire context into the prompt, RAG pulls only the most relevant information from a vector database and feeds that to the model. This reduces token usage.

Here’s how RAG works in LLM cost optimization

- When a user sends a query, the RAG system first searches a pre-indexed database to find the most relevant snippets or passages. These are then combined with the original query and passed to the LLM. With this extra context, the model generates a more accurate and informed response.

- This offloads much of the heavy lifting to the retrieval layer. Instead of asking the LLM to “remember” everything, we’ll only pass in what’s needed for that moment.

RAG also improves quality. Since the LLM generates based on real-time, relevant data it can produce better responses without relying on its full training set.

Of course, RAG does take some setup and ongoing maintenance, but in my experience, the long-term savings and the boost in response quality make it more than worth the effort.

Use Monitoring Tools and Cost Management

Monitoring helps you:

- See which models are consuming the most budget

- Detect inefficient prompts that generate too many tokens

- Track cost-per-query across different use cases

- Find opportunities to cache or switch to lower-cost models

Tools like Weights & Biases’ WandBot, Honeycomb, and Paradigm offer observability platforms that track metrics like token usage, latency, and cost-per-query. These insights help you identify inefficiencies and guide optimization efforts.

You can also integrate general monitoring tools like Prometheus and Grafana to track system-level performance and resource utilization.

Want to save on LLM costs?

ClickIT’s AI team builds fast, scalable, and cost-optimized solutions.

🔗 Let’s work together

FAQs

LLM cost optimization is about reducing the cost of using large language models without sacrificing output quality, through model selection, prompt tuning, caching, infrastructure tuning, and monitoring.

Input tokens are in the prompt you send to the LLM, output tokens are in the response. Both count towards the cost, including tokens generated during intermediate reasoning.

Compare input/output token rates, context limits, and speed. Also, consider your content length and usage patterns.

API pricing is the cost charged by a provider when you use their model via API. Pricing varies by token usage, model, call volume, and subscription tiers.