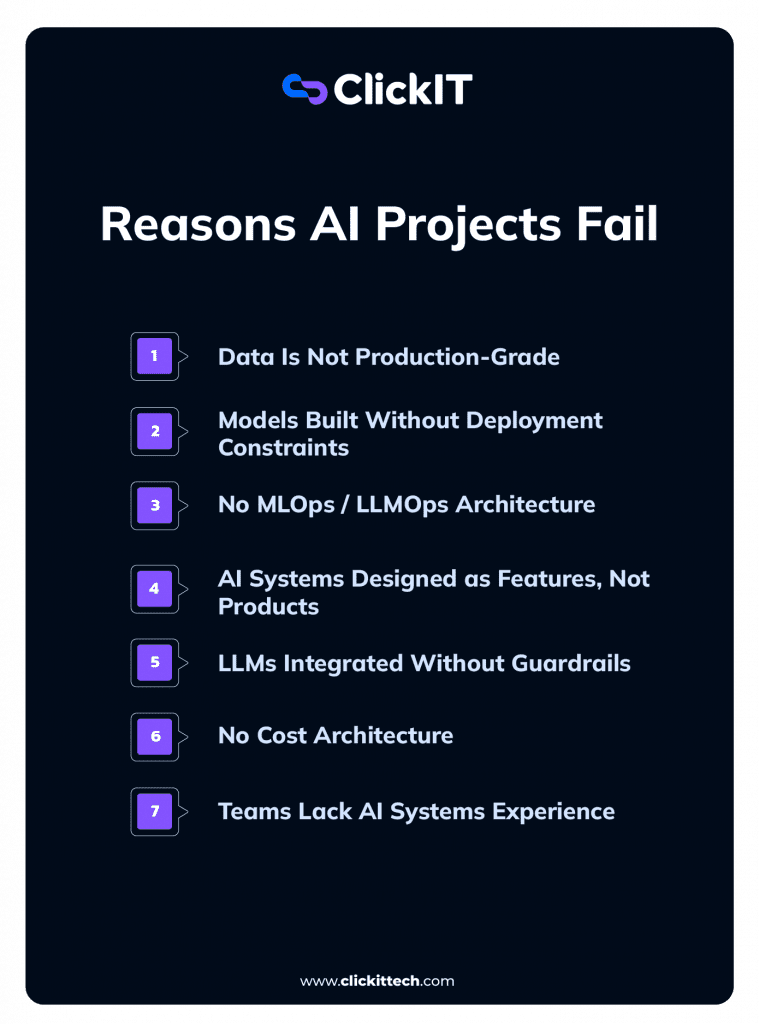

Your next AI project is more likely to fail than succeed. Gartner, MIT, and RAND have all published research showing that somewhere between 60% and 95% of AI initiatives either stall, get abandoned, or die after launch. That number is expected to stay stubbornly high through 2026. So, why do AI projects fail?

When people say an AI project failed, they usually mean one of three things:

- It never reached production

- It was deployed and abandoned,

- It was deployed but never adopted/trusted.

After watching hundreds of AI initiatives across startups and large enterprises, I’ve noticed the problem is rarely the model itself. It’s the system around it.

- Summary Table of Why AI Projects Fail and Their Solutions

- Why is there confusion about PoC in AI Projects?

- Failure #1: Data Is Not Production-Grade

- Failure #2: Models Built Without Deployment Constraints

- Reason #3 Why AI Projects Fail: No MLOps / LLMOps Architecture

- Failure #4: AI Systems Designed as Features, Not Products

- Failure #5: LLMs Integrated Without Guardrails

- Failure #6: No Cost Architecture

- Failure #7: Teams Lack AI Systems Experience

- ClickIT as a Nearshore AI Company

- What Successful AI Teams Do Differently to Avoid Failure (Technical Playbook)

Summary Table of Why AI Projects Fail and Their Solutions

TL;DR, here’s a quick table for each failure and how to avoid it:

| Failure | How to Avoid It |

| Data is not production-grade | -Treat data infrastructure as part of the product. -Implement feature stores for unified feature definitions and enforce data contracts -Use automated data validation to catch quality issues. |

| Models Built Without Deployment Constraints | -Design with latency budgets, cost per inference, and throughput from day one. -Use model quantization (FP32→INT8) and distillation to reduce inference costs. -Implement batching and caching, plan for token growth in LLMs. |

| No MLOps/LLMOps Architecture | -Always assume new models will drift. -Use model registries to track versions and lineage. -Build automated CI/CD pipelines with model versioning and registries |

| AI Systems Designed as Features, Not Products | -Design AI as a full system, end-to-end. -Implement HITL workflows with risk-based triggering and clear Manual Fallback paths. |

| LLMs Integrated Without Guardrails | -Always build constraints into the architecture. -Use Tool-Scoped Permissions, deterministic validation layers, and Structured Outputs to constrain model behavior. |

| No Cost Architecture | -Implement caching strategies and Intelligent Model Routing to send simple tasks to cheaper, smaller models. -Use tiered intelligent routing to direct simple queries to smaller models and complex queries to frontier models. -Set hard API limits to prevent runaway spending. |

| Teams Lack AI Systems Experience | -Build Cross-Functional Teams with clear distinctions between ML Engineers, AI Architects, and Platform Engineers. -Make use of nearshore teams for their vast and cost-effective expertise. |

Why is there confusion about PoC in AI Projects?

One of the biggest misconceptions I see is confusing proof of concept (PoC) success with production readiness.

A PoC validates that something can work in a controlled environment. Production answers much harder questions: can this survive real users, messy data, shifting requirements, and operational constraints?

Sadly, most AI PoCs I’ve seen are designed as disposable demos. They have impressive accuracy numbers, but collapse the moment they hit production. That’s because they assume perfectly structured data, stable inputs, and cooperative users.

Important elements like logging, monitoring, versioning, and guardrails are deferred until “later,” which usually means never. If your PoC doesn’t have observability from the first commit, it’s not a prototype of a real system.

Another common confusion I see is mistaking model accuracy for business impact. A high offline accuracy score means very little if it’s not trusted, adopted, or integrated into workflows. These systemic failures explain why so many teams walk away scratching their heads after “successful” pilots.

Click IT’s AI Playbook is designed to catch the problems that cause AI project failure before they hit production. If you’re building or planning AI without a clear risk picture, you’re already exposed.

Failure #1: Data Is Not Production-Grade

The fastest way to kill an AI project is to underestimate how different production data is from PoC data. I always say if your data isn’t production-grade, your AI system never will be.

In theory, your dataset is clean, consistent, and well-labeled. In production, it’s noisy, incomplete, delayed, and constantly changing.

Models trained on idealized data struggle with volume, velocity, and variability, which leads to immediate performance degradation and model drift.

I usually see three technical issues show up repeatedly.

No Unified Data Schema

When teams pull from multiple systems with different formats and definitions, the model learns from inconsistent signals. Over time, the pipelines built to reconcile these differences become fragile and expensive to maintain, creating long-term technical debt.

Inconsistency in Feature Definitions

When you’ve got data scientists, engineers, and business stakeholders using the same term to mean different things, training and serving pipelines becomes tricky.

Unfortunately, this mismatch is usually not discovered until deployment, when the prototype cannot be integrated into production systems without major rework.

Training-Serving Skew

Many AI project prototypes are trained on historical or curated data that does not reflect live inference conditions. This is called training-serving skew.

Label leakage can then inflate early results by letting the model learn from signals that won’t be available at inference time. From day one, inference data behaves differently, and performance drops.

So what actually works?

Treating data infrastructure as a first-class part of the system, not an afterthought. Feature stores help by creating a single source of truth for features across both training and inference, significantly reducing training-serving skew and enabling reproducible experiments through versioning.

In addition, data contracts help formalize expectations between data producers and consumers, preventing schema changes from quietly breaking downstream models in production.

On top of that, implementing automated data validation enforces quality at scale. This has actually helped my team catch incomplete, out-of-range, and malformed data before it reaches a model.

Failure #2: Models Built Without Deployment Constraints

I’ve seen plenty of AI models that work beautifully in notebooks and then die in production because they were never designed with deployment constraints in mind.

Latency is usually the first reason why that happens. Every production system has a latency budget, even if nobody has written it down. When a model exceeds that budget, it becomes the bottleneck.

I’ve seen this break otherwise solid projects in search, fraud detection, recommendations, and decision automation. If a model cannot return a response within the time window of the surrounding workflow, it doesn’t matter how accurate it is. Because the system cannot meet its SLAs, the feature gets disabled or removed.

What is the Cost Per Inference in AI Projects?

Cost per inference is another constraint teams discover too late, and is a common reason why AI projects fail. Today, inference accounts for 80 to 90 percent of total AI compute spend, according to MIT. To reduce these costs, your team should consider implementing model quantization.

Converting models from 32-bit floating-point (FP32) to 16-bit (FP16) offers 2x reduction, while quantizing to 8-bit integer (INT8) achieves up to 4x memory reduction with less than 1% accuracy loss.

Model distillation is another common approach. This is when a smaller “student” model is trained to replicate the behavior of a larger model, allowing your team to serve most requests at a fraction of the cost while reserving heavyweight models for genuinely complex cases.

As usage grows, even small inefficiencies compound fast. A model that looks affordable at low volume can burn through cloud budgets at scale.

Many projects die not because the model is wrong, but because the cost per query exceeds the value it creates. This is especially common when teams fail to explicitly model the tradeoffs between model quality, inference performance, and economic cost.

Why AI Projects Fail for Lack of Batching Strategy?

Without a batching strategy, GPUs spend more time waiting than computing. This effectively means throughput collapses, effective cost per request spikes, and expensive infrastructure sits underutilized.

For example, I once witnessed a team shift from single-request experiments to real-time production traffic, only to discover that their architecture could not handle concurrent workloads. The result was a painful redesign after launch.

If you’re working with LLM-based systems, then there are other failure modes to consider.

Token explosion is a frequent one. That is:

- Uncontrolled context growth caused by wasteful retrieval strategies

- Excessive padding, lack of caching, and tokenizer drift across model versions can increase costs

- This leads to performance degradation

- And push requests past context limits

Eventually, you start seeing 429 rate-limit errors, truncated responses, or answers that feel “off” in ways that are hard to debug.

Prompt Brittleness in LLMs

Prompt brittleness is when system behavior depends on fragile prompts with no clear evaluation strategy. This can make outputs unreliable. Small changes in input can lead to unpredictable responses.

I recommend:

- Using structured prompts (like XML, JSON structure, or strict templates),

- Enforcing schema-constrained outputs (tools like Instructor, Guardrails AI, or JSON-mode are great)

- Prompt versioning (Git)

- Automated regression tests (such as running evaluation suites like prompt evaluation datasets) to address it.

Nondeterminism Breaks Traditional QA

For critical paths, nondeterministic outputs create real operational risk. Inconsistent results break traditional testing and QA workflows. This means that the same input no longer guarantees the same output, rendering regression testing meaningless.

Parsers can also fail. This is when a single unexpected token or format shift can crash downstream services. In addition, safety checks become unreliable (guardrails pass one run and fail the next) and compliance guarantees erode (you can’t confidently attest to behavior you can’t reproduce).

In multi-agent systems, this variability often cascades (e.g., one agent’s slightly incorrect output becomes another agent’s trusted input), amplifying small errors into system-wide failures that are difficult to trace or roll back.

Multi-Modal Model Failures in AI

If you’re working with vision-language models or other multi-modal systems, there’s an additional layer of brittleness.

Common issues here include vision encoders misinterpreting context (a product photo mistaken for a document), cross-modal hallucinations (the model “sees” text that isn’t in the image), and modality imbalance (where the model over-relies on one input type and ignores the other).

These failures are harder to catch in testing because they often emerge only with specific combinations of inputs that your evaluation set didn’t cover.

How to solve it?

- Have a production mindset from the onset

- Model inference costs early before launch.

- Design fallback logic when models fail or time out.

- Plan for graceful degradation so the system remains usable even when AI components misbehave.

At scale, these decisions matter more than model choice.

Reason #3 Why AI Projects Fail: No MLOps / LLMOps Architecture

The pattern I see over and over is teams treating AI like a static asset. They deploy a model, ship a demo, and move on. That mental model sometimes works for traditional software (not always), but it is one of the reasons why AI projects fail.

Models are dynamic systems. As real-world data shifts, their behavior shifts with it. According to Microsoft, AI model performance degrades by up to 40 percent per year due to data drift alone! It’s even possible for a model to become outdated in a week.

That’s why implementing MLOps or LLMOps helps to improve your AI over time.

Continuous Integration and Continuous Deployment (CI/CD) in MLOps/LLMOps

A model that performs correctly in staging can fail in production due to differences in data formats, dependency versions, or infrastructure constraints such as exhausted connection pools under real traffic.

Without automated integration and deployment pipelines, these issues go undetected until users are already affected. Continuous testing, including data validation, performance checks, and latency profiling, is what prevents regressions from reaching production.

In practice, this means you should have automated model pipelines that validate inputs, test inference behavior under load, and deploy new versions gradually using canary releases rather than full cutovers.

Lack of Model Versioning as a Reason Why AI Projects Fail

Model versioning is another common reason why AI projects fail. If you can’t trace which combination of model weights, training data, feature definitions, and code produced a specific output, your debugging becomes largely guesswork.

What happens next is your teams retrain models, overwrite artifacts, and may accidentally ship worse versions without realizing it.

So, how do you solve this?

- Implement Production-Grade Model Registries:

It’s important to use a model registry to track model versions, training data lineage, and evaluation metrics over time. Production-grade model registries like MLflow, Neptune.ai, or AWS SageMaker Model Registry provide versioning, lineage tracking, and deployment management.

This enables comparing models side-by-side, rolling back safely, and auditing behavior after incidents.

- Build Continuous Observability:

Observability also needs to go beyond uptime or accuracy alone. Reliable teams continuously monitor model-level signals, including data and concept drift, bias shifts, confidence distributions, output stability, latency, and cost per request.

Tools like Arize AI, Fiddler, or WhyLabs automate this monitoring and alert teams to degradation or fairness issues before users lose trust.

The practical implication is to plan for the inevitable failure. It’s highly likely that new models will fail in production. Without fast rollbacks to a known-good version, outages last longer than they should, stakeholders lose confidence in the system, and your engineering time is actually spent on incident response rather than building.

LLMOps follows the same principles but with slightly different failure modes.

- Prompts need versioning because small changes can materially affect behavior.

- Evaluations should run automatically on representative inputs to catch regressions early.

- Hallucinations need systematic monitoring, and token consumption must be tracked alongside traditional performance metrics.

With proper MLOps and LLMOps in place, your AI systems become debuggable, testable, and operable over time, rather than fragile demos that degrade after launch.

Failure #4: AI Systems Designed as Features, Not Products

Another common reason AI projects fail is that teams treat AI like a plugin you bolt onto an existing product rather than a system you design end-to-end. That almost always leads to architectural problems later.

Importance of HITL Design in AI Projects

One of the first gaps is the absence of a human-in-the-loop (HITL) design. HITL refers to systems where humans actively participate in supervision or decision-making at specific points in the AI workflow.

In practice, this is how you add accountability, context, and judgment to automated decisions. Without it, your system has almost no corrective mechanism. Bad outputs flow directly into downstream processes or back into retraining data, accelerating bias and reinforcing incorrect behavior.

Hence, the model starts optimizing for data patterns rather than real-world intent, and users quickly stop trusting it. Unfortunately, once trust is gone, adoption follows.

How to solve it?

To design HITL correctly, I recommend that your team do the following:

- Explicitly define where humans must approve, override, or correct model outputs. This is called risk-based triggering. For example, in high-risk decisions or low-confidence predictions (like in healthcare diagnostics, legal compliance, or financial transactions).

- Design confidence thresholds and escalation paths (e.g., if model confidence falls below a threshold, route the task to a human reviewer rather than forcing an automated decision).

- Make sure human feedback is structured and logged (labels, corrections, reasons), so it improves future behavior rather than introducing noisy retraining data.

- Lastly, make your HITL workflows operationally viable. What I mean is have clear ownership, reasonable review latency, and tooling that fits your already existing processes.

No Measurable Thresholds in AI

Another architectural flaw I see is the lack of measurable thresholds for safe or reliable operation. Many systems don’t know when they’re operating outside their competence. Without calibrated confidence scoring, models confidently produce incorrect outputs instead of signaling uncertainty.

In high-stakes environments such as autonomous driving, fraud detection, credit underwriting, or clinical triage, failing to trigger alerts or escalate when performance falls below acceptable thresholds can lead to serious financial or physical harm.

Not Designing Manual Fallback Paths

Concurrently, when AI is treated as a feature, teams also forget to design manual fallback paths. For example, if a user flags an incorrect product classification, a faulty support response, or a misrouted transaction, there’s usually no override mechanism.

Without a way to intervene, errors persist, operations stall, and teams end up disabling the system entirely.

To avoid these two architectural flaws:

- Use calibrated confidence scoring to define when the system should defer

- Implement explainability hooks to make decisions auditable.

- Build feedback loops that allow humans to correct mistakes and feed that signal back into retraining.

Failure #5: LLMs Integrated Without Guardrails

Large language models generate outputs based on patterns in their training data. Without constraints, they can produce harmful, biased, or incorrect responses, causing technical, ethical, and legal risks.

A striking example we’ve all just seen in 2026 was when xAI’s Grok generated nonconsensual, sexualized “undressing” images of users, including minors. That’s a glimpse of what happens when systems operate without sufficient guardrails.

Other common production failures I’ve experienced include prompt injection, where attackers manipulate the model to ignore its core instructions. This can expose system prompts or trigger unintended actions.

Over-permissive tool access is another issue. It allows an AI to misuse APIs, web searches, or file systems, putting sensitive data at risk.

Additionally, an LLM without guardrails can cause text-to-SQL failures, leading to malicious queries that access, modify, or delete database records, bypassing traditional controls.

Security oversights, such as missing sandboxing that isolates the model from critical systems and absent role-based context controls that prevent the model from seeing data it shouldn’t, make these risks worse.

How to Build Guardrails into AI

I always recommend building constraints into your AI from the start.

- Firstly, use tool-scoped permissions to enforce least-privilege access, so even if a model is manipulated, it can only act within narrow boundaries.

- Also, implement read-only database views to limit what the AI can see and change. Structured outputs, like JSON schemas, turn probabilistic LLM outputs into predictable, validated formats.

- Finally, I recommend adding deterministic validation layers to check actions against business rules and catch unsafe operations before they reach production.

With these guardrails, your LLM can become a dependable part of your system instead of a stochastic parrot that unexpectedly compromises safety, data, or user trust.

Failure #6 No Cost Architecture

Earlier, I gave a brief overview of the problems that come from the cost in AI development. I’ll go a bit deeper here.

One of the most overlooked reasons why AI projects fail is cost. Teams usually underestimate the major AI and LLM cost drivers like token usage growth, retraining frequency, and data egress.

Most failed AI initiatives I’ve encountered are driven top-down. An executive sees a demo, mandates “AI everywhere,” and the team scrambles to bolt a model onto existing workflows.

But when the cloud bill comes due, your CFO shuts down the project before it can deliver any value.

Cost problems like token counts can balloon due to inefficient prompts or unbounded context windows. Models also need frequent retraining, or they degrade into the No MLOps / LLMOps problem we discussed earlier. And moving data in and out of cloud services (data egress) can add tens of thousands of dollars to monthly bills if left unchecked.

Thankfully, there are some technical strategies I’ve seen work that can reduce these costs.

How to Optimize Costs When Building Your AI Project

- Caching is essential. By storing and reusing previous inputs and outputs, you bypass expensive recomputation. Semantic caching, prompt caching, response caching, and key-value caching all reduce latency while lowering operational costs.

- Intelligent model routing also helps. Instead of sending every request to your most expensive model, successful teams explicitly route simple tasks to smaller, cheaper models and escalate only genuinely complex queries to higher-capacity models.

This means defining routing rules based on task complexity, confidence thresholds, latency requirements, or user impact. Use strategies like

- Semantic and Intent-Based Routing. That is, use a lightweight embedding model (like all-MiniLM-L6-v2) or a “router LLM” to analyze the user’s intent in milliseconds.

- Tiered Escalation: Level 1 would be routine tasks, like “summarize this email,” which will be routed to SLMs like Gemini 2.0 Flash or Llama 3.1 8B. For Level 2, your team can route standard RAG (Retrieval-Augmented Generation) or multi-step tasks to mid-tier models like Claude 3.5 Sonnet.

Level 3 reserves frontier models like GPT-5 or Claude Opus 4 for high-stakes reasoning, complex coding, or creative strategy.

- Fallback & Failover: The router should also act as a safety net. If a primary model times out or returns a low-confidence score, the router automatically fails over to a secondary provider to maintain 99.9% availability.

- Where possible, on-device or open-source models reduce recurring API fees, especially for high-volume, specialized tasks.

- Tracking cost-per-action at a granular level (like tagging requests by feature, user, or team) lets you measure ROI accurately and spot inefficiencies.

- Also, setting hard limits via API gateways, like per-user or daily caps, will prevent runaway costs.

- With a proper cost architecture, your AI system stays sustainable and predictable, allowing you to scale without shocking your finance team.

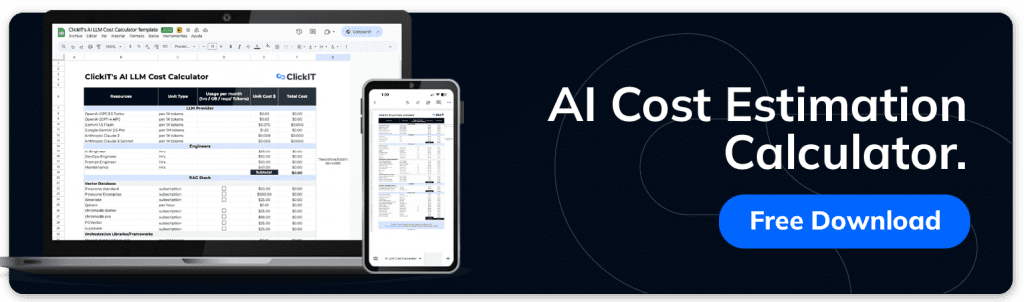

If you’re worried about costs, our AI Cost Estimation Calculator helps teams plan budgets and manage operational efficiency from day one. You can also get a quote for your next AI project immediately.

Failure #7: Teams Lack AI Systems Experience

Knowing machine learning is not the same as building AI products. I’ve seen projects fail when a single data scientist is expected to own everything. Sometimes there’s only one ML engineer who’s responsible for everything, from data pipelines to model deployment to API integration.

For clarity, ML engineers focus on model training and evaluation, AI architects design the end-to-end system to ensure reliability, scalability, and integration, and platform engineers manage infrastructure, deployment, monitoring, and MLOps pipelines. Without these roles clearly defined, projects collapse under operational complexity.

Lacking cross-functional teams with clear separation between platform, product, and modeling responsibilities is a common reason why AI projects fail. It creates bottlenecks, technical debt, and slows iteration.

But many teams will ask: Where will the money for different AI roles come from? This is where nearshore AI teams provide a practical advantage, especially for North American companies.

ClickIT as a Nearshore AI Company

Nearshore teams operate in similar time zones, enabling real-time collaboration and faster iteration with lower operational costs. This makes experimentation less risky.

With ClickIT, your company can hire a fully experienced, cross-functional AI team within 3 to 5 days to automate processes, integrate AI APIs, develop generative AI solutions, predictive analytics, chatbots, or complete POCs and MVPs. As an Advanced AWS-certified partner, ClickIT provides cloud consulting, DevOps, and full-stack AI development.

By combining cross-functional expertise, proper infrastructure, and nearshore agility, your AI projects are far more likely to deliver real value.

Learn more about our AI Development Services.

What Successful AI Teams Do Differently to Avoid Failure (Technical Playbook)

The teams I’ve seen build successful AI projects always start with system design, not model selection.

- From a technical standpoint, successful teams define constraints early. SLAs are explicitly written down early and enforced. Latency, availability, and accuracy targets are also written down, enforced, and tracked.

- Cost ceilings are non-negotiable, with budgets set per feature or per action. Importantly, failure modes are documented so everyone understands what happens when a model is wrong, slow, or unavailable.

- From day one, these teams build evaluation-first pipelines. Every dataset, model, and integration is automatically tested against realistic production scenarios before it ships. This includes accuracy metrics as well as behavioral testing for edge cases, bias evaluation, and adversarial robustness testing.

- Observability is built in from the start. Token usage, cost per request, accuracy, and output quality are continuously tracked. This can help you detect degradation early instead of finding out when a user complains or when your business revenue is affected.

At the end of the day, you cannot fix what you cannot measure.

Most importantly, successful teams treat AI as a living system, not a one-time build. Models are expected to drift. Prompts will also evolve, and data distributions change.

So, retraining, monitoring, and iteration should be built into the architecture from the get-go.

This is how you build a successful AI project.