How do you build a predictive AI model? First, define a clear business use case. Then gather diverse, relevant data (patient data, in the healthcare example used throughout this post) and clean it to ensure consistency. Develop and train the model using a suitable algorithm such as Random Forest, then validate it with metrics such as precision and recall.

Once the model is refined, deploy it into production systems to deliver actionable outputs to clinicians. Finally, monitor its performance over time and retrain it regularly to counter model drift and keep predictions accurate.

A predictive AI model is a machine learning-based system that analyzes historical data to predict future outcomes. Businesses across many industries use such models to anticipate needs and act on them proactively.

In this blog, I’ll walk you through the key steps of building a predictive AI model, from problem definition to deployment, with examples along the way.

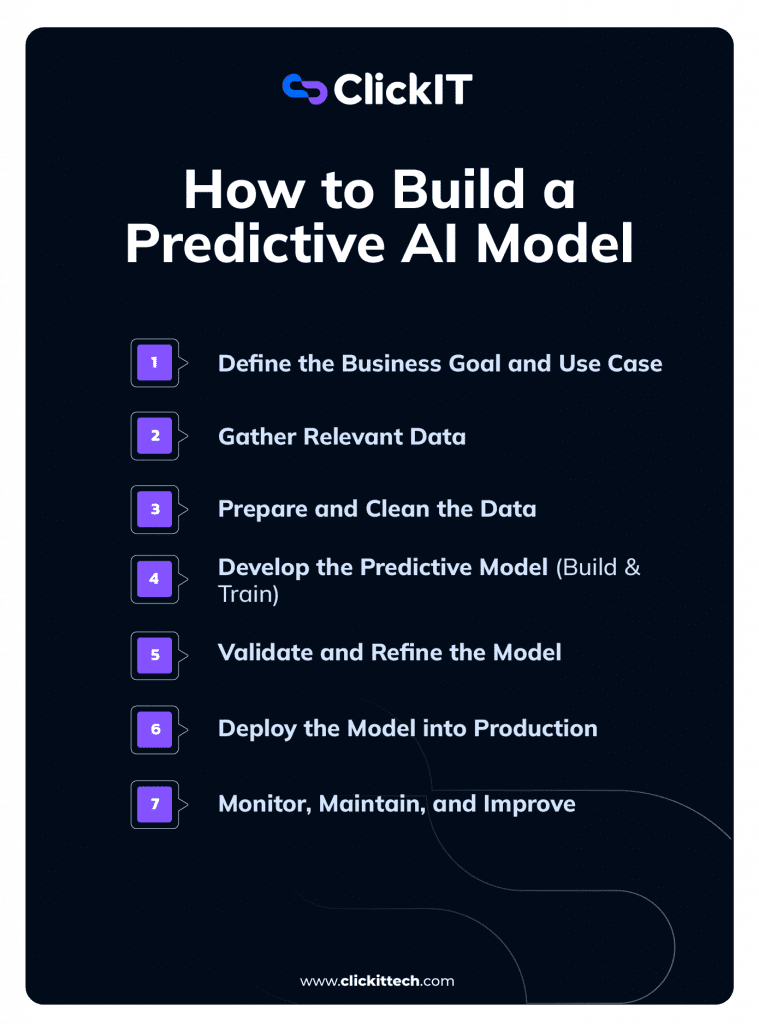

- 7 Steps on How to Build a Predictive AI Model

- Best Practices for Building a Predictive AI Model

- Frequently Asked Questions (FAQs)


7 Steps on How to Build a Predictive AI Model

- Define the Business Goal and Use Case

- Gather Relevant Data

- Prepare and Clean the Data

- Develop the Predictive Model (Build & Train)

- Validate and Refine the Model

- Deploy the Model into Production

- Monitor, Maintain, and Improve

Let me walk through each step using an example model for the healthcare sector:

1. Define the Business Goal and Use Case

Before building a predictive AI model, it is essential to identify and define the business goal and the specific use case. This ensures that the model solves a real problem and that its value can be measured.

For instance, a corporate hospital wants to proactively identify patients at high risk of developing diabetes within the next five years. This lets doctors intervene early with lifestyle recommendations and preventive care, reducing long-term healthcare costs and complications. The model uses patient data to predict diabetes onset so that the hospital can prioritize high-risk patients for preventive measures such as medical screening and lifestyle coaching.

Example of Defining a Goal and Use Case for a Diabetes Predictive AI Model

| Element | Definition |
|---|---|
| Key Objective | Predict diabetes onset within the next 5 years. |
| Binary Outcome | Yes / No |
| Target Users | Doctors, Hospital Administrators, and Care Coordinators |
| Success Metric | Reduce diabetes incidence by 20% among identified high-risk patients through early intervention. |
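To make the binary outcome concrete, here is a minimal pandas sketch that derives the 5-year label from an index visit date and an optional diagnosis date. The column names (`index_date`, `diagnosis_date`) and the 5 × 365-day window are assumptions for illustration. In a real cohort you would also exclude patients diagnosed before the index visit, or followed for less than five years without a diagnosis, rather than labeling them 0.

```python
import pandas as pd

# Prediction horizon from the use case: onset within 5 years of the index visit
# (approximated as 5 * 365 days for this sketch).
HORIZON = pd.Timedelta(days=5 * 365)


def label_diabetes_onset(df: pd.DataFrame) -> pd.Series:
    """Return 1 if diabetes was diagnosed within HORIZON after the index visit, else 0.

    Assumes hypothetical columns 'index_date' and 'diagnosis_date'
    (NaT when no diagnosis has been recorded). Cohort exclusions
    (prior diagnosis, short follow-up) are NOT handled here.
    """
    delta = df["diagnosis_date"] - df["index_date"]
    # Comparisons with NaT evaluate to False, so undiagnosed patients get 0.
    within = (delta >= pd.Timedelta(0)) & (delta <= HORIZON)
    return within.astype(int)


patients = pd.DataFrame({
    "index_date": pd.to_datetime(["2015-01-10", "2015-03-01", "2016-06-15"]),
    "diagnosis_date": pd.to_datetime(["2018-05-20", None, "2023-01-01"]),
})
patients["diabetes_5yr"] = label_diabetes_onset(patients)
print(patients)
```

The first patient is diagnosed about three years after the visit (label 1), the second has no diagnosis (label 0), and the third is diagnosed after more than six years (label 0).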

2. Gather Relevant Data

The quality and relevance of the data largely determine the quality of the predictions, so collect diverse, representative data to ensure the model captures meaningful patterns. Data can come from structured sources such as databases and APIs, and from unstructured sources such as text, images, and logs.

In our use case, the diabetes prediction model draws on data such as blood test results, patient demographics, lifestyle habits, and genetic factors. This information helps identify risk patterns from past cases. For example, the hospital collects 10 years of records for 50,000 patients, including both those who developed diabetes and those who didn’t, so that both outcomes are represented in the dataset.

Example of Diabetes Predictive AI Model Potential Data Sources

| Source | Data |
|---|---|
| Electronic Health Records (EHRs) | Age, gender, BMI, family history of diabetes, blood pressure, glucose levels, cholesterol levels |
| Lifestyle Data | Smoking status, diet habits, physical activity levels (sourced from surveys and wearables) |
| Lab Results | Fasting glucose tests, HbA1c levels |
| External Data | |
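Once the sources are identified, they need to be joined into one patient-level table. The sketch below assumes each source can be keyed by a shared `patient_id` (a hypothetical column) and uses small made-up extracts. Lab results can hold several readings per patient, so they are averaged first; left joins keep every EHR patient even when survey data is missing.

```python
import pandas as pd

# Hypothetical extracts from three sources, keyed by a shared patient_id.
ehr = pd.DataFrame({"patient_id": [1, 2, 3],
                    "age": [52, 38, 61],
                    "bmi": [31.2, 24.5, 28.9]})
lifestyle = pd.DataFrame({"patient_id": [1, 3],
                          "smoker": ["yes", "no"],
                          "activity_min_per_week": [60, 150]})
labs = pd.DataFrame({"patient_id": [1, 1, 2, 3],
                     "hba1c": [6.0, 6.5, 5.4, 5.9]})

# Labs can hold several results per patient; collapse them to one row each.
labs_per_patient = labs.groupby("patient_id", as_index=False)["hba1c"].mean()

# Left joins keep every EHR patient; missing survey data shows up as NaN and
# is handled in the cleaning step. validate= raises if a key is duplicated.
dataset = (
    ehr.merge(lifestyle, on="patient_id", how="left", validate="one_to_one")
       .merge(labs_per_patient, on="patient_id", how="left", validate="one_to_one")
)
print(dataset)
```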

3. Prepare and Clean the Data

Raw data is often incomplete or inconsistent, so it needs cleaning and preparation. This involves removing duplicates, handling missing values, normalizing numerical features, and encoding categorical variables, which improves data accuracy and reliability.

Some testing labs record blood glucose in mg/dL, while others use mmol/L. We should standardize these units to ensure consistency across data sources. Similarly, we should convert categorical data such as smoker/non-smoker into numerical values for model training.
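Both conversions can be sketched in a few lines of pandas. For glucose, 1 mmol/L is about 18 mg/dL (glucose has a molar mass of roughly 180 g/mol), so mmol/L readings are multiplied by 18. The column names `glucose`, `glucose_unit`, and `smoker` are hypothetical.

```python
import pandas as pd

# Approximate conversion factor for glucose: 1 mmol/L ≈ 18 mg/dL
# (molar mass of glucose is about 180 g/mol).
MMOL_TO_MGDL = 18.0


def standardize_labs(df: pd.DataFrame) -> pd.DataFrame:
    """Convert glucose readings to mg/dL and encode smoking status as 0/1.

    Assumes hypothetical columns 'glucose', 'glucose_unit'
    ('mg/dL' or 'mmol/L') and 'smoker' ('yes'/'no').
    """
    out = df.copy()
    is_mmol = out["glucose_unit"] == "mmol/L"
    out.loc[is_mmol, "glucose"] = out.loc[is_mmol, "glucose"] * MMOL_TO_MGDL
    out["glucose_unit"] = "mg/dL"
    # Map the categorical answer to a numeric feature for model training.
    out["smoker"] = out["smoker"].map({"no": 0, "yes": 1})
    return out


labs = pd.DataFrame({
    "glucose": [99.0, 5.5, 126.0],
    "glucose_unit": ["mg/dL", "mmol/L", "mg/dL"],
    "smoker": ["no", "yes", "no"],
})
clean = standardize_labs(labs)
print(clean)
```

The 5.5 mmol/L reading becomes 99 mg/dL, matching the first lab’s value in mg/dL.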

Example of Diabetes Predictive AI Model Data Preparation

| Task | Example |
|---|---|
| Handle Missing Data | Impute missing BMI or glucose values using averages or predictive imputation (e.g., based on age and gender). |
| Standardize Formats | Ensure units (e.g., mg/dL for glucose) and categorical variables (e.g., “smoker: yes/no”) are consistent. |
| Feature Engineering | Create new features such as ‘average glucose trend’ over time or ‘obesity flag’ (BMI > 30). |
| Remove Outliers | Exclude extreme values (e.g., biologically implausible glucose readings) that could distort results. |
| Label the Data | Define the outcome variable. For example, patients diagnosed with diabetes within five years (1) vs. those who weren’t (0). |
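Two of these steps can be sketched with pandas: imputing missing BMI from patients of the same gender and age band (a simple stand-in for the predictive imputation mentioned in the table), and dropping biologically implausible glucose readings. The 20–600 mg/dL plausibility bounds and the 10-year age bands are assumptions for illustration; real limits should be agreed with clinicians.

```python
import numpy as np
import pandas as pd

# Assumed plausibility bounds for glucose (mg/dL); agree real limits with clinicians.
GLUCOSE_MIN, GLUCOSE_MAX = 20, 600


def impute_and_filter(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Impute missing BMI with the median of patients of the same gender
    # and 10-year age band (a simple stand-in for predictive imputation).
    out["age_band"] = (out["age"] // 10) * 10
    group_median = out.groupby(["gender", "age_band"])["bmi"].transform("median")
    out["bmi"] = out["bmi"].fillna(group_median)
    # Drop biologically implausible glucose readings (rows with missing
    # glucose are dropped too, because between() returns False for NaN).
    return out[out["glucose"].between(GLUCOSE_MIN, GLUCOSE_MAX)]


raw = pd.DataFrame({
    "age": [42, 47, 45, 63, 68],
    "gender": ["F", "F", "F", "M", "M"],
    "bmi": [26.0, np.nan, 30.0, 29.0, 31.0],
    "glucose": [92.0, 105.0, 1500.0, 110.0, 98.0],
})
clean = impute_and_filter(raw)
print(clean)
```

The missing BMI for the 47-year-old woman is filled with 28.0 (the median of her group), and the 1500 mg/dL reading is removed.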

After cleaning the data, the dataset might be reduced to 45,000 usable patients with 20 relevant features.


4. Develop the Predictive Model (Build & Train)

In this step, we select an appropriate machine learning algorithm, train the model on historical data, and optimize its performance. The right algorithm depends on the problem type, such as classification, regression, or anomaly detection.

The model uses historical patient data to classify patients into low-risk and high-risk diabetes categories. I suggest the Random Forest algorithm here because it handles many health parameters effectively and tends to deliver high accuracy on medical prediction tasks. Support Vector Machines (SVMs) are another good option for diabetes prediction.

Example of Diabetes Predictive AI Model Development

| Step | Details |
|---|---|
| Algorithm Choice | Random Forest, Support Vector Machines (SVM), Gradient Boosting (e.g., XGBoost) |
| Split the Data | Divide the dataset into 70% training, 15% validation, and 15% testing sets. |
| Train the Model | Feed the training data into the algorithm so it learns from features such as BMI, glucose levels, and family history that correlate strongly with diabetes risk. |
| Hyperparameter Tuning | Adjust model settings (e.g., tree depth in a random forest) to maximize predictive power. |
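The 70/15/15 split in the table can be done with two calls to scikit-learn’s `train_test_split`: first hold out 30%, then split that portion in half. This sketch adds `stratify` (not mentioned in the table) so that each split keeps roughly the same share of diabetes cases, which matters when positives are rare; the features and labels are random placeholders.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 5))               # placeholder features
y = (rng.random(1000) < 0.15).astype(int)    # ~15% positive (diabetes) labels

# First carve off 30% for validation + test, then split that half-and-half.
# stratify keeps the share of diabetes cases roughly equal in every split.
X_train, X_temp, y_train, y_temp = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=42)
X_val, X_test, y_val, y_test = train_test_split(
    X_temp, y_temp, test_size=0.50, stratify=y_temp, random_state=42)

for name, labels in [("train", y_train), ("val", y_val), ("test", y_test)]:
    print(f"{name}: n={len(labels)}, positive rate={labels.mean():.3f}")
```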

Here is example code in Python with the scikit-learn library that walks through these steps for the diabetes model. It uses simulated data in place of real EHR records.

Before running the code, install the necessary libraries:

- pip install pandas numpy scikit-learn shap joblib

Here is the code:

```python
import joblib
import numpy as np
import pandas as pd
import shap
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.metrics import precision_score, recall_score, roc_auc_score
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.preprocessing import StandardScaler

# Step A: Define Business Goal and Use Case (in documentation above)


# Step B: Gather Relevant Data
# Simulated dataset (in practice, this would come from EHRs).
# Note: the label is drawn at random, independently of the features,
# so expect AUC-ROC close to 0.5 on this synthetic data.
def generate_sample_data(n_samples=45000):
    np.random.seed(42)
    data = {
        'age': np.random.normal(45, 10, n_samples),
        'bmi': np.random.normal(27, 5, n_samples),
        'hba1c': np.random.normal(5.7, 0.5, n_samples),
        'glucose': np.random.normal(95, 15, n_samples),
        'family_history': np.random.choice([0, 1], n_samples, p=[0.7, 0.3]),
        'smoking': np.random.choice([0, 1], n_samples, p=[0.8, 0.2]),
        'diabetes_5yr': np.random.choice([0, 1], n_samples, p=[0.85, 0.15]),
    }
    return pd.DataFrame(data)


# Step C: Prepare and Clean the Data
def prepare_data(df):
    df = df.copy()
    # Handle missing data with mean imputation
    # (in production, fit the imputer on the training split only)
    num_cols = ['bmi', 'hba1c', 'glucose']
    df[num_cols] = SimpleImputer(strategy='mean').fit_transform(df[num_cols])

    # Feature engineering
    df['obesity_flag'] = (df['bmi'] > 30).astype(int)
    df['high_glucose'] = (df['glucose'] > 100).astype(int)

    # Remove outliers (simple rule: within 3 standard deviations)
    for col in ['age', 'bmi', 'hba1c', 'glucose']:
        df = df[np.abs(df[col] - df[col].mean()) <= 3 * df[col].std()]
    return df


# Step D: Develop the Predictive Model
def build_model(X_train, y_train):
    # Scale features (not required for Random Forest, but reusable if you
    # swap in a scale-sensitive model such as an SVM)
    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)

    # Train Random Forest model
    model = RandomForestClassifier(
        n_estimators=100,         # Number of trees
        max_depth=10,             # Limit depth to prevent overfitting
        min_samples_split=5,
        class_weight='balanced',  # Up-weight the minority (diabetes) class to favor recall
        random_state=42,
        n_jobs=-1,                # Use all available cores
    )
    model.fit(X_train_scaled, y_train)
    return model, scaler


# Step E: Validate and Refine the Model
def evaluate_model(model, scaler, X_train, y_train, X_test, y_test):
    X_test_scaled = scaler.transform(X_test)
    y_pred_proba = model.predict_proba(X_test_scaled)[:, 1]
    y_pred = model.predict(X_test_scaled)

    auc = roc_auc_score(y_test, y_pred_proba)
    precision = precision_score(y_test, y_pred, zero_division=0)
    recall = recall_score(y_test, y_pred, zero_division=0)
    print(f"AUC-ROC: {auc:.3f}")
    print(f"Precision: {precision:.3f}")
    print(f"Recall: {recall:.3f}")

    # 5-fold cross-validation on the training data. cross_val_score clones
    # and refits the model on each fold, so it should not run on the test set.
    cv_scores = cross_val_score(model, scaler.transform(X_train), y_train,
                                cv=5, scoring='roc_auc')
    print(f"Cross-validation AUC-ROC: {cv_scores.mean():.3f} (±{cv_scores.std():.3f})")
    return auc


# Step F: Deploy the Model into Production (simulated)
def predict_risk(model, scaler, new_patient_data):
    new_data_scaled = scaler.transform(new_patient_data)
    risk_score = model.predict_proba(new_data_scaled)[:, 1] * 100  # Convert to 0-100 scale
    return risk_score


# Step G: Monitor, Maintain, and Improve (simulated monitoring)
def monitor_model_performance(model, scaler, X_monitor, y_monitor):
    X_monitor_scaled = scaler.transform(X_monitor)
    y_pred_proba = model.predict_proba(X_monitor_scaled)[:, 1]
    auc = roc_auc_score(y_monitor, y_pred_proba)
    print(f"Monitoring AUC-ROC: {auc:.3f}")
    return auc


# Best Practices Implementation
def explain_predictions(model, scaler, X_test, feature_names):
    # Model transparency using SHAP. Explain a random sample to keep it fast,
    # and reuse the training scaler so inputs match what the model saw.
    X_sample = X_test.sample(n=min(500, len(X_test)), random_state=42)
    explainer = shap.TreeExplainer(model)
    shap_values = explainer.shap_values(scaler.transform(X_sample))
    # Older SHAP versions return a list with one array per class; newer
    # versions return an array of shape (n_samples, n_features, n_classes).
    if isinstance(shap_values, list):
        shap_values = shap_values[1]        # Positive (diabetes) class
    elif shap_values.ndim == 3:
        shap_values = shap_values[:, :, 1]
    # Summary plot, colored by the original (unscaled) feature values
    shap.summary_plot(shap_values, X_sample, feature_names=feature_names)
    return shap_values


# Main execution
def main():
    # Generate and prepare data
    df = prepare_data(generate_sample_data())

    # Define features and target
    features = ['age', 'bmi', 'hba1c', 'glucose', 'family_history',
                'smoking', 'obesity_flag', 'high_glucose']
    X = df[features]
    y = df['diabetes_5yr']

    # Split data: 70% train, 15% validation, 15% test
    X_train, X_temp, y_train, y_temp = train_test_split(
        X, y, test_size=0.3, random_state=42)
    X_val, X_test, y_val, y_test = train_test_split(
        X_temp, y_temp, test_size=0.5, random_state=42)

    # Build and train model
    model, scaler = build_model(X_train, y_train)

    # Evaluate model
    evaluate_model(model, scaler, X_train, y_train, X_test, y_test)

    # Simulate deployment with a new patient
    new_patient = pd.DataFrame({
        'age': [45], 'bmi': [32], 'hba1c': [6.0], 'glucose': [105],
        'family_history': [1], 'smoking': [0], 'obesity_flag': [1], 'high_glucose': [1],
    })
    risk_score = predict_risk(model, scaler, new_patient)
    print(f"New patient risk score: {risk_score[0]:.1f}%")

    # Explain predictions (transparency)
    explain_predictions(model, scaler, X_test, features)

    # Save model for production (privacy consideration: ensure secure storage)
    joblib.dump(model, 'diabetes_risk_model_rf.pkl')
    joblib.dump(scaler, 'scaler_rf.pkl')

    # Simulate monitoring (the validation split stands in for new data)
    monitor_model_performance(model, scaler, X_val, y_val)


if __name__ == "__main__":
    main()
```

5. Validate and Refine the Model

Model evaluation checks whether the model performs well on unseen data. Common metrics for assessing performance include precision, recall, accuracy, and ROC-AUC. Based on the results, we can refine the model through hyperparameter tuning or additional feature engineering.

Mispredicting diabetes risk can have serious consequences, so optimize the model for high recall to catch as many potential diabetes cases as possible. At the same time, keep precision in check to avoid unnecessary alarm from false positives.

In the example code above, I trained a Random Forest classifier and evaluated it with AUC-ROC, precision, recall, and 5-fold cross-validation.
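One common way to favor recall is to move the decision threshold instead of using the default 0.5. This sketch is an assumption, not the method used elsewhere in this post: it uses scikit-learn’s `precision_recall_curve` on validation-set predictions to choose, among thresholds that reach a target recall, the one with the best precision. The toy labels, scores, and the 0.75 recall target are made up for illustration.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve


def threshold_for_recall(y_true, y_score, min_recall=0.90):
    """Return (threshold, precision, recall) with the best precision among
    thresholds whose recall is at least min_recall."""
    precision, recall, thresholds = precision_recall_curve(y_true, y_score)
    # The last (precision=1, recall=0) point has no threshold; drop it.
    precision, recall = precision[:-1], recall[:-1]
    meets_target = recall >= min_recall
    if not meets_target.any():
        raise ValueError("No threshold reaches the requested recall")
    best = int(np.argmax(np.where(meets_target, precision, -1.0)))
    return thresholds[best], precision[best], recall[best]


# Toy validation-set labels and predicted probabilities
y_val = np.array([0, 0, 1, 1, 0, 1, 0, 1])
scores = np.array([0.10, 0.40, 0.35, 0.80, 0.20, 0.70, 0.60, 0.90])
t, p, r = threshold_for_recall(y_val, scores, min_recall=0.75)
print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f}")
```

Patients whose predicted probability is at or above the chosen threshold are then flagged as high-risk. Tune the threshold on the validation set and report final metrics on the untouched test set.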

Example of Diabetes Predictive AI Model Validation

| Aspect | Details |
|---|---|
| Performance Evaluation | Precision and recall are key metrics: precision reflects false positives (unnecessary interventions), and recall reflects false negatives (missed cases). |
| Cross-Validation | Perform k-fold cross-validation (e.g., 5-fold) to confirm consistency across data subsets. |
| Refinement | If the model performs well on training data but poorly on validation data, address overfitting by reducing model complexity or adding regularization. |
| Hyperparameter Tuning | Adjust model settings (e.g., tree depth in a random forest) to maximize predictive power. |
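Cross-validation and hyperparameter tuning are often combined in a cross-validated grid search. This sketch uses scikit-learn’s `GridSearchCV` with stratified 5-fold splits on synthetic data from `make_classification`; the parameter grid and the AUC-ROC scoring choice are assumptions, not values from this post.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic stand-in for the prepared patient data (~15% positives).
X, y = make_classification(n_samples=600, n_features=8, n_informative=4,
                           weights=[0.85, 0.15], random_state=42)

param_grid = {
    "max_depth": [4, 8, None],      # tree depth, as in the table above
    "min_samples_leaf": [1, 5],
}
search = GridSearchCV(
    RandomForestClassifier(n_estimators=50, random_state=42),
    param_grid,
    scoring="roc_auc",
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=42),
)
search.fit(X, y)
print("Best parameters:", search.best_params_)
print(f"Best 5-fold AUC-ROC: {search.best_score_:.3f}")
```

If the recall-focused goal above matters more than overall ranking quality, `scoring="recall"` is a possible alternative.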

6. Deploy the Model into Production

Deployment options include on-premises servers, cloud-based APIs, or edge computing for real-time applications.

The diabetes prediction model is deployed within the hospital’s electronic health record (EHR) system, giving doctors instant risk assessments when patients visit, along with recommendations for further tests or preventive measures. For instance, a 45-year-old patient with a BMI of 32 and an HbA1c of 6.0% might receive a risk score of 75, prompting a referral to a diabetes prevention program.

Example of Diabetes Predictive AI Model Deployment

| Aspect | Details |
|---|---|
| Integration | Embed the model into the EHR system to flag high-risk patients during routine visits or annual checkups. |
| Output | Provide doctors with a risk score (e.g., 0–100) and a short explanation (e.g., “High risk due to elevated HbA1c and family history”). |
| Automation | Set up a pipeline that processes new patient data weekly and updates predictions. |
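The output row pairs a 0–100 score with a short explanation. Here is a minimal sketch of that formatting step, assuming per-patient feature contributions (for example, SHAP values) are already computed. The function name, the readable factor names, and the 70/40 cut-offs for the risk bands are hypothetical choices for illustration.

```python
def format_risk_output(probability: float,
                       contributions: dict[str, float],
                       top_k: int = 2) -> str:
    """Convert a predicted probability into a 0-100 score with a short reason.

    `contributions` maps a readable factor name to its per-patient
    contribution (for example, a SHAP value); positive values raise the risk.
    The 70/40 cut-offs for the risk bands are illustrative assumptions.
    """
    score = round(probability * 100)
    level = "High" if score >= 70 else "Moderate" if score >= 40 else "Low"
    # Keep only factors that push risk up, largest first.
    drivers = sorted((f for f, v in contributions.items() if v > 0),
                     key=contributions.get, reverse=True)[:top_k]
    reason = " and ".join(drivers) if drivers else "no single dominant factor"
    return f"Risk score {score}/100 ({level}). Main drivers: {reason}."


message = format_risk_output(
    0.75,
    {"elevated HbA1c": 0.21, "family history": 0.12, "BMI": 0.05, "age": -0.03},
)
print(message)
```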

7. Monitor, Maintain, and Improve

Keep in mind that AI models must be monitored continuously to stay accurate over time. As data patterns change, a model’s performance can degrade (model drift), so we should periodically retrain and update it.

As new patient records are added to the hospital database, the model should be retrained, for example every six months, to incorporate the latest trends and improve accuracy. We can also enhance the model by adding genetic data or real-time wearable inputs. If we notice a significant shift in patient demographics, we should integrate additional features into the model.

Example of Diabetes Predictive AI Model Monitoring

| Activity | Details |
|---|---|
| Performance Monitoring | Track how well the model’s predictions align with actual diabetes diagnoses over time, using a confusion matrix or drift detection. |
| Model Update | Retrain the model every six months with new patient data to account for changes in demographics or risk factors. |
| Feedback Loop | Incorporate doctor feedback (e.g., “This patient was flagged but didn’t develop diabetes”) to improve accuracy. |
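The table mentions drift detection without naming a method. One common choice, swapped in here as an assumption, is the Population Stability Index (PSI), which compares a feature’s distribution at training time with recent data. A frequently quoted rule of thumb (a heuristic, not a standard) treats PSI below 0.1 as stable and above 0.25 as a significant shift. The glucose samples below are simulated.

```python
import numpy as np


def population_stability_index(baseline, recent, bins=10):
    """Population Stability Index (PSI) of one feature: baseline vs. recent data."""
    baseline = np.asarray(baseline, dtype=float)
    recent = np.asarray(recent, dtype=float)
    # Quantile-based bin edges give each bin ~equal share of the baseline data.
    edges = np.quantile(baseline, np.linspace(0, 1, bins + 1))
    # Clip recent values into the baseline range so extremes fall in the end bins.
    recent = np.clip(recent, edges[0], edges[-1])
    base_frac = np.histogram(baseline, bins=edges)[0] / len(baseline)
    recent_frac = np.histogram(recent, bins=edges)[0] / len(recent)
    # Floor tiny fractions to avoid division by zero and log(0) for empty bins.
    base_frac = np.clip(base_frac, 1e-6, None)
    recent_frac = np.clip(recent_frac, 1e-6, None)
    return float(np.sum((recent_frac - base_frac) * np.log(recent_frac / base_frac)))


rng = np.random.default_rng(0)
baseline_glucose = rng.normal(95, 15, 10_000)   # glucose at training time
stable_glucose = rng.normal(95, 15, 10_000)     # same population
shifted_glucose = rng.normal(105, 15, 10_000)   # population has shifted upward
psi_stable = population_stability_index(baseline_glucose, stable_glucose)
psi_shifted = population_stability_index(baseline_glucose, shifted_glucose)
print(f"PSI stable: {psi_stable:.3f}, PSI shifted: {psi_shifted:.3f}")
```

Running this check per feature on each new batch of patient data gives an early warning that a retrain may be due, even before enough diagnoses arrive to measure accuracy directly.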

Best Practices for Building a Predictive AI Model

Following best practices helps ensure that our predictive AI model not only performs well technically but also drives meaningful business impact. Here are a few I recommend:

- Ensure Cross-Functional Collaboration

- Focus on Data Security and Privacy

- Model Transparency and Ethics

- Measure Business Impact, Not Just Accuracy

Ensure Cross-Functional Collaboration

The key to a predictive AI model’s success is aligning its capabilities with real-world needs. We should therefore involve stakeholders from both business and technical teams and build AI models collaboratively, because the project needs domain expertise as well as technical expertise.

For instance, data scientists may excel at model development, but domain experts provide critical insights into industry-specific challenges. Similarly, business teams ensure that the model’s output aligns with decision-making goals. When these teams build the AI model together, it is far more likely to succeed.

Focus on Data Security and Privacy

AI models often work with sensitive data. Healthcare and fintech models, for example, rely on sensitive patient or customer data, which is why robust security and privacy controls are essential. Depending on the industry, we must comply with regulations and standards such as HIPAA, PCI DSS, or GDPR. Failing to do so can lead to legal penalties, data breaches, and reputational damage.

I strongly recommend encrypting data at rest and in transit and applying differential privacy techniques to protect individual identities. I also recommend role-based access control to restrict access to sensitive data.
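Differential privacy can be applied to training itself or to published statistics. As a minimal sketch of the idea, assuming you want to share an aggregate count (for example, how many patients were flagged high-risk), the Laplace mechanism adds noise with scale sensitivity / ε. A count changes by at most 1 when one patient is added or removed, so its sensitivity is 1. The count and ε values here are hypothetical; training a model with differential privacy requires specialized tooling beyond this example.

```python
import numpy as np


def dp_count(true_count: int, epsilon: float, rng: np.random.Generator) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one patient changes
    the count by at most 1), so the noise scale is 1 / epsilon. Smaller epsilon
    means stronger privacy and noisier output.
    """
    sensitivity = 1.0
    return true_count + rng.laplace(loc=0.0, scale=sensitivity / epsilon)


rng = np.random.default_rng(7)
# Hypothetical figure: patients flagged high-risk in one clinic this quarter.
print(f"Noisy count: {dp_count(412, epsilon=0.5, rng=rng):.1f}")
```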

For example, a hospital implementing a diabetes prediction model should anonymize patient data and ensure that its data pipelines comply with HIPAA.
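A common first step is pseudonymization: dropping direct identifiers and replacing the patient ID with a keyed hash so that records can still be linked across pipelines. This is only a sketch with hypothetical column names. On its own it does not meet HIPAA de-identification, which requires either the Safe Harbor method (removing a defined list of identifiers) or an expert determination.

```python
import hashlib
import hmac

import pandas as pd

# Direct identifiers to drop before data leaves the clinical system
# (hypothetical column names for this sketch).
DIRECT_IDENTIFIERS = ["name", "phone", "address"]


def pseudonymize(df: pd.DataFrame, secret_key: bytes) -> pd.DataFrame:
    out = df.drop(columns=DIRECT_IDENTIFIERS)
    # HMAC-SHA256 gives each patient a stable token that cannot be linked back
    # to the original ID without the secret key.
    out["patient_id"] = [
        hmac.new(secret_key, str(pid).encode("utf-8"), hashlib.sha256).hexdigest()
        for pid in out["patient_id"]
    ]
    return out


records = pd.DataFrame({
    "patient_id": [1001, 1002],
    "name": ["A. Patient", "B. Patient"],
    "phone": ["555-0100", "555-0101"],
    "address": ["1 Main St", "2 Main St"],
    "hba1c": [6.1, 5.4],
})
# In practice, load the key from a secrets manager; never hard-code it.
key = b"example-key-for-illustration-only"
safe = pseudonymize(records, key)
print(safe)
```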

Model Transparency and Ethics

Transparent models build trust and help stakeholders understand how decisions are made. We should ensure that our model’s predictions are explainable, especially in high-stakes industries. Biased or unclear predictions can result in poor decision-making, regulatory issues, and reputational damage.

“A primary challenge is the need for clear model explainability. Investment managers must demonstrate a high degree of transparency when presenting insights to stakeholders and clients. Without explainability, AI-generated intelligence cannot be seamlessly absorbed into institutional decision-making.”, opines Giovanni Beliossi, Head of Investment Strategies at Axyon AI, in a blog post on Financial IT.

I recommend using explainable AI (XAI) techniques such as Local Interpretable Model-agnostic Explanations (LIME) or SHapley Additive exPlanations (SHAP) to provide insights into model decisions.

For our diabetes predictive AI model, the key risk factors behind each prediction, such as BMI or blood pressure, should be shown clearly so doctors can see why a patient was flagged as high-risk. Avoid bias by auditing the model for disparities, such as disproportionately flagging certain ethnic groups without clinical justification.
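A bias audit can start with a simple comparison of how often each group is flagged. This pandas sketch assumes a hypothetical `flagged` column (1 = flagged high-risk) and a group column; the toy data is made up. A ratio far from 1 is a prompt for clinical review rather than proof of bias, and a fuller audit would also compare flag rates with each group’s observed diabetes rate.

```python
import pandas as pd


def flag_rate_audit(df: pd.DataFrame, group_col: str,
                    flag_col: str = "flagged") -> pd.DataFrame:
    """Compare the share of patients flagged high-risk across groups."""
    rates = df.groupby(group_col)[flag_col].agg(flag_rate="mean", n="size")
    # Ratio of each group's flag rate to the overall flag rate.
    rates["ratio_to_overall"] = rates["flag_rate"] / df[flag_col].mean()
    return rates


predictions = pd.DataFrame({
    "ethnicity": ["A"] * 4 + ["B"] * 4,
    "flagged": [1, 0, 0, 0, 1, 1, 1, 0],
})
report = flag_rate_audit(predictions, "ethnicity")
print(report)
```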

Measure Business Impact, Not Just Accuracy

Technical metrics like precision, recall, and accuracy are important, but they don’t always reflect real-world value. I have seen high-accuracy models fail because they didn’t deliver measurable business outcomes. We should track metrics that align with our business objectives.

A healthcare predictive AI model should show improvements such as higher early-diagnosis rates and fewer hospital readmissions. So measure how many high-risk patients received early interventions that improved health outcomes and saved costs. For instance, reducing diabetes incidence by 20% might save $5 million annually in treatment costs.
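An estimate like this comes from simple arithmetic: cases avoided multiplied by the treatment cost per case. The inputs below are hypothetical, chosen only to show how a figure of that size could arise; they are not data from this post, so substitute your own baseline incidence and cost figures.

```python
def estimated_annual_savings(baseline_cases: float,
                             relative_reduction: float,
                             cost_per_case: float) -> float:
    """Savings = (baseline cases x relative reduction) x cost per case."""
    cases_avoided = baseline_cases * relative_reduction
    return cases_avoided * cost_per_case


# Hypothetical inputs: 1,000 expected new cases a year among flagged patients,
# a 20% reduction, and $25,000 in annual treatment cost per case.
savings = estimated_annual_savings(1_000, 0.20, 25_000)
print(f"Estimated annual savings: ${savings:,.0f}")
```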

Teladoc Health reports that its predictive AI model tripled engagement time among members with diabetes and reduced A1C values by 0.4 percentage points (from 8.2% to 7.8%).

If you are thinking about building a predictive AI model for your business, now is a good time to start. A successful model requires a structured approach: from defining a clear business goal to continuously monitoring and improving the model, we should ensure that it delivers accurate results and aligns with our business objectives.

Following best practices helps us maximize the value of AI-driven predictions, and ethical considerations, regulatory compliance, and real-world usability matter too. As AI continues to evolve, businesses that prioritize explainability, adaptability, and user trust will be best positioned to realize its full potential.

Frequently Asked Questions

How much data do I need to build a predictive AI model?

There is no fixed amount of data required. In general, the more diverse and representative the data you gather, the better, but quality matters as much as quantity: I have seen a small, high-quality dataset with well-engineered features outperform a large, messy one. If real data is limited, consider data augmentation, synthetic data generation, or transfer learning.

Do I need deep technical expertise to build a predictive AI model?

Not necessarily! Platforms such as Google AutoML, Azure Machine Learning, and H2O.ai let businesses build AI models without deep technical expertise. However, for large-scale models or advanced use cases, working with data scientists and domain experts leads to better accuracy and reliability.

How do I choose the right algorithm?

The right algorithm depends on the problem type, data size, and interpretability needs. For instance, Decision Trees and Logistic Regression work well when explainability matters, while Neural Networks suit complex patterns in large datasets.
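To illustrate why Logistic Regression is considered explainable: after standardizing the features, each coefficient converts to an odds ratio per one standard deviation of that feature. This scikit-learn sketch uses synthetic data from `make_classification`; the clinical feature names are placeholders attached to random columns, so the printed values carry no medical meaning.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Placeholder names for synthetic columns (not real clinical data).
feature_names = ["age", "bmi", "hba1c", "glucose"]
X, y = make_classification(n_samples=500, n_features=4, n_informative=3,
                           n_redundant=0, random_state=0)

model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X, y)

# Coefficients are log-odds per 1 standard deviation; exp() gives odds ratios.
coefs = model[-1].coef_[0]
for name, coef in sorted(zip(feature_names, coefs), key=lambda t: -abs(t[1])):
    print(f"{name:8s} odds ratio per 1 SD: {np.exp(coef):.2f}")
```

An odds ratio above 1 means higher values of that feature raise the predicted odds of the positive class, which is easy to communicate to non-technical stakeholders.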