In today’s rapidly evolving AI space, two of the most popular AI frameworks keep growing stronger and are often weighed against each other: LangChain 1.0 vs LangGraph 1.0.

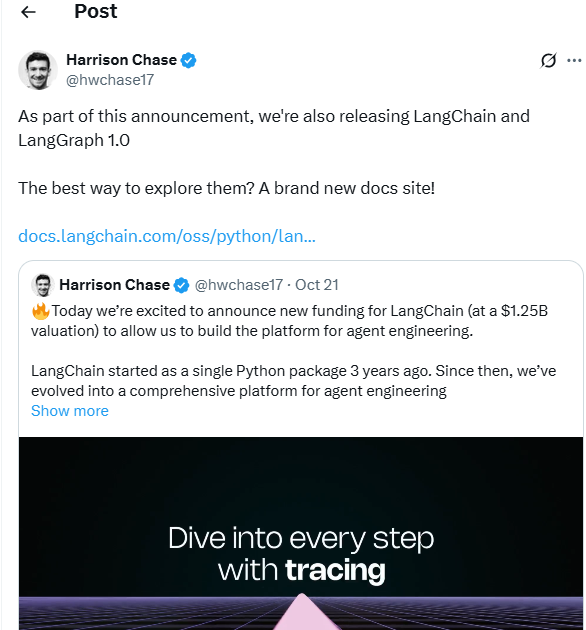

On 22nd October, 2025, LangChain announced on its blog that both the LangChain and LangGraph agent frameworks had reached their v1.0 milestones. The announcement has taken the LangChain vs LangGraph 1.0 debate to the next level.

According to Sequoia Capital, LangChain raised US$125 million in Series B funding and simultaneously announced v1.0 of LangChain, marking its transition from prototype to a production-class platform. The funding and business milestones imply that the ecosystem is scaling and gaining institutional support.

This blog post examines these new releases to unpack their features, highlighting key differences and providing practical guidance on when to choose one over the other.

- What is the Key Difference between LangChain 1.0 and LangGraph 1.0?

- LangChain vs LangGraph 1.0: What’s New in LangChain 1.0?

- What is New in LangGraph 1.0?

- Tool Comparison Table: LangChain 1.0 vs LangGraph 1.0

- Practical Use Cases to Use LangChain 1.0 vs LangGraph 1.0

- Use Cases Comparison Table: LangChain 1.0 vs. LangGraph 1.0

- Migrating from LangChain to LangGraph Tutorial

- FAQs

LangChain is a high-level framework for rapidly prototyping and deploying LLM-powered applications. It is designed with a focus on standardized abstractions for models, tools, and agents.

This approach allows developers to build complex LLM-powered apps without vendor lock-in. The new LangChain 1.0 release refines this vision in terms of modularity, performance, and production-level support.

LangGraph 1.0 is a low-level orchestration engine that is popular for durable, stateful agent workflows. The tool is designed to handle the complexities of production-grade, long-running agents efficiently.

It uses graph-based execution models instead of linear chains and provides native capabilities such as streaming outputs, human-in-the-loop interventions, and data persistence. It enables AI agents to loop, branch, revisit states, and make dynamic decisions. LangGraph is well suited to iterative reasoning, multi-agent systems, and long-running, stateful AI applications.

What is the Key Difference between LangChain 1.0 and LangGraph 1.0?

Both frameworks enable developers to quickly build production-ready LLM-based apps but differ in core capabilities, design, levels of abstraction, and use cases.

LangChain 1.0 suits quick, modular setups built around linear pipelines, such as basic chatbots or retrieval-augmented generation (RAG). LangGraph 1.0, on the other hand, suits non-linear, adaptive systems that require explicit state management and branching logic.

When it comes to LangChain 1.0 vs LangGraph 1.0 workflow design, LangChain builds on LCEL (LangChain Expression Language) for declarative chaining. LangGraph extends this with graph structures that support cycles, retries, and conditional edges.
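To make the chain-versus-graph distinction concrete, here is a minimal, library-free Python sketch. It is not LCEL or LangGraph code: chain, run_graph, the node functions and the score threshold are all hypothetical. The point is structural. A chain runs each step once, in order. A graph consults a router after every node, and that is what makes loops and conditional edges possible.

```python
from typing import Callable

State = dict
Node = Callable[[State], State]
Router = Callable[[State], str]


def chain(*steps: Node) -> Node:
    """Linear composition: every step runs exactly once, in order."""
    def run(state: State) -> State:
        for step in steps:
            state = {**state, **step(state)}  # merge each step's partial update
        return state
    return run


def run_graph(nodes: dict[str, Node], routes: dict[str, Router], entry: str,
              state: State, max_steps: int = 20) -> State:
    """Graph execution: after each node a router picks the next node or "END",
    so the flow can branch and loop back to an earlier node."""
    current = entry
    for _ in range(max_steps):
        state = {**state, **nodes[current](state)}
        current = routes[current](state)
        if current == "END":
            return state
    raise RuntimeError("step limit reached")  # guard against endless cycles


def draft(state: State) -> State:
    return {"text": state["topic"] + " draft", "score": 50}


def polish(state: State) -> State:
    return {"text": state["text"].upper()}


def revise(state: State) -> State:
    # Hypothetical quality check: each revision adds 20 points
    return {"text": state["text"] + "+", "score": state["score"] + 20}


linear = chain(draft, polish)({"topic": "intro"})
print(linear["text"])  # INTRO DRAFT

looped = run_graph(
    nodes={"draft": draft, "revise": revise},
    routes={"draft": lambda s: "revise",
            "revise": lambda s: "END" if s["score"] >= 80 else "revise"},
    entry="draft",
    state={"topic": "intro"},
)
print(looped["text"], looped["score"])  # intro draft++ 90
```

In LangGraph itself, the routers correspond roughly to conditional edges, and the step limit corresponds to its recursion limit.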

Together, they make a great combination. LangChain provides high-level ergonomics, while LangGraph delivers low-level control, with LangChain’s new agents now running on LangGraph’s runtime in the background.

LangChain vs LangGraph 1.0: What’s New in LangChain 1.0?

LangChain 1.0 is a high-level agent framework that abstracts common patterns such as LLM calls, tool integration, memory, and control flow into reusable constructs.

It simplifies the development of agents and workflows by providing a create_agent() abstraction, middleware support, and standardized content blocks for interoperable outputs. It’s ideal when you want to build and ship agents quickly with minimal boilerplate.

Released in October 2025, the LangChain 1.0 version incorporates community feedback and introduces a refined, agent-centric architecture built on the LangGraph runtime. Focused on production readiness, it delivers simplicity, flexibility, and long-term stability with no planned breaking changes until 2.0.

a) New Features

- A major shift to central agent abstraction: LangChain 1.0 makes a major shift towards a unified agent object or pattern, built on top of LangGraph 1.0’s runtime.

Here is an example of invoking a weather agent using Python:

```python
from langchain.agents import create_agent
from langchain.agents.structured_output import ToolStrategy
from pydantic import BaseModel


class Weather(BaseModel):
    temperature: float
    condition: str


def weather_tool(city: str) -> str:
    """Get the weather for a city."""
    return f"It's sunny and 70 degrees in {city}"


agent = create_agent(
    "openai:gpt-4o-mini",
    tools=[weather_tool],
    response_format=ToolStrategy(Weather),
)

result = agent.invoke({
    "messages": [{"role": "user", "content": "What's the weather in SF?"}]
})

print(repr(result["structured_response"]))
# Output: Weather(temperature=70.0, condition='sunny')
```

With structured outputs via Pydantic models, this ensures type-safe responses across providers.

- Standard content blocks for messages: LangChain 1.0 introduces standard content blocks for messages that allow structured data like reasoning traces, citations, tool calls, and multi-modal content like images, audio, and PDFs across LLM providers.

E.g., plain text: message.content_blocks[0]["text"]. Citations are attached to text blocks as annotations of type "citation".

Previously, these features were fragmented across providers (for example, OpenAI’s JSON mode versus Anthropic’s XML tags). The new release offers a unified, provider-agnostic interface, enabling seamless model swapping without code rewrites.

- Simplified APIs on the JavaScript side: LangChain 1.0 simplifies API management. For instance, older patterns like createReactAgent are replaced with a createAgent API that streamlines agent creation.

- Streamlined package structure: The core LangChain package is trimmed for essential agent abstractions. The legacy functionality in JavaScript is moved to an “@langchain/classic” package.

- Improved integrations and backward-compatibility efforts: LangChain 1.0 features enhanced integrations and improved support for backward compatibility. For instance, it supports major providers via a standardized message format, and content blocks also work with legacy message types.

- Middleware and Standard Content Blocks: One of the most impactful additions in LangChain 1.0 is its middleware system, which lets developers inject behaviors such as summarization, human-in-the-loop approval, or PII redaction at defined points in the agent loop. Standard content blocks ensure consistent, structured outputs across models and integrations, enabling seamless model swaps without breaking workflows.
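The idea of injecting behavior at defined points in the agent loop can be sketched without the library. The toy below wraps a fake model call with before-model and after-model hooks. One of those hooks redacts e-mail addresses as a stand-in for PII redaction. The class names, the hook-ordering rule and echo_model are hypothetical. LangChain 1.0’s real middleware classes expose their own hook methods, so consult its middleware reference for the actual interface.

```python
import re
from typing import Callable, Protocol

Message = dict[str, str]
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


class Middleware(Protocol):
    def before_model(self, messages: list[Message]) -> list[Message]: ...
    def after_model(self, reply: Message) -> Message: ...


class RedactEmails:
    """Hypothetical PII middleware: scrubs e-mail addresses before the model call."""
    def before_model(self, messages: list[Message]) -> list[Message]:
        return [{**m, "content": EMAIL.sub("[REDACTED]", m["content"])} for m in messages]

    def after_model(self, reply: Message) -> Message:
        return reply


class TagReply:
    """Hypothetical post-processing middleware: marks replies as checked."""
    def before_model(self, messages: list[Message]) -> list[Message]:
        return messages

    def after_model(self, reply: Message) -> Message:
        return {**reply, "content": "[checked] " + reply["content"]}


def run_model_step(model: Callable[[list[Message]], Message],
                   middleware: list[Middleware], messages: list[Message]) -> Message:
    for mw in middleware:            # before-hooks run in list order
        messages = mw.before_model(messages)
    reply = model(messages)
    for mw in reversed(middleware):  # after-hooks unwind in reverse order
        reply = mw.after_model(reply)
    return reply


def echo_model(messages: list[Message]) -> Message:
    """Stand-in for an LLM: echoes the last message back."""
    return {"role": "assistant", "content": "You said: " + messages[-1]["content"]}


reply = run_model_step(echo_model, [RedactEmails(), TagReply()],
                       [{"role": "user", "content": "Email me at jane@example.com"}])
print(reply["content"])  # [checked] You said: Email me at [REDACTED]
```

The model never sees the raw address. The agent loop itself does not change, and only the list of middleware does.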

b) Design and Development Approach

The design and development approach is a key aspect of the LangChain vs LangGraph 1.0 releases. Based on community feedback, LangChain 1.0 shifted from “many chain or agent patterns” to fewer, more opinionated abstractions. This approach steers developers toward production readiness rather than prototype mode.

- The team identified pain points, such as heavy abstractions and an overly broad API surface, and streamlined the framework by stripping them away. The result is a tighter namespace focused on core agent components rather than exhaustive primitives.

- Older utilities have moved to the langchain-classic package. The new release focuses on tool-loop execution, model neutrality, and extensible middleware.

- When it comes to development, the emphasis is on enterprise use. The new release focuses on stability, standardisation across LLM providers, scalable agents, and multi-modal workflows.

- Under the versioning and release policy, 1.0 itself introduced breaking changes and architectural improvements relative to 0.x, with migration guides provided. It also commits to no breaking changes until 2.0.

- LangChain 1.0 leverages LangGraph’s runtime to offer branching, memory-enabled, durable agent workflows with over 100 plug-and-play integrations through standardized abstractions.

Rooted in LCEL’s declarative principles, the new version enhances production readiness with middleware, content blocks, built-in security, observability via OpenTelemetry, and real-world feedback from adopters like Uber and Cisco. It positions LangChain as focused yet versatile for agent development.

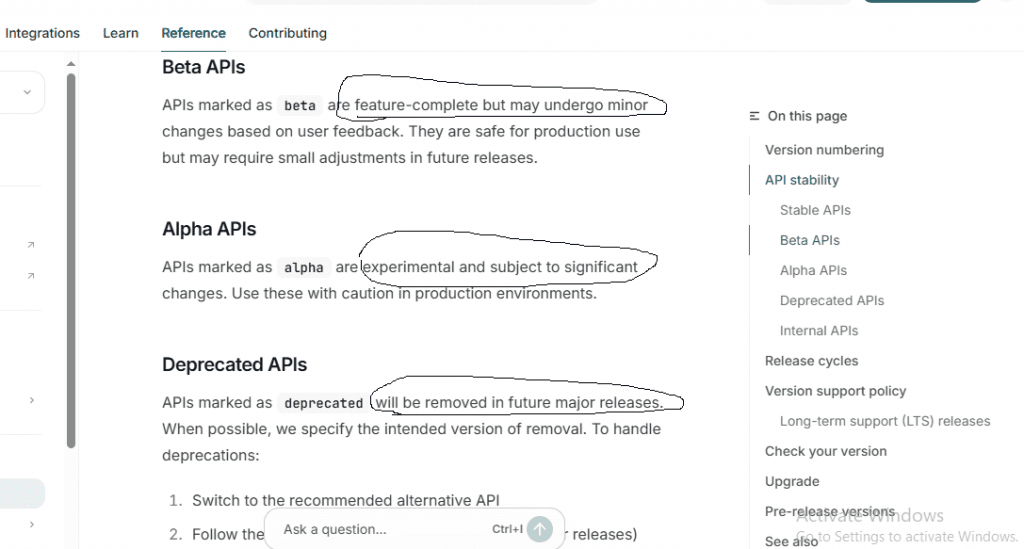

c) New Documentation

A major update of LangChain 1.0 is its redesigned documentation hub that tries to resolve years of feedback about scattered guides. For the first time, it brings Python and JavaScript content under one unified experience.

Released in tandem with the update, the new site offers clearer navigation, side-by-side code samples, and comprehensive tutorials for architectures like ReAct and multi-tool agents. There is a dedicated migration guide for v1 to help users move from earlier versions to the new abstractions.

Key improvements include:

- Conceptual Guides offering clear explanations of agents, chains, and middleware, supported by decision trees to help developers choose the right pattern.

- Hands-on Tutorials, such as building a RAG agent using create_agent and implementing custom middleware step-by-step.

- API Reference pages that are unified, fully searchable, and aligned across TypeScript and Python.

- Migration Guidance that walks users through transitioning from pre-1.0 versions, including how to replace deprecated components via langchain-classic.

Community contributions are encouraged via GitHub, ensuring ongoing relevance.

d) Learning Curve

APIs are now more streamlined, with standardized agent abstraction and a simplified createAgent API in JavaScript, among other enhancements. As such, newcomers can benefit from more consistent workflows.

On the downside, if someone is upgrading from older v0.x versions, there may be some breaking changes or different abstractions to learn. Especially when moving to the new agent model, message content blocks, or migrating from older chains/agent patterns, there is a bit of a learning curve.

Moreover, at the time of writing, the v1 docs for Python are marked “Alpha – content incomplete and subject to change”, which may confuse early adopters.

e) People’s Opinion

The LangChain 1.0 update has earned accolades from developers praising its maturity after years of rapid iteration. Most of the comments on the LangChain forum are positive.

Here’s how the LangChain team and the wider community responded:

- Harrison Chase (@hwchase17) from LangChain highlighted the “brand new docs” as a game-changer, echoing community calls for better resources on X.

- Brace Sproul (@BraceSproul), who leads Applied AI at LangChain, responded to Harrison’s post on X, calling the docs incredible and noting that they address a top pain point that has existed since LangChain’s inception.

- Julia Schottenstein (@j_schottenstein) emphasized the ground-up rebuild for flexibility, admitting it was a tough call but essential for power users.

- Harry Zhang (@zhanghaili0610) shared hands-on alpha experience: “game-changing dev UX” with middleware shining for structured outputs.

- An X user, Victor (@victor_explore), lauded its role in shaping AI agent engineering, linking to breakdowns of middleware and workflows.

- Another X user (@kuwa_tw) shared: “Trying LangChain & LangGraph 1.0—fun!” capturing the buzz.

- On Reddit, users hailed the release as a mature leap forward for prompt chaining in complex workflows, singling out the docs and agents as the standouts.

- User Tik1993 reacted on the LangChain Community Forum: “I’m excited for v1 and to see how it applies to my application!”

There were a few negative comments as well:

- User @un1tz3r0 described the abstractions as “questionably necessary complexity”, suggesting over-engineering.

- Chinese developer @AIBTCAI noted a steep learning curve for beginners and likened the upgrade to the Python 2-to-3 shift, while praising its stability.

What is New in LangGraph 1.0?

LangGraph 1.0 is a graph-based agent runtime that provides durable execution, state management, branching, event logs, and fine-grained control for complex workflows.

It’s designed to orchestrate long-running agentic systems, with persistent state across steps and custom control logic in production environments. Many LangChain abstractions are now built on top of LangGraph, enabling interoperability.

At its core, LangGraph exposes a graph-based runtime abstraction for custom control flow, branching logic, retries, and multi-agent coordination. LangGraph 1.0 offers granular control over execution graphs by blending deterministic logic with agentic steps for optimized latency, cost, and oversight.

a) New Features

- LangGraph 1.0 extends its graph-driven architecture, modeling workflows through nodes (actions), edges (transitions), and shared state. This approach provides enterprise-level reliability and resilience while keeping implementation simple and intuitive.

- A key addition is durable state management. It automatically checkpoints each execution step to configurable backends such as in-memory stores, SQLite, or PostgreSQL, so workflows can resume seamlessly after crashes, restarts, or disconnections. This makes it well suited to extended, asynchronous processes like multi-day approvals.
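What a checkpointer buys you can be shown with Python’s built-in sqlite3 module alone. This is a toy model: ToyCheckpointer, run and crash_at are hypothetical names, and LangGraph’s real checkpoint format is richer. In it, the full state is saved after each step under a thread ID. A run that crashes midway therefore picks up after its last completed step instead of starting over.

```python
import json
import sqlite3
from typing import Callable, Optional

Step = Callable[[dict], dict]


class ToyCheckpointer:
    """Stores the full state after every step, keyed by (thread_id, step)."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints "
            "(thread_id TEXT, step INTEGER, state TEXT, PRIMARY KEY (thread_id, step))"
        )

    def save(self, thread_id: str, step: int, state: dict) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
            (thread_id, step, json.dumps(state)),
        )
        self.conn.commit()

    def latest(self, thread_id: str) -> tuple[int, Optional[dict]]:
        row = self.conn.execute(
            "SELECT step, state FROM checkpoints WHERE thread_id = ? "
            "ORDER BY step DESC LIMIT 1",
            (thread_id,),
        ).fetchone()
        return (row[0], json.loads(row[1])) if row else (-1, None)


def run(steps: list[Step], state: dict, thread_id: str,
        saver: ToyCheckpointer, crash_at: Optional[int] = None) -> dict:
    """Run steps in order, checkpointing after each one.

    If the thread already has a checkpoint, continue after the last saved step."""
    last_step, saved = saver.latest(thread_id)
    if saved is not None:
        state = saved
    for i in range(last_step + 1, len(steps)):
        if i == crash_at:
            raise RuntimeError(f"simulated crash before step {i}")
        state = {**state, **steps[i](state)}
        saver.save(thread_id, i, state)
    return state


steps: list[Step] = [
    lambda s: {"log": s["log"] + ["fetch"]},
    lambda s: {"log": s["log"] + ["review"]},
    lambda s: {"log": s["log"] + ["approve"]},
]

saver = ToyCheckpointer(sqlite3.connect(":memory:"))
try:
    run(steps, {"log": []}, "claim-1", saver, crash_at=2)
except RuntimeError as err:
    print(err)  # simulated crash before step 2

final = run(steps, {"log": []}, "claim-1", saver)
print(final["log"])  # ['fetch', 'review', 'approve']
```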

Here is an example of a simple persistent graph:

```python
import operator
from typing import Annotated, TypedDict

from langgraph.graph import StateGraph, END
from langgraph.checkpoint.sqlite import SqliteSaver  # pip install langgraph-checkpoint-sqlite


class State(TypedDict):
    messages: Annotated[list, operator.add]  # reducer: updates are appended
    count: int                               # no reducer: updates overwrite


def increment(state: State) -> dict:
    # Nodes return only the keys they update
    return {"count": state["count"] + 1}


workflow = StateGraph(State)
workflow.add_node("increment", increment)
workflow.set_entry_point("increment")
workflow.add_edge("increment", END)

# from_conn_string() is a context manager in current releases
with SqliteSaver.from_conn_string(":memory:") as memory:
    app = workflow.compile(checkpointer=memory)
    config = {"configurable": {"thread_id": "abc123"}}

    result1 = app.invoke({"count": 0}, config)
    # Same thread_id: count (now 1) is loaded from the checkpoint, so the
    # second call passes no count and the node increments the saved value
    result2 = app.invoke({"messages": []}, config)

print(result2["count"])  # Outputs: 2
```

- LangGraph also provides comprehensive memory handling for both short-term working memory and persistent long-term state, making it well-suited for stateful, multi-session agents.

- LangGraph integrates seamlessly with LangChain 1.0, balancing high-level ergonomics with low-level control. LangChain’s new agent system now runs directly on the LangGraph runtime, so the question is less LangChain 1.0 vs LangGraph 1.0 and more LangChain on top of LangGraph.

- Durable Runtime & Agent Orchestration: LangGraph 1.0 focuses on durable agent runtime: persistent memory, robust branching, retries, checkpoints, and reliable state across task boundaries. This makes it a natural foundation for production-ready agents, particularly when workflows require complex decision logic, looping, or long-running processes.

b) Design and Development

LangGraph 1.0’s design was driven by feedback that existing agent frameworks were easy to start with but hard to scale or customize. Because LangChain 1.0’s agents run on the LangGraph runtime, you can start at a high level and drill down for customization without rewriting your code.

The team prioritized production-readiness over just ease of use. The runtime prioritizes controllability, allowing fine-tuning of parallelism, caching, and error boundaries for cost and latency optimization in production.

The runtime design separates the developer SDK/API from the execution engine. This allows the dev interface and runtime to evolve independently. Dropping Python 3.9 support (EOL) and adding 3.14 compatibility reflects a forward-looking stance, with async-first APIs for scalability.

The new release lets you mix deterministic steps, such as API calls, with probabilistic steps, such as LLM decisions, and use edges to enforce determinism where needed. For instance, a graph can retry on tool failures or branch on confidence scores.
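Here is a library-free sketch of that mix. It combines a deterministic retry wrapper around a flaky tool with a deterministic routing rule applied to a probabilistic confidence score. flaky_tool, the 0.75 threshold and the route names are hypothetical. In LangGraph, the equivalents would be a per-node retry policy and a conditional edge, and their exact options are documented in its API reference.

```python
from typing import Callable


def with_retries(fn: Callable[[], str], max_attempts: int = 3) -> str:
    """Deterministic wrapper: re-run a flaky step up to max_attempts times."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
    raise AssertionError("unreachable")


def route_on_confidence(confidence: float, threshold: float = 0.75) -> str:
    """Deterministic edge applied to a probabilistic signal (e.g., a model's confidence)."""
    return "respond" if confidence >= threshold else "human_review"


calls = {"n": 0}


def flaky_tool() -> str:
    """Hypothetical tool that times out twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("timeout")
    return "order shipped"


outcome = with_retries(flaky_tool)
print(outcome, calls["n"])                                 # order shipped 3
print(route_on_confidence(0.9), route_on_confidence(0.4))  # respond human_review
```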

This approach supports observability requirements. Every node can be traced in LangSmith, which makes debugging at scale much easier.

Security features, such as configurable auth for HITL, and extensibility via custom reducers make LangGraph a good choice for agent fleets, not just for a single bot.
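Reducers decide how a node’s partial update merges into shared state. This is what Annotated[list, operator.add] does in the State classes in this article. The following plain-Python sketch models those semantics: keys with a reducer are combined, and all others are overwritten. It also adds a hypothetical custom reducer, keep_max. It illustrates the behavior and is not LangGraph’s internal merge code.

```python
import operator
from typing import Any, Callable

Reducer = Callable[[Any, Any], Any]


def apply_update(state: dict, update: dict, reducers: dict[str, Reducer]) -> dict:
    """Merge a node's partial update into the shared state.

    Keys that have a reducer are combined with their current value;
    every other key is simply overwritten (last write wins)."""
    new_state = dict(state)
    for key, value in update.items():
        if key in reducers and key in new_state:
            new_state[key] = reducers[key](new_state[key], value)
        else:
            new_state[key] = value
    return new_state


def keep_max(old: int, new: int) -> int:
    """Hypothetical custom reducer: keep the highest risk score seen so far."""
    return max(old, new)


reducers = {"messages": operator.add, "peak_risk": keep_max}
state = {"messages": ["hi"], "peak_risk": 2, "status": "open"}
state = apply_update(state, {"messages": ["hello!"], "peak_risk": 1, "status": "review"}, reducers)
print(state)  # {'messages': ['hi', 'hello!'], 'peak_risk': 2, 'status': 'review'}
```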

c) New Documentation

The 1.0 documentation at docs.langchain.com consolidates Python and JavaScript resources for LangGraph and LangChain alike. Resolving prior concerns of content fragmentation, the new documentation offers side-by-side code samples, searchable API refs, and conceptual primers on graph theory in agents.

The new documentation improves developer experience with:

- Interactive Tutorials: Practical, notebook-based walkthroughs covering everything from simple state graphs to advanced, human-in-the-loop (HITL) multi-agent workflows.

- Conceptual Guides: Clear comparison frameworks such as “Graphs vs. Chains” and detailed migration steps for users upgrading from pre-1.0 versions.

- Comprehensive API References: Automatically generated docs enriched with type annotations, sample snippets, and clear deprecation notes.

- Community Engagement Hub: Streamlined GitHub issue templates tagged for v1 feedback and direct links to discussion forums for peer collaboration.

The docs note that LangGraph is focused on low-level orchestration. If you are just beginning with agents, you might be better off using LangChain’s higher-level APIs.

d) Learning Curve

LangGraph is low-level and extensible, which means there is a steep learning curve. It offers greater control but also requires more understanding as you need to design graphs, nodes, edges, and state flows manually rather than rely on ready-made agent templates.

For teams familiar with simpler agent frameworks, this means extra effort in understanding graph-based orchestration, state management, and deployment patterns. However, this extra learning effort in understanding complex workflows, loops, human oversight, and long-running agents will pay off significantly.

e) People’s Opinion

Many users are excited about the LangGraph 1.0 release. Production readiness, improved documentation, and refined architecture impressed users most. For developers in particular, stability in production is a game-changer.

On the downside, some users still want clarity on migration paths, legacy support, learning curves, and how the new abstractions will behave in real-world systems, especially for complex workflows.

- AI developer Sean_Dev posted on X, praising the new release as massive for production AI:

“LangGraph 1.0 stable is massive for production AI, the shift from ‘magic orchestration’ to ‘explicit state graphs’ is finally here, no more black box agent loops breaking mysteriously, if you can’t draw your agent’s state transitions, you can’t debug it.”

- Matt Dancho (@mdancho84) slotted it into his $12/year AI stack, emphasizing accessibility for $200K careers

- Another user, @HunterRockCat, hoped for fewer abrupt breaking changes and referenced past AutoGen woes.

- Rippling’s Ankur Bhatt lauded the durable runtime and middleware flexibility in production.

Tool Comparison Table: LangChain 1.0 vs LangGraph 1.0

| Criteria | LangChain 1.0 | LangGraph 1.0 |
|---|---|---|
| Primary Focus | High-level agent framework for rapid prototyping and production-ready LLM apps | Low-level orchestration engine for durable, stateful, and complex agent workflows |
| Architecture | Declarative chaining via LCEL (LangChain Expression Language) | Graph-based runtime built on nodes, edges, and persistent state |
| Execution Control | Abstracted control, ideal for simplicity | Fine-grained control for custom branching, retries, and checkpoints |
| Durability | Ephemeral (short-lived) sessions by default; persistence comes from the underlying LangGraph runtime | Persistent state and resumable execution across sessions |
| Integration Level | Plug-and-play integrations (100+ models and APIs) | Runtime underneath LangChain 1.0 agents; integrates deeply with LangChain |
| Learning Curve | Lower; suitable for beginners and rapid iteration | Moderate to high; requires understanding of state graphs and runtime logic |
| Human-in-the-Loop (HITL) | Supported via higher-level abstractions such as middleware | Native support for pausing/resuming with manual validation |
| Best For | Developers building quick prototypes, chatbots, RAG pipelines, or tool-augmented agents | Teams deploying long-running, multi-agent, or human-in-the-loop systems |
| When to Choose | You want to build fast and iterate often | You need resilient, production-grade agent orchestration |

Practical Use Cases to Use LangChain 1.0 vs LangGraph 1.0

As LangChain 1.0 and LangGraph 1.0 mature into stable and production-ready frameworks, the next question is when to use LangChain 1.0 vs LangGraph 1.0.

LangChain 1.0 excels in high-level, declarative abstractions for quick iteration on standard LLM patterns. On the other hand, LangGraph 1.0 provides low-level graph orchestration for workflows that demand explicit control, persistence, and branching.

The real-world applications of both tools differ in how much control a developer needs over an agent’s workflow.

When to Use LangChain 1.0?

LangChain 1.0 is best suited for teams that need rapid agent development with minimal configuration. It provides high-level abstractions and ready-to-use components that simplify common tasks.

A few examples:

- Conversational Agents: Chatbots that integrate LLMs like GPT or Claude with external tools for retrieval, summarization, or question answering.

- Retrieval-Augmented Generation (RAG) Pipelines: Easily combine document loaders, vector stores, and retrievers, either with the create_retrieval_chain helper (which moved to langchain-classic in 1.0) or with an agent that calls a retriever tool.

- Customer Support Automation: Build multi-turn dialogue systems that can reason over structured and unstructured data sources.

- Prototyping and Demos: Quickly experiment with new ideas using LCEL (LangChain Expression Language) for declarative chaining.

LangChain 1.0 abstracts most of the runtime mechanics, which means you can focus on logic, prompts, and user experience rather than orchestration details.

Use Case Example: LangChain 1.0 for Customer Support Automation

This example shows how LangChain 1.0 streamlines support bots that query knowledge bases and tools without custom state management. The agent checks order status through a tool, searches the knowledge base, and responds with structured advice.

It takes only around 20 lines of code. It uses create_agent plus summarization middleware to keep long conversation histories manageable.

```python
from langchain.agents import create_agent
from langchain.agents.middleware import SummarizationMiddleware
from langchain.agents.structured_output import ToolStrategy
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from pydantic import BaseModel


class SupportResponse(BaseModel):
    resolution: str
    next_steps: list[str]


@tool
def check_order(order_id: str) -> str:
    """Check order status."""
    return f"Order {order_id} shipped on Nov 5."


@tool
def search_kb(query: str) -> str:
    """Search knowledge base."""
    return "For shipping delays, contact the support team."


llm = ChatOpenAI(model="gpt-4o-mini")

# Middleware is passed to create_agent (agents have no add_middleware method).
# SummarizationMiddleware condenses long histories; its threshold options are
# documented in the middleware reference.
agent = create_agent(
    llm,
    tools=[check_order, search_kb],
    response_format=ToolStrategy(SupportResponse),
    middleware=[SummarizationMiddleware(model=llm)],
)

result = agent.invoke({
    "messages": [{"role": "user", "content": "My order #123 is delayed."}]
})

print(repr(result["structured_response"]))
# Example output (varies by run):
# SupportResponse(resolution='Shipped Nov 5; minor delay expected.', next_steps=['Track via link', 'Email support if needed'])
```

When to Use LangGraph 1.0?

LangGraph 1.0 is designed for developers who require precise control over agent orchestration and resilience.

A few examples:

- Long-running or Stateful Agents: Workflows that span hours or days, such as multi-stage approvals or research pipelines.

- Human-in-the-Loop (HITL) Systems: Nodes can pause for manual validation before resuming automatically.

- Complex Multi-Agent Collaboration: Define agents as interconnected graph nodes that communicate asynchronously.

- Durable Execution: Resume tasks after crashes or interruptions through built-in checkpointing and state persistence.
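Pausing for a human and resuming later comes down to persisting where the run stopped. The toy below records a pause point for large claims and continues from it once a reviewer decides. Run, start, resume and the $1000 threshold are hypothetical. In LangGraph, the equivalent pause is raised with interrupt() inside a node and requires a checkpointer. This sketch only models the control flow.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Run:
    """Hypothetical run record; in LangGraph, a checkpointer keeps this information."""
    state: dict
    paused_at: Optional[str] = None
    history: list[str] = field(default_factory=list)


def start(claim_amount: float) -> Run:
    """Run the automatic step; pause (instead of finishing) when a human must decide."""
    run = Run(state={"amount": claim_amount})
    run.history.append("auto_review")
    if claim_amount >= 1000:
        run.paused_at = "manager_approval"   # persisted pause point
    else:
        run.state["approved"] = True
    return run


def resume(run: Run, decision: str) -> Run:
    """Continue a paused run from its saved pause point with the reviewer's decision."""
    if run.paused_at != "manager_approval":
        raise ValueError("run is not waiting for approval")
    run.history.append("manager_approval")
    run.state["approved"] = decision == "approve"
    run.paused_at = None
    return run


small, big = start(200), start(1500)
print(small.state["approved"], big.paused_at)  # True manager_approval
resume(big, "approve")
print(big.state["approved"], big.history)      # True ['auto_review', 'manager_approval']
```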

Use Case Example: Multi-Step Approval Workflows

LangGraph 1.0 is best suited to workflows in which the state must persist across interruptions.

For instance, consider an expense reimbursement graph. Here, the system should route claims to managers, pause for approval, and retry on rejections using checkpoints for durability.

Here is the code in Python:

```python
import operator
from typing import Annotated, TypedDict

from langchain_openai import ChatOpenAI
from langgraph.checkpoint.sqlite import SqliteSaver
from langgraph.graph import StateGraph, END
from langgraph.types import Command, interrupt


class State(TypedDict):
    claim_amount: float
    messages: Annotated[list, operator.add]  # reducer: new messages are appended
    approved: bool


def review_claim(state: State) -> dict:
    llm = ChatOpenAI(model="gpt-4o-mini")
    system_msg = {"role": "system",
                  "content": "Reply 'approve' if the claim is under $1000, otherwise 'reject'."}
    user_msg = {"role": "user", "content": f"Claim amount: ${state['claim_amount']}"}
    response = llm.invoke([system_msg, *state["messages"], user_msg])
    approved = response.content.strip().lower().startswith("approve")
    # Return only the updates; the reducer appends the new message
    return {"approved": approved,
            "messages": [{"role": "assistant", "content": response.content}]}


def human_review(state: State) -> dict:
    # interrupt() pauses the run at a checkpoint; the value passed with
    # Command(resume=...) becomes its return value when the run resumes
    decision = interrupt({"claim_amount": state["claim_amount"]})
    return {"approved": decision == "approve"}


def route(state: State) -> str:
    return "final_approve" if state["approved"] else "human_review"


workflow = StateGraph(State)
workflow.add_node("review", review_claim)
workflow.add_node("human_review", human_review)
workflow.set_entry_point("review")
workflow.add_conditional_edges("review", route, {"final_approve": END, "human_review": "human_review"})
workflow.add_edge("human_review", END)

with SqliteSaver.from_conn_string(":memory:") as memory:
    app = workflow.compile(checkpointer=memory)
    config = {"configurable": {"thread_id": "claim_456"}}

    result = app.invoke({"claim_amount": 1500, "messages": [], "approved": False}, config)
    print(result["approved"])  # Expected False: the run is paused at human_review

    # Later, a manager approves; the same thread_id resumes the paused run
    result = app.invoke(Command(resume="approve"), config)
    print(result["approved"])  # True
```

To summarize LangChain 1.0 vs LangGraph 1.0, LangChain 1.0 focuses on simplicity and speed, whereas LangGraph 1.0 delivers precision and durability. By combining both tools, we can create a unified framework that helps in building powerful production-ready LLM-based applications, right from rapid prototyping to enterprise-grade orchestration.

Use Cases Comparison Table: LangChain 1.0 vs. LangGraph 1.0

| Tasks | Use Cases (LangChain 1.0) | Use Cases (LangGraph 1.0) | Key Considerations (LangChain 1.0) | Key Considerations (LangGraph 1.0) |
|---|---|---|---|---|
| Rapid Prototyping | Building chatbots, Q&A bots, or simple RAG systems for internal tools | Less ideal; better for post-prototype refinement | Faster setup (5-10 lines) | More upfront modeling |
| Customer Support | Automated ticketing with tool-calling agents (e.g., query CRM, generate responses); middleware for summarization | Multi-step approval workflows (e.g., escalate to a human for high-value refunds) | Handles 1-3 steps natively | Adds persistence for long threads |
| Document Analysis | Summarizing reports or extracting insights via chains with structured outputs | Iterative refinement graphs (e.g., branch on confidence, loop for clarifications) | Unified content blocks for quick parsing | Cycles for accuracy |
| Multi-Agent Systems | Basic collaboration (e.g., researcher + writer agent) | Complex orchestration (e.g., sales pipeline with lead-gen, negotiation, and closing agents) | Prebuilt patterns | Custom edges for routing |
| Production Scaling | Observability via LangSmith integration for standard agents | Durable execution with checkpoints for async, interruptible apps (e.g., 24/7 monitoring) | Easy middleware for guards | HITL for compliance |
| Complexity Level | Low-to-medium: linear or mildly branched flows | High: non-linear, stateful, or sensitive (e.g., financial approvals) | Use LangChain as the entry point | Use LangGraph for bottlenecks |

Migrating from LangChain to LangGraph Tutorial

It is not always about LangChain 1.0 vs LangGraph 1.0. Now that LangChain 1.0 has adopted LangGraph as its underlying agent runtime, many developers are eyeing a full migration of legacy LangChain code to take advantage of LangGraph’s explicit, graph-based control. However, this is not always necessary.

LangChain 1.0’s create_agent already leverages LangGraph transparently. However, for custom workflows that require cycles, persistence, or multi-actor orchestration, LangGraph is a great option.

Before making a migration from LangChain to LangGraph, it is important to understand the architectural shift.

In the previous LangChain versions, execution was linear and chain-based. LangGraph replaces this with a graph model, wherein each node represents an action or decision and edges define transitions. The state persists across steps.

| Architecture Design | LangChain (Pre 1.0 version) | LangGraph 1.0 |
|---|---|---|
| Execution Model | Sequential Chains | Graph of nodes and edges |
| Memory | In-session only | Durable and persistent |
| Error Handling | Manual retries | Built-in checkpoints and resumes |
| Control Flow | Limited branching | Conditional and cyclic flows |

Here is a tutorial that walks you through the migration process, from simple chains to stateful graphs:

Step 1: Prerequisites and Setup

For this example, we’ll use Python. You need an OpenAI API key.

- Install dependencies (langgraph-checkpoint-sqlite provides the SqliteSaver used in Step 4):

```bash
pip install langchain langgraph langchain-openai langsmith langgraph-checkpoint-sqlite
```

- Set your OpenAI key:

```bash
export OPENAI_API_KEY=your_key_here
```

Optional: LangSmith for debugging. Sign up at smith.langchain.com, then set:

```bash
export LANGCHAIN_TRACING_V2=true
export LANGCHAIN_API_KEY=your_key
```

Step 2: Migrate Simple Chains to Basic Graphs

Legacy LangChain chains, such as an LCEL prompt piped into a model, work well for linear flows but have no built-in state persistence. Migrating to a StateGraph gives every step access to a shared state object.

Before (Legacy LangChain Chain):

```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

prompt = ChatPromptTemplate.from_template("Summarize the following: {text}")
model = ChatOpenAI(model="gpt-4o-mini")
chain = prompt | model

result = chain.invoke({"text": "LangChain is a framework for LLM apps."})
print(result.content)  # Outputs: A concise summary.
```

After (LangGraph StateGraph):

Here is the code in Python:

```python
from typing import TypedDict

from langgraph.graph import StateGraph, END
from langchain_openai import ChatOpenAI


class State(TypedDict):
    text: str
    summary: str


def summarize(state: State) -> dict:
    model = ChatOpenAI(model="gpt-4o-mini")
    prompt = f"Summarize the following: {state['text']}"
    response = model.invoke(prompt)
    # Return only the keys this node updates
    return {"summary": response.content}


workflow = StateGraph(State)
workflow.add_node("summarize", summarize)
workflow.set_entry_point("summarize")
workflow.add_edge("summarize", END)
app = workflow.compile()

result = app.invoke({"text": "LangChain is a framework for LLM apps."})
print(result["summary"])  # Same kind of summary, now read from the graph state
```

Key changes to note:

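- Defining a State schema (here a TypedDict) that documents and type-checks the data flowing between nodes.

- Wrapping logic in node functions that return partial state updates instead of mutating the input state.

- Compiling and invoking: the compiled graph exposes the same invoke() interface as a chain, but you can extend it with more nodes and edges.

To test this step, run both snippets and check that they produce comparable summaries (LLM output will not match word for word).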

Step 3: Migrate Agents to Tool-Equipped Graphs

Legacy agents loop over tool calls and decisions inside an opaque executor. LangGraph exposes that loop as explicit nodes and edges, so you can add retries and branching.

Before (Legacy LangChain Agent):

```python
# Pre-1.0 imports. In LangChain 1.0 these legacy APIs live in the
# langchain-classic package (langchain_classic namespace).
from langchain.agents import create_react_agent, AgentExecutor
from langchain import hub
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI


@tool
def get_weather(city: str) -> str:
    """Get weather for a city."""
    return f"It's sunny in {city}!"


llm = ChatOpenAI(model="gpt-4o-mini")
prompt = hub.pull("hwchase17/react")
agent = create_react_agent(llm, [get_weather], prompt)
agent_executor = AgentExecutor(agent=agent, tools=[get_weather], verbose=True)

result = agent_executor.invoke({"input": "What's the weather in Paris?"})
print(result["output"])
```

This handles tool calls but lacks persistence across sessions.

After (LangGraph Agent Graph):

Key changes to note:

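- Use create_react_agent from langgraph.prebuilt (deprecated in LangGraph 1.0 in favor of LangChain’s create_agent, but still available) or custom nodes for the agent and tool calls.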

- Invoke with messages list and config for thread-aware state.

- Add a checkpointer like MemorySaver for conversation history and migrate from deprecated ConversationBufferMemory.

Here is the Python code:

```python
from langgraph.prebuilt import create_react_agent  # Or build custom
from langchain_openai import ChatOpenAI
from langchain_core.tools import tool
from langgraph.checkpoint.memory import MemorySaver  # For basic state


@tool
def get_weather(city: str) -> str:
    """Get weather for a city."""
    return f"It's sunny in {city}!"


llm = ChatOpenAI(model="gpt-4o-mini")
tools = [get_weather]
memory = MemorySaver()  # Short-term memory
agent_executor = create_react_agent(llm, tools, checkpointer=memory)

config = {"configurable": {"thread_id": "abc123"}}
result = agent_executor.invoke(
    {"messages": [("user", "What's the weather in Paris?")]},
    config,
)
print(result["messages"][-1].content)  # Outputs weather response.
```

Step 4: Add Persistence and Memory

Legacy memory is brittle but LangGraph’s checkpointers persist state durably.

Enhance the agent example by replacing MemorySaver with SqliteSaver for durable, on-disk storage:

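```python
import sqlite3

from langgraph.checkpoint.sqlite import SqliteSaver

# Continues the Step 3 example (llm, tools, config, create_react_agent).
# A long-lived connection; check_same_thread=False lets the saver share it across threads.
conn = sqlite3.connect("checkpoints.db", check_same_thread=False)
memory = SqliteSaver(conn)
agent_executor = create_react_agent(llm, tools, checkpointer=memory)

# First turn on this thread is written to checkpoints.db
agent_executor.invoke({"messages": [("user", "What's the weather in Paris?")]}, config)

# Second invocation resumes state, even after a process restart
result2 = agent_executor.invoke(
    {"messages": [("user", "And in London?")]},
    config,  # Same thread_id
)
```

This step saves checkpoints to a database so the agent can resume after restarts, which is especially helpful for long-running apps.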
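The standard library alone can show why a file-backed checkpointer survives restarts and why thread_id matters. This sketch keeps one message history per thread ID in a SQLite file. It closes the connection to simulate a restart, then reopens it to continue the same conversation. open_store, append_message and the table layout are hypothetical and do not reflect SqliteSaver’s schema.

```python
import json
import os
import sqlite3
import tempfile


def open_store(path: str) -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS threads "
                 "(thread_id TEXT PRIMARY KEY, messages TEXT)")
    return conn


def append_message(conn: sqlite3.Connection, thread_id: str, text: str) -> list[str]:
    """Load this thread's history, append a message and write it back."""
    row = conn.execute("SELECT messages FROM threads WHERE thread_id = ?",
                       (thread_id,)).fetchone()
    messages = json.loads(row[0]) if row else []
    messages.append(text)
    conn.execute("INSERT OR REPLACE INTO threads VALUES (?, ?)",
                 (thread_id, json.dumps(messages)))
    conn.commit()
    return messages


path = os.path.join(tempfile.mkdtemp(), "checkpoints.db")

conn = open_store(path)
append_message(conn, "abc123", "What's the weather in Paris?")
append_message(conn, "other", "Unrelated conversation")  # separate thread, separate history
conn.close()  # simulate a process restart

conn = open_store(path)
history = append_message(conn, "abc123", "And in London?")
conn.close()
print(history)  # ["What's the weather in Paris?", 'And in London?']
```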

Step 5: Incorporate Human-in-the-Loop (HITL)

For approval workflows, add a conditional edge that routes risky steps to a human review node, where the graph pauses until someone responds.

Here is an example of extending a custom agent graph this way:

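```python
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.graph import MessagesState, StateGraph, START, END
from langgraph.types import Command, interrupt


def agent(state: MessagesState) -> dict:
    # Placeholder for your LLM/tool-calling node; here it flags a risky action
    return {"messages": [("ai", "Refund of $5,000 requested (high_risk)")]}


def route_decision(state: MessagesState) -> str:
    if "high_risk" in state["messages"][-1].content:
        return "human_review"
    return "continue"


def human_node(state: MessagesState) -> dict:
    # interrupt() pauses the run; Command(resume=...) supplies its return value
    decision = interrupt("Approve this high-risk action?")
    return {"messages": [("ai", f"Human decision: {decision}")]}


workflow = StateGraph(MessagesState)
workflow.add_node("agent", agent)
workflow.add_node("human_node", human_node)
workflow.add_edge(START, "agent")
workflow.add_conditional_edges("agent", route_decision, {"human_review": "human_node", "continue": END})
workflow.add_edge("human_node", END)

app = workflow.compile(checkpointer=InMemorySaver())  # interrupts need a checkpointer
config = {"configurable": {"thread_id": "hitl-1"}}
app.invoke({"messages": [("user", "Process my refund")]}, config)  # pauses at human_node
result = app.invoke(Command(resume="approved"), config)  # resumes from the checkpoint
print(result["messages"][-1].content)  # Human decision: approved
```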

This turns an implicit agent loop into an explicit pause point, which helps reduce errors in sensitive tasks.

Test iteratively and experiment in a sandbox. Your first migration may take a few hours, but it pays off in scalability.

FAQs

Is LangGraph 1.0 a replacement for LangChain 1.0?

No. LangGraph is not a replacement but a runtime extension of LangChain. LangChain 1.0 still provides high-level abstractions for building agents, while LangGraph 1.0 handles low-level orchestration in the background: managing execution graphs, durability, and control flow.

Do I need to migrate my existing LangChain code to LangGraph?

Not necessarily! Many existing LangChain workflows continue to work without changes. However, if your project requires state persistence, long-running tasks, or branching logic, consider migrating those specific components to LangGraph, which can improve their reliability.

Should beginners start with LangChain 1.0 or LangGraph 1.0?

If you are new to AI agent development, start with LangChain 1.0. It provides simpler abstractions and helps you understand how LLMs interact with tools, memory, and APIs. Later, you can move to LangGraph 1.0 for deeper control, scalability, and custom orchestration.

Which is better for building chatbots in 2026?

LangChain 1.0 is the best choice for most AI-powered chatbots in 2026 because it enables faster development, flexible conversational flows, and easy integration with models, memory, and tools.

LangGraph 1.0 is better suited for complex, production-grade chatbots that require persistent state, advanced orchestration, and long-running workflows.