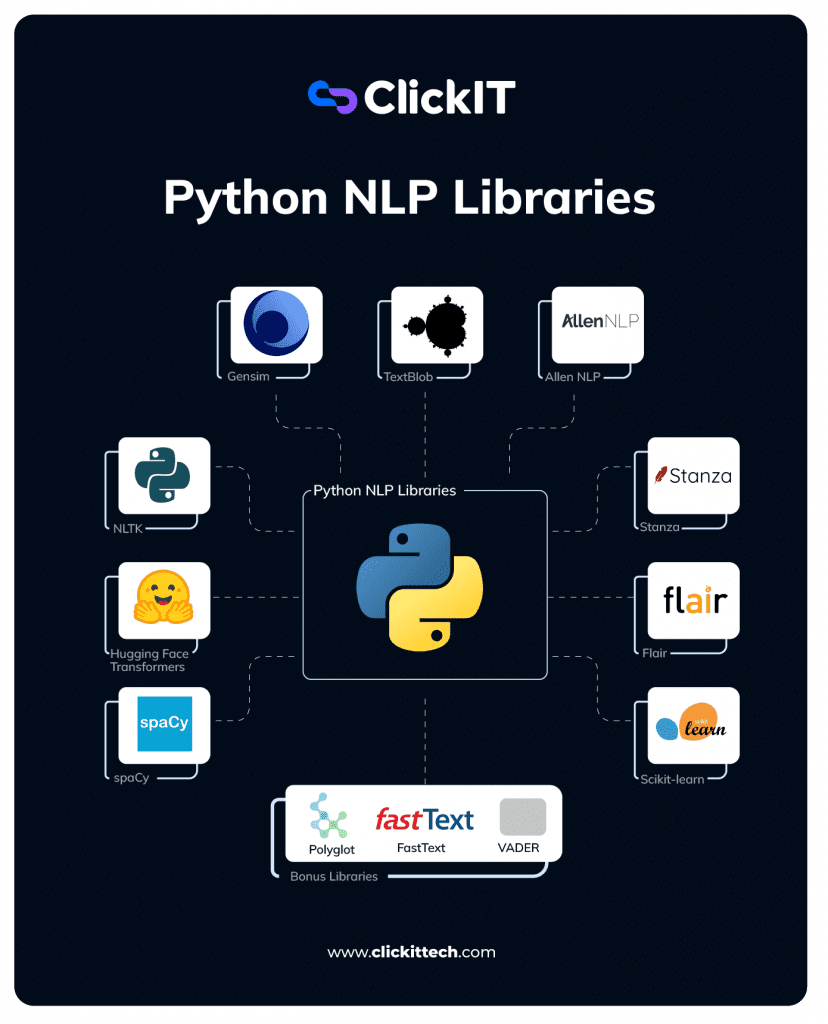

Natural Language Processing (NLP) has become central to modern AI applications as it enables machines to understand, interpret, and generate human language. Python NLP libraries are at the heart of this revolution.

Natural language processing in Python is the most popular approach because Python offers simple syntax, a vast ecosystem, and a rich collection of specialized tools.

Developers and data scientists utilize NLP libraries in Python for sentiment analysis, text interpretation, language translation, tokenization, and numerous other tasks. They have the luxury of choosing from NLP libraries in Python, such as NLTK, spaCy, Transformers by Hugging Face, etc., to build powerful language-based applications.

In this guide, I will explore the best NLP libraries available today and their strengths to help you decide the best fit for your project.

- Python NLP Libraries Comparison Table

- What is the Difference Between spaCy vs NLTK vs Hugging Face Transformers?

- Final Verdict: How to Choose the Right Python NLP Library for Your Project?

- FAQs About Python NLP Libraries

Python NLP Libraries Comparison Table

| Library | Best for | Speed | Deep Learning | Multilingual | Learning Curve |

| spaCy | Production & Speed (NER, POS) | Fastest | Integrated | 70+ Languages | Low |

| Hugging Face Transformers | SOTA Models (LLMs, Research) | Resource-Intensive | Excellent | Vast Model Hub | High |

| NLTK | Learning & Academia | Slow | None | Good | Very Low |

| Gensim | Topic Modeling (Word Embeddings) | Fast (Scalable) | No | Language Agnostic | Medium |

| TextBlob | Quick Prototyping (Simple Sentiment) | Medium | No | Limited | Very Low |

| AllenNLP | Advanced Research (Q&A, Coreference) | Medium | Excellent | Limited | High |

| Stanza | High-Accuracy Multilingual | Medium | Built-in | 70+ Languages | Medium |

| Flair | High-Performance NER/Classification | Medium | Built-in | Good | Medium |

| scikit-learn | Traditional Classification (ML) | Fast | None | Poor | Low |

| OpenNMT-py | Machine Translation (NMT) | High (GPU Optimized) | Excellent | Dedicated | High |

1) spaCy

spaCy is one of the most popular NLP libraries Python developers turn to when the need is for production-ready, high-performing natural language processing. Speed, accuracy, and seamless integration are three aspects in which spaCy excels.

It offers a well-structured pipeline that makes it easy to process large volumes of text with minimal overhead.

What are the advantages of spaCy?

spaCy’s architecture is optimized for real-world NLP tasks with fast tokenization and efficient memory usage. spaCy is significantly faster than many other Python NLP Libraries, which makes it ideal for real-time applications. Its modular and extensible pipelines allow for easy integration of custom components.

It integrates seamlessly with machine learning frameworks such as TensorFlow, scikit-learn, and PyTorch. spaCy offers pre-trained models for multiple languages that offer high accuracy in NER and POS tagging.

Read or watch a video about how to choose between TensorFlow vs PyTorch

Pros and Cons of spaCy?

Pros

- Clean and intuitive API design

- Exceptional speed and performance for large-scale NLP tasks

- Easy integration with deep learning models

Cons

- Limited multilingual model coverage out of the box

- Requires external packages for wider language support

- Smaller community compared to older libraries like NLTK

spaCy is best suited for developers looking for a fast, reliable, and production-ready solution for NLP libraries Python tasks such as Named Entity Recognition (NER), part-of-speech (POS) Tagging, and Dependency Parsing.

2) Hugging Face Transformers

The Hugging Face Transformers revolutionized natural language processing in Python by making transformer-based models easily accessible. It offers state-of-the-art architectures (SOTA) along with 20,000+ pre-trained models via the Hugging Face Hub.

The extensive model hub and ease of use make Hugging Face Transformers a go-to choice for advanced NLP tasks.

What are the advantages of Hugging Face transformers?

Hugging Face Transformers provides access to cutting-edge SOTA models such as BERT, GPT-5, T5, RoBERTa, DistilBERT, and many more. In addition, it offers pre-trained models in multiple languages and domains and is backed by an active and fast-growing open-source community.

“Hugging Face now supports 300+ model architectures with an average of 3 new architectures added every week” – Hugging Face Blog

Pros and Cons of Hugging Face Transformers?

Pros

- Easy to use

- Easy access to high-performing pre-trained models

- Strong ecosystem with tools like Datasets and Tokenizers

- Excellent documentation and community support

Cons

- Learning curve for fine-tuning

- Resource-intensive, which is a barrier for users with limited hardware

Hugging Face Transformers is a good choice for developers and researchers working on advanced NLP libraries Python tasks, especially when leveraging SOTA models for Q&A, classification, and summarization.

Scale your AI team with LATAM NLP and ML experts. Book a Call

3) NLTK

The Natural Language Toolkit (NLTK) is one of the earliest and popularly used NLP libraries Python developers and researchers rely on. This classic, open-source library offers a comprehensive suite for text processing that makes it easy for teaching and research tasks.

What are the advantages of NLTK?

NLTK is popularly known as the Classic Academic Toolkit as it comprises a rich collection of corpora, lexical resources, and over 50 different modules for linguistic processing.

The deep documentation with extensive guides, tutorials, and examples for learners enables students and practitioners to confidently take the first step into natural language processing in Python.

NLTK comes with fine-grained functions for tokenization, parsing, stemming, and POS tagging.

Pros and Cons of NLTK?

Pros

- Rich set of linguistic corpora and lexical resources.

- Beginner-friendly with lots of tutorials and examples.

- Highly flexible for academic and research-oriented tasks.

Cons

- Slow for large-scale text processing compared to modern libraries.

- Not well-suited for production or real-time NLP applications.

- Some tools and algorithms are less modern compared to newer libraries like Transformers or spaCy.

NLTK best suits teaching NLP concepts in classrooms and training programs, and hands-on experimentation with linguistic features.

4) Gensim

Gensim is a specialized NLP library in Python for topic modeling and vector space modeling. This open-source library focuses on unsupervised learning tasks such as identifying semantic relationships and extracting hidden topics from large text corpora.

Gensim’s efficient and memory-friendly design makes it powerful for working with massive datasets.

What are the advantages of Gensim?

Gensim specializes in topic modeling and embeddings. It excels in popular algorithms like Latent Dirichlet Allocation (DRA), doc2vec, and word2vec.

It is highly scalable and efficiently handles large corpora with streaming and incremental training. It seamlessly integrates with NumPy and other Python data libraries.

Pros and Cons of Gensim?

Pros

- Highly optimized for semantic modeling and similarity tasks.

- Scales well with large datasets.

- Provides a straightforward API for implementing complex algorithms.

- Strong support for popular word embedding techniques.

- An active community

Cons

- Not a full-stack NLP toolkit (no Named Entity Recognition, POS tagging, or parsing).

- Requires additional libraries (e.g., spaCy or NLTK) for broader NLP tasks.

Gensim best suits tasks like Topic Modeling (LDA), word2vec or doc2vec embeddings, and working with large-scale unstructured text data.

5) TextBlob

TextBlob is a beginner-friendly NLP library in Python that is designed to simplify common NLP tasks. Built on top of NLTK and Pattern, TextBlob abstracts away the complexity of traditional NLP workflows. This is what makes it popular for quick prototyping and small-scale apps.

What are the advantages of TextBlob?

TextBlob is great for beginners as it provides easy-to-use APIs for common tasks. It offers an intuitive API for tasks like sentiment analysis, part-of-speech tagging, and translation, which reduces setup time.

The user-friendly design allows users to perform powerful operations with minimal code.

Pros and Cons of TextBlob?

Pros

- Quick setup for NLP experiments and demos.

- Includes out-of-the-box support for sentiment analysis, translation, and tokenization, eliminating the need for extensive configuration.

- Great for educational projects or small-scale apps.

- Extremely beginner-friendly with a straightforward API.

Cons

- Limited performance and flexibility for complex tasks.Not suitable for production-grade or large-scale NLP applications.Depends on NLTK and Pattern, which may slow down certain workflows.

TextBlob is a great choice for developers and beginners looking to quickly prototype NLP Libraries Python applications, especially for sentiment analysis, translation, and tagging. However, consider the fact that it falls short in performance and flexibility for advanced or large-scale projects.

Read our blog The Best Python Frameworks

6) AllenNLP

AllenNLP is a research-first open-source deep learning NLP library developed by Allen Institute for AI. Built on top of PyTorch, AllenNLP is designed to support rapid prototyping and experimentation with Natural language processing in Python.

What are the advantages of AllenNLP?

The research-first design allows for exploration of state-of-art models and academic experiments. Since it is built on top of PyTorch, it offers the flexibility and modularity for building deep learning NLP pipelines.

It comes with prebuilt models that include implementations of popular architectures like BiDAF for Q&A and conference resolution.

Pros and Cons of AllenNLP?

Pros

- Strong support for cutting-edge NLP research.

- Modular and extensible design for custom deep learning models.

- Strong for tasks like identifying pronoun references in text.

- Active research community around academic NLP tasks.

Cons

- Steep learning curve for beginners.

- Heavy and resource-intensive for basic NLP tasks.

- Smaller community compared to Hugging Face Transformers.

AllenNLP is a good choice for research-focused projects that require extensibility. It also suits well for custom model experiment and advanced NLP tasks such as Question Answering, Semantic Role Labeling, and Coreference Resolution.

7) Stanza (Stanford NLP)

Stanza is a modern NLP library in Python developed by the Stanford NLP Group. This robust open-source library offers accurate and linguistically rich tools for text analysis. Built with deep learning at its core (PyTorch), Stanza offers strong multilingual support for over 70+ languages, which makes it popular among global NLP apps.

What are the advantages of Stanza?

Stanza offers multilingual coverage with pretrained models for 70+ languages. It delivers high accuracy on core NLP tasks. It leverages the Stanford NLP heritage, inheriting the well-regarded CoreNLP library.

Pros and Cons of Stanza?

Pros

- Strong focus on multilingual NLP tasks.

- Uses modern neural network architectures for high accuracy in tasks like POS tagging, NER, and dependency parsing.

- Supports a wide range of tasks, including tokenization, lemmatization, POS tagging, named entity recognition, and dependency parsing.

- Allows seamless integration with deep learning workflows and custom model training.Actively developed and backed by Stanford NLP research.

Cons

- Slower than spaCy for large-scale pipelines.

- GPU recommended for optimal performance.

- Less beginner-friendly compared to lightweight libraries like TextBlob.

Stanza is the best choice for NLP libraries Python tasks requiring strong multilingual support and high accuracy in POS tagging, NER, and dependency parsing.

8) Flair

Flair is a powerful NLP library in Python developed by Zalando Research with sequence labeling and text embeddings in mind.

The striking feature of Flair is its stacked embeddings which allows users to combine multiple embeddings to get highly accurate representations for downstream tasks.

What are the advantages of Flair?

The key strength of Flair is its ability to combine multiple embedding types, such as BERT, ELMo, GloVe, FastText, and Flair’s own contextual embeddings, for richer text representation.

This library performs well compared to other NLP libraries in Python in tasks like named entity recognition and sentiment analysis. It offers pretrained models for a wide range of languages. It balances ease of use with advanced capabilities by providing a simple interface for applying complex embeddings to common NLP tasks.

Pros and Cons of Flair?

Pros

- Easy to use with minimal setup.

- Supports combining multiple embeddings for better accuracy.

- Pretrained models are available for quick use.

Cons

- Easy to use with minimal setup.

- Supports combining multiple embeddings for better accuracy.

- Pretrained models are available for quick use.

Flair is an excellent choice for NLP Libraries Python tasks that require high-performance text classification and NER.

Schedule a call with ClickIT’s AI Developers to map your NLP use case

9) scikit-learn

Scikit-learn is a popularly used machine learning framework for the essential tools it offers for many NLP workflows. It uses traditional machine learning methods and feature extraction techniques like Bag-of-Words (BoW) and TF-IDF vectorizations that play a vital role in text classification and clustering tasks.

What are the advantages of scikit-learn?

The key strength of scikit-learn is that it combines robust tools like TF-IDF and Bag of Words vectorizers with traditional machine learning algorithms like SVM and Naive Bayes for effective text processing.

This open-source library is highly versatile and supports classification, regression, clustering, and dimensionality reduction. It is easy to set up for small to mid-sized NLP projects.

Pros and Cons of scikit-learn?

Pros

- Simple and efficient for classical NLP tasks.

- Lightweight, fast, and easy to use

- Excellent for educational use and prototyping.

- Huge community support and extensive documentation

Cons

- No deep learning support out of the box.

- Does not provide modern NLP features like NER, parsing, or embeddings

- Limited scalability for advanced, real-world NLP applications.

Scikit-learn is a great choice for NLP Libraries Python tasks that require lightweight, traditional machine learning approaches for text classification and clustering.

10) OpenNMT-py

OpenNMT is a leading open-source toolkit for neural machine translation (NMT) and sequence-to-sequence learning. OpenNMT-py is the PyTorch implementation of the OpenNMT ecosystem.

SYSTRAN, Ubiqus, and Harvard SEAS actively maintain it. OpenNMT-py is widely used in research and production for translation-focused applications.

What are the advantages of OpenNMT-py?

OpenNMT excels in building high-performance translation systems, with strong results in benchmarks like WMT19. Built on PyTorch, it seamlessly integrates with Python workflows with a user-friendly API.

It is optimized for large-scale training with multi-GPU support, which makes it suitable for production environments.

Pros and Cons of OpenNMT-py?

Pros

- Dedicated Python library within a well-supported ecosystem

- Flexible for custom model building and experimentation.

- Permissive licensing makes it ideal for commercial use without restrictions

- Strong community of researchers and developers

Cons

- Focuses on translation-related tasks and lacks support for traditional NLP tasks.

- Heavier setup compared to general-purpose NLP libraries.

- Requires familiarity with PyTorch and deep learning concepts for effective customization and training

OpenNMT-py is ideal for building translation systems for multiple language pairs like English-to-German and Spanish-to-English and sequence-to-sequence tasks like data-to-text and speech recognition.

Bonus Picks: Polyglot, FastText, VADER

Several other NLP libraries in Python come with their unique strengths. These bonus picks serve specialized use cases and can complement the main libraries that I covered above.

a) Polyglot

Polyglot is designed for multilingual NLP, supporting over 160 languages. It provides tools for tokenization, Named Entity Recognition, sentiment analysis, and word embeddings.

- Best for: Projects requiring broad multilingual coverage and quick prototyping

- Pros: Excellent language diversity, easy-to-use interface

- Cons: Smaller community, less active development compared to spaCy or Stanza

b) FastText (by Facebook AI)

FastText is a lightweight and efficient library for word embeddings and text classification. Developed by Facebook AI Research, it extends the word2vec approach by considering subword information that improves handling of rare and out-of-vocabulary words.

- Best for: Training custom embeddings and fast text classification.

- Pros: Extremely fast, supports supervised and unsupervised learning, good for rare words.

- Cons: Not a full NLP pipeline, limited to embeddings and classification tasks.

c) VADER (Valence Aware Dictionary and sEntiment Reasoner)

VADER is a rule-based sentiment analysis tool, especially effective for social media text like tweets, reviews, and short comments. It is part of the NLTK ecosystem and works well out of the box without heavy training.

- Best for: Sentiment analysis on social media and short informal texts.

- Pros: Lightweight, accurate for short text sentiment, and easy to use.

- Cons: Limited scope, only useful for sentiment-related tasks.

What is the Difference Between spaCy vs NLTK vs Hugging Face Transformers?

While the list above details many Python NLP Libraries, three frameworks currently dominate the landscape for Natural Language Processing in Python: NLTK, spaCy, and Hugging Face Transformers. Understanding their specialized roles is key to selecting the right tool for your project.

| Feature | NLTK (Natural Language Toolkit) | spaCy | Hugging Face Transformers |

| Primary Goal | Education, Research, Linguistic Analysis | Production-Ready Speed, Deployment | State-of-the-Art (SOTA) Deep Learning |

| Core Strength | Rich linguistic resources (corpora, lexicons) | Optimized speed for core tasks (NER, POS) | Access to thousands of pre-trained LLMs (BERT, GPT, etc.) |

| Ideal Use Case | Building custom academic algorithms, basic experiments | Enterprise APIs, real-time filtering, high-volume processing | Fine-tuning models for Q&A, advanced summarization |

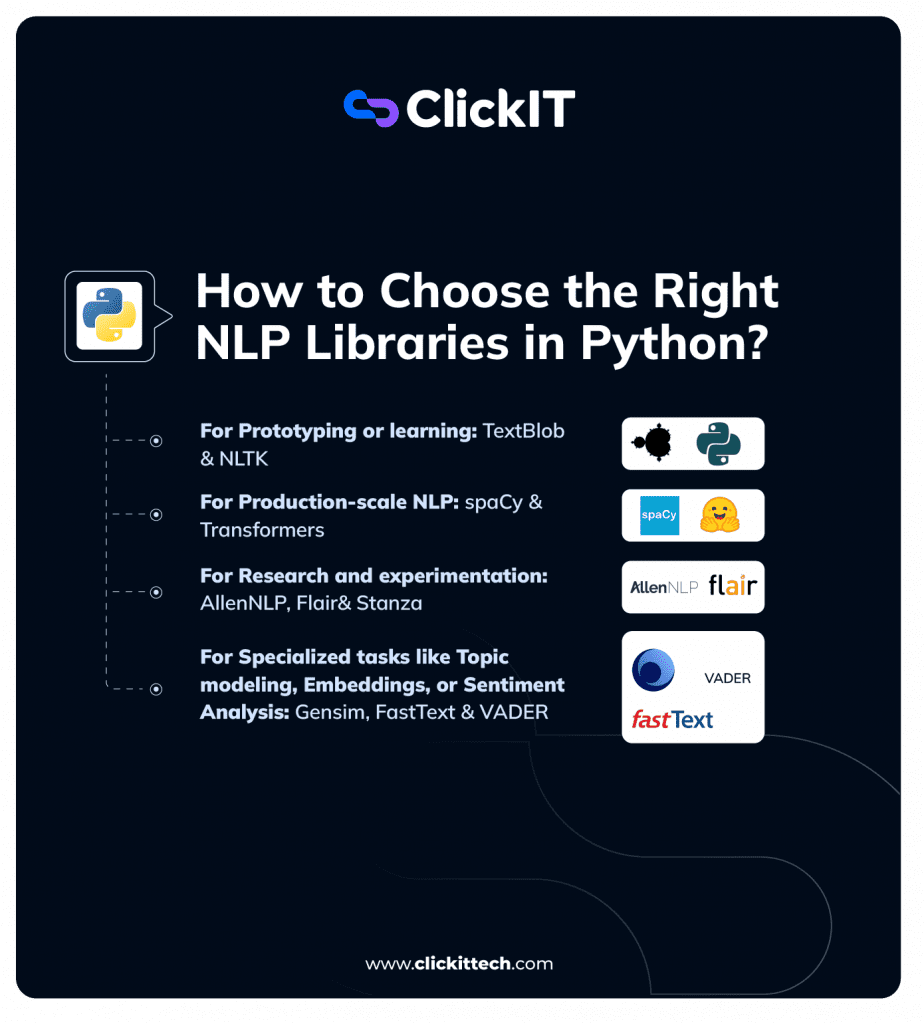

Final Verdict: How to Choose the Right Python NLP Library for Your Project?

Choosing the right natural language processing in Python largely depends on the use case like speed, scalability, multilingual support, or ease of use:

For Learning & Prototyping:

- Choose NLTK and TextBlob. They are beginner-friendly, offer simple APIs, and are excellent for academic study or initial experiments with fundamental NLP concepts.

For Production-Scale & High Speed:

- Choose spaCy. It is built for the enterprise, optimized for high speed, and ideal for building low-latency APIs used in real-time filtering, NER, and POS tagging.

For State-of-the-Art (SOTA) Accuracy & LLMs:

- Choose Hugging Face Transformers and AllenNLP. These provide access to the latest Large Language Models (LLMs) and are necessary for advanced tasks like complex summarization and Q&A.

For Multilingual Support:

- Choose Stanza or spaCy. Both offer pre-trained models for over 70 languages, ensuring high accuracy for global applications.

For Specialized, Unsupervised Tasks:

- Choose Gensim for topic modeling (LDA) and efficient word embeddings.

- Choose FastText for high-speed word embeddings and classification, especially when dealing with rare words.

- Choose VADER for quick, rule-based sentiment analysis on social media text.

For Custom Deep Learning Research:

- Choose AllenNLP or Flair. They are modular, PyTorch-based frameworks that give researchers the flexibility to build and experiment with custom, cutting-edge models.

The ClickIT Recommendation: A Hybrid Approach

The landscape of NLP Libraries in Python is constantly evolving due to the emergence of Large Language Models (LLMs) and the increasing demands for real-time applications.

For modern, enterprise-level solutions, we recommend a hybrid approach:

- Use spaCy for the fast, foundational tasks like tokenization and pre-processing.

- Integrate Hugging Face Transformers for the heavy-lifting, deep contextual analysis and fine-tuning.

By leveraging the right combination of these powerful Python NLP Libraries, our team builds scalable, intelligent applications ready for the future of AI.

FAQs About Python NLP Libraries

For beginners, TextBlob and NLTK are excellent starting points, as they offer simple APIs, extensive tutorials, and built-in datasets. They allow beginners to experiment with tokenization, sentiment analysis, and POS tagging with minimal setup.

Traditional libraries, such as NLTK or Scikit-learn vectorizers, struggle with unseen words. Modern libraries such as FastText and Transformers solve this by using subword embeddings or byte-pair encoding (BPE) that break words into smaller units.

Flair’s unique feature of stacked embeddings captures both word-level and subword-level semantics.

This approach delivers state-of-the-art performance for tasks such as text classification and NER, particularly in multilingual settings.

However, it comes at the cost of higher computational requirements.