Choosing between Google Gemini and ChatGPT in 2026 comes down to understanding how each platform approaches scale, reasoning, and operational control.

Sometimes that means reviewing a large codebase. Other times, it means testing agent workflows, analyzing long research documents, or stress-testing structured outputs for production systems.

Context switching between models is part of the job now. While both Gemini and ChatGPT are general-purpose frontier models, each is optimized for different types of workflows, which determines when I reach for one over the other.

So, if you are a tech leader deciding which AI model is the right choice for 2026, stick around as I cover the following:

- Table of comparison between Google Gemini vs ChatGPT

- Gemini Features

- ChatGPT Features

- Gemini vs ChatGPT Full Comparison

- Gemini vs ChatGPT: Which Should You Choose for AI decision-makers?

- GPT vs Gemini: Speed, Latency, and Benchmark Performance

- FAQs about Gemini vs ChatGPT

Overview

- Gemini is designed to ingest and reason over extremely large, multimodal inputs.

- ChatGPT is strongest when the task requires complex reasoning, structured output, and orchestration across tools.

- Teams embedded in Google’s stack benefit from Gemini’s deep integration, while those needing broader tooling and custom workflows find more flexibility with ChatGPT.

- Gemini’s pricing is bundled with Google AI Pro (formerly Google One AI Premium) and Workspace plans, which can lower costs for organizations already in that ecosystem.

- ChatGPT’s tiered subscriptions, usage-based API pricing, and broader enterprise options appeal to more tool-agnostic teams.

Table of comparison between Google Gemini vs ChatGPT

With the December 2025 release of GPT-5.2 in mind, here is a summary of the key differences between Google Gemini and ChatGPT for tech leaders, followed by a detailed breakdown of each category.

| Decision Factor | Recommended Platform |
|---|---|
| Best for productivity | Gemini |
| Best for dev and engineering | ChatGPT |
| Best for multimodal reasoning | Gemini |
| Best for long-context workflows | Gemini |
| Best for automation | ChatGPT |
| Best for cost-sensitive organizations | Depends on workload profile |
| Best for data governance | Tie, depends on your existing cloud stack |
| Best for scaling AI apps | ChatGPT |
| Reduces human overhead faster | ChatGPT |
| Vendor lock-in risk vs deployment speed | Gemini trades higher lock-in risk for faster deployment; ChatGPT favors flexibility |

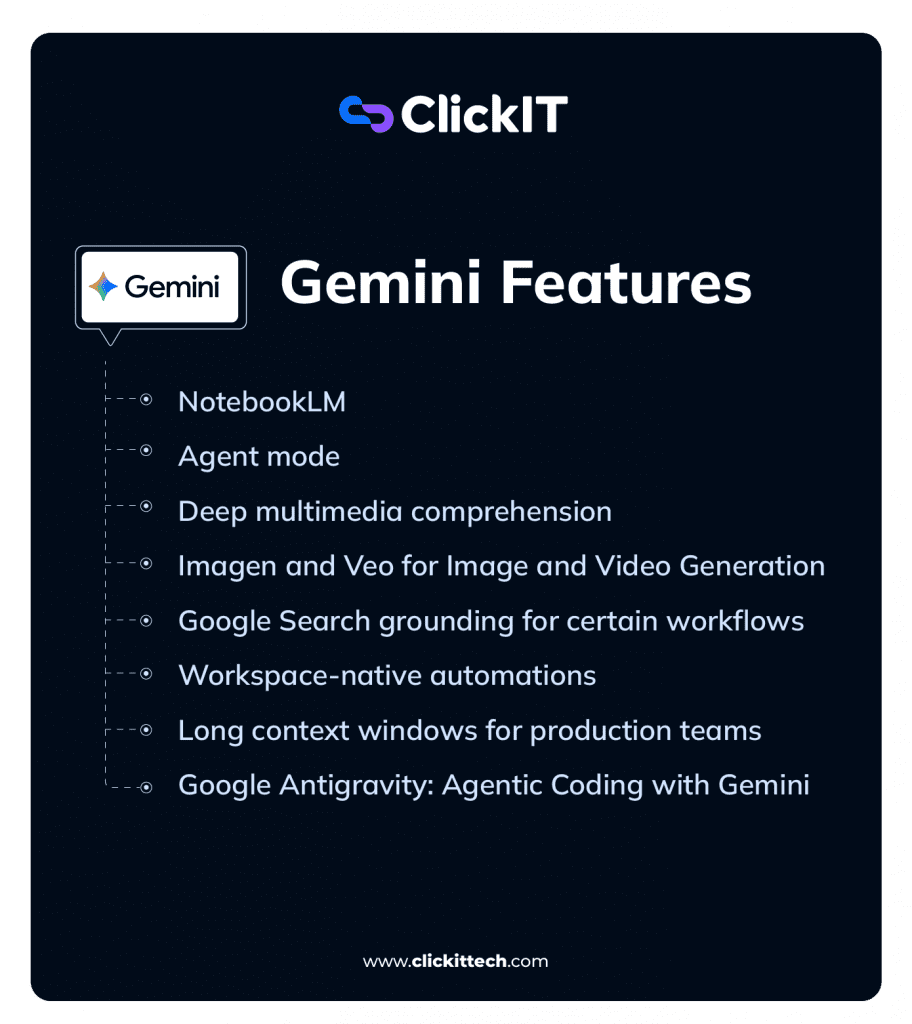

Gemini Features

Gemini’s features are built around its deep integration with Google’s ecosystem, allowing users to work across multiple Google services within a single AI-powered interface.

NotebookLM

NotebookLM is an AI-powered research and writing assistant that grounds its answers in documents you upload, such as Google Docs, PDFs, and web links. It works from a user-provided corpus (technical papers, API documentation, project briefs) to create a controlled information environment.

Rather than relying only on traditional search-and-retrieve methods, it uses a massive long-context window to process entire documents at once, which supports stronger whole-document reasoning than a typical Retrieval-Augmented Generation (RAG) pipeline. Responses are grounded primarily in the uploaded sources, which significantly improves factual accuracy for document-heavy tasks.

This is a critical feature for technical accuracy, as it reduces hallucinations and makes it easier to trace generated outputs back to the underlying source material.

For example, NotebookLM is great for podcasters who want to interview a guest that recently published a book. By uploading the book, they can instantly generate a list of chapter-specific questions, identify the author’s core arguments, and even pull direct quotes to use in the interview, with every piece of information directly traceable to the source text.
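
Traceability is only useful if the quotes really are verbatim. Before using pulled quotes in an interview, I like to check them mechanically. This is a minimal sketch, not how NotebookLM works internally: `locate_quote` and the sample chapter text are hypothetical, and it does exact matching after collapsing whitespace, so paraphrases are (correctly) rejected.

```python
import re
from typing import Optional


def normalize(text: str) -> str:
    """Collapse runs of whitespace so line wrapping in the source doesn't break matches."""
    return re.sub(r"\s+", " ", text).strip()


def locate_quote(quote: str, sources: dict[str, str]) -> Optional[tuple[str, int]]:
    """Return (source name, offset in the normalized source), or None if not verbatim."""
    needle = normalize(quote)
    for name, body in sources.items():
        offset = normalize(body).find(needle)
        if offset != -1:
            return name, offset
    return None


# Hypothetical uploaded source; the line break mimics a wrapped PDF extract.
sources = {
    "chapter_3.txt": "The first draft is never\nthe final draft. Revise ruthlessly.",
}
print(locate_quote("never the final draft", sources))  # ('chapter_3.txt', 19)
print(locate_quote("Revise gently.", sources))  # None
```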

Agent mode

Gemini’s agentic capabilities focus on native workflow automation within its ecosystem. The features are designed to orchestrate tasks across connected Google services.

For instance, I can issue a single directive for it to analyze raw data from a Google Sheet, reference a project outline in Google Docs, and then compose a summary email in Gmail.

This approach leverages the unified context of a user’s workspace to perform complex, multi-application processes.
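
In practice Gemini does the planning itself; here I hard-code the plan to show the shape of the orchestration: one directive becomes an ordered list of tool calls, and each call reads earlier results from a shared context. The tool functions (`read_sheet`, `read_doc`, `draft_email`) and their data are hypothetical stand-ins, not Workspace APIs.

```python
import csv
import io
from typing import Callable

# Hypothetical stand-ins for Sheets, Docs, and Gmail; no Workspace API is called.
SHEET_CSV = "region,revenue\nEMEA,120\nAPAC,80\nAMER,200\n"
DOC_OUTLINE = "Q3 goal: grow AMER revenue."


def read_sheet() -> list[dict[str, str]]:
    return list(csv.DictReader(io.StringIO(SHEET_CSV)))


def read_doc() -> str:
    return DOC_OUTLINE


def draft_email(rows: list[dict[str, str]], outline: str) -> str:
    top = max(rows, key=lambda row: int(row["revenue"]))
    total = sum(int(row["revenue"]) for row in rows)
    return (f"Subject: Q3 update\n\n{outline}\n"
            f"Total revenue: {total}. Top region: {top['region']} ({top['revenue']}).")


def run_directive() -> str:
    """Execute a fixed plan for one directive; each step's output lands in a shared context."""
    context: dict[str, object] = {}
    plan: list[tuple[str, Callable[[dict], object]]] = [
        ("rows", lambda ctx: read_sheet()),
        ("outline", lambda ctx: read_doc()),
        ("email", lambda ctx: draft_email(ctx["rows"], ctx["outline"])),
    ]
    for key, step in plan:
        context[key] = step(context)
    return str(context["email"])


print(run_directive())
```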

Canvas

Canvas provides a dedicated side-panel workspace for iterative projects like coding or documentation. This structure separates the project artifact from the linear chat history, allowing for real-time edits and a persistent view of the work.

For a developer like me, this is a more organized environment for tasks like code refactoring, where maintaining a clean, isolated view of the current code version is essential for clarity.

Deep multimedia comprehension

Gemini is natively multimodal: it was built from the ground up to reason across different data formats simultaneously. For instance, I can provide it with a PNG of a performance dashboard along with the raw CSV data that generated the chart.

It can then synthesize insights using both the visual and tabular information in a single process. This ability to fuse data from different modalities is a core architectural feature.
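
Here is roughly what that dashboard-plus-CSV request looks like on the wire. This is a sketch assuming the REST `generateContent` body format (`contents` → `parts`, with images passed as `inline_data` carrying a `mime_type` and base64 `data`); it only builds the JSON and sends nothing, the PNG bytes are a placeholder, and you should check the current API reference before relying on the field names.

```python
import base64
import json


def build_multimodal_request(png_bytes: bytes, csv_text: str, question: str) -> dict:
    """Put the chart image and its raw CSV in one request so the model can cross-check them."""
    return {
        "contents": [{
            "parts": [
                {"text": question},
                {"inline_data": {
                    "mime_type": "image/png",
                    "data": base64.b64encode(png_bytes).decode("ascii"),
                }},
                {"text": "Raw data behind the chart (CSV):\n" + csv_text},
            ]
        }]
    }


# Placeholder bytes (just the PNG signature) stand in for a real dashboard screenshot.
body = build_multimodal_request(
    png_bytes=b"\x89PNG\r\n\x1a\n",
    csv_text="day,p95_ms\nMon,210\nTue,480\n",
    question="Does the spike in the chart match the CSV? Explain any mismatch.",
)
print(json.dumps(body, indent=2))
```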

Imagen and Veo for Image and Video Generation

Gemini includes native image and video generation via Imagen and Veo, fully integrated into its multimodal reasoning stack.

Gemini’s image capabilities come from two model families: Imagen, and Gemini’s own native image models, popularly nicknamed Nano Banana (Gemini 2.5 Flash Image) and Nano Banana Pro (Gemini 3 Pro Image). I use Nano Banana Pro to quickly visualize prototypes, turn handwritten notes into diagrams, or generate infographics for complex data. All generated images include an invisible SynthID watermark, which is important for compliance and content tracking.

Additionally, Gemini supports conversational image generation, allowing iterative refinement over multiple turns. Gemini 3 Pro Image (gemini-3-pro-image-preview) is optimized for professional workflows, offering high-resolution output (1K–4K), multi-turn generation, up to 14 reference images, Google Search grounding, and a “thinking mode” for complex prompts.

For video, Veo 3.1 generates high-quality short clips with native audio. I’ve used it for text-to-video creation and photo-to-video, turning a single image into an 8-second animated clip, with cinematic control over motion and composition. Veo 3.1 is accessible via Google Flow, Gemini App/API, Vertex AI, and third-party integrations like invideo AI and fal.ai.

For creatives, combining Gemini’s reasoning with image and video generation can noticeably speed up iterative workflows. For example, I can generate a storyboard from a text outline, improve the framing with Nano Banana Pro, and export short clips with Veo, all without leaving the Gemini ecosystem.

Google Search grounding for certain workflows

To provide current and verifiable information, Gemini uses Google Search grounding. When the Google Search tool is enabled and a prompt needs knowledge beyond its training data, the model generates and executes one or more search queries.

It then synthesizes the search results with its internal knowledge to formulate a response. For technical validation, the API returns groundingMetadata that includes the source URIs. This mechanism connects the model’s reasoning to the live web, making its responses both timely and citable.
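
To turn that metadata into citations, I map each supported text segment to the URIs of the chunks that back it. A minimal sketch, assuming the REST response shape where `groundingMetadata` holds `groundingChunks` (each with a `web` object containing `uri` and `title`) and `groundingSupports` (each with a `segment` and `groundingChunkIndices`); verify these names against the current API reference. The sample metadata below is fabricated for illustration and the URLs are placeholders.

```python
def citations_for(metadata: dict) -> list[tuple[str, list[str]]]:
    """Pair each grounded text segment with the source URIs that support it."""
    chunks = metadata.get("groundingChunks", [])
    result = []
    for support in metadata.get("groundingSupports", []):
        uris = [
            chunks[i]["web"]["uri"]
            for i in support.get("groundingChunkIndices", [])
            if i < len(chunks) and "web" in chunks[i]  # skip out-of-range or non-web chunks
        ]
        result.append((support["segment"]["text"], uris))
    return result


# Illustrative metadata shaped like a grounded response; not real API output.
sample_metadata = {
    "webSearchQueries": ["example product 2.0 release date"],
    "groundingChunks": [
        {"web": {"uri": "https://example.com/release-notes", "title": "example.com"}},
        {"web": {"uri": "https://example.org/changelog", "title": "example.org"}},
    ],
    "groundingSupports": [
        {
            "segment": {"startIndex": 0, "endIndex": 29, "text": "Version 2.0 shipped in March."},
            "groundingChunkIndices": [0, 1],
        },
    ],
}

for text, uris in citations_for(sample_metadata):
    print(f"{text} [{', '.join(uris)}]")
```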

Workspace-native automations

Gemini’s agentic capabilities are expressed as workspace-native automations. These features are designed to orchestrate tasks across connected Google services like Docs, Sheets, and Gmail.

For instance, I can issue a single directive for it to analyze raw data from a spreadsheet, pull context from a Drive document, and draft a project update email.

The system can also combine information from a user’s private Workspace content with data browsed from external websites to generate comprehensive reports, all from a single request.

Long context windows for production teams

Gemini supports extremely large context windows across its various models, enabling deep reasoning over long documents or datasets.

For example, Gemini 3 can process up to 1 million tokens in a single conversation, which is around 700,000 words. Gemini 1.5 Pro has a 2 million token window, while models such as Gemini 2.5 Pro and Flash generally handle around 1 million tokens. Certain API deployments provide access to expanded context windows of up to 2 million tokens for specialized use cases. Internally, Google DeepMind researchers have reported experiments reaching 10 million tokens, demonstrating the potential scale for advanced document- and data-heavy workflows.

This large context capacity allows me to load an entire monolithic codebase for a dependency analysis or input a full year of project meeting transcripts to identify key decision points. Because the model stays coherent across that much information, analysis at this scale becomes practical where it previously wasn’t.
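
Before loading a whole codebase, I do a quick budget check. Google’s rule of thumb for Gemini is about 4 characters per token (roughly 60–80 English words per 100 tokens), which is where the ~700,000-words figure comes from. This is a minimal sketch built on that heuristic only; `load_sources` and the 64,000-token output reserve are my own assumptions, and the API’s token-counting endpoint gives exact numbers.

```python
import math
from pathlib import Path

# Rule of thumb: about 4 characters per token. Use the API's token counter for exact figures.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(texts: list[str], window: int = 1_000_000,
                    reserve_for_output: int = 64_000) -> tuple[bool, int]:
    """Return (fits, estimated_total) after reserving room for the model's answer."""
    total = sum(estimate_tokens(t) for t in texts)
    return total <= window - reserve_for_output, total


def load_sources(root: str, suffixes: tuple[str, ...] = (".py", ".ts", ".go", ".java")) -> list[str]:
    """Hypothetical helper: read every source file under root with a matching suffix."""
    return [p.read_text(encoding="utf-8", errors="ignore")
            for p in Path(root).rglob("*") if p.is_file() and p.suffix in suffixes]


# Synthetic example: three 1M-character files are about 750k tokens, which fits;
# four of them (about 1M tokens) would not once output space is reserved.
print(fits_in_context(["x" * 1_000_000] * 3))
print(fits_in_context(["x" * 1_000_000] * 4))
```

Against a real repository, the call would be `fits_in_context(load_sources("path/to/repo"))`.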

To make these massive context windows practical, Gemini supports context caching, which dramatically reduces latency and computational costs for repeated queries. Devs can persist processed context from large documents or codebases. There are two types: implicit caching, enabled by default, which automatically discounts repeated content (up to 90% for supported models) without storage fees; and explicit caching, which allows developers to manually cache content, control its time-to-live, and reference it in subsequent prompts for additional cost savings.

Context caching is especially useful for my workflows that repeatedly query large, mostly static datasets, such as chatbots with extensive instructions, recurring document analysis, or frequent codebase inspections. Supported models include Gemini 3 Flash, Gemini 3 Pro, Gemini 2.5 Pro/Flash, and Gemini 2.0 Flash. Minimum cacheable content is 2,048 tokens, and caches can hold up to 10 MB, making this a practical optimization for large-scale, document-heavy workflows.
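
The savings are easy to estimate with back-of-the-envelope arithmetic. This sketch assumes a simplified model: the first query pays full price for the shared prefix, every later query pays `(1 - cached_discount)` on it, and explicit-cache storage is a flat `storage_cost`. The $1.00-per-million price is a placeholder, not a real rate card, and `input_cost` is a hypothetical helper.

```python
def input_cost(prefix_tokens: int, query_tokens: int, n_queries: int,
               price_per_million: float, cached_discount: float = 0.0,
               storage_cost: float = 0.0) -> float:
    """Dollar cost of the input side of n queries that all reuse the same large prefix.

    Simplification: the first query pays full price for the prefix (cache miss);
    later queries pay (1 - cached_discount) on it. storage_cost is a flat stand-in
    for explicit-cache storage fees.
    """
    if n_queries < 1:
        raise ValueError("n_queries must be at least 1")
    per_token = price_per_million / 1_000_000
    fresh = n_queries * query_tokens * per_token
    first_prefix = prefix_tokens * per_token
    reused_prefix = (n_queries - 1) * prefix_tokens * per_token * (1 - cached_discount)
    return fresh + first_prefix + reused_prefix + storage_cost


# Hypothetical workload: a 500k-token codebase, 1k-token questions, 100 queries.
no_cache = input_cost(500_000, 1_000, 100, price_per_million=1.00)
with_cache = input_cost(500_000, 1_000, 100, price_per_million=1.00, cached_discount=0.90)
print(f"no cache: ${no_cache:.2f}, with cache: ${with_cache:.2f}")
```

Under these assumptions, the 90% discount brings the input bill from about $50.10 down to about $5.55, because the large prefix dominates the cost.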

Google Antigravity: Agentic Coding with Gemini

Google Antigravity is an AI-first IDE built around Gemini 3 models, designed to accelerate software development through autonomous agents. Released in November 2025, it’s still early-stage, so I’d recommend treating its outputs as suggestions rather than final code.

Antigravity is agent-first: multiple AI agents can simultaneously plan, write, test, and validate features with minimal human input. The IDE integrates editor, terminal, and browser access, allowing agents to search documentation, run code, and test applications autonomously.

Its multi-agent workflow and task-based abstractions can help monitor agent progress, verify outputs, and maintain control across complex projects. During its preview, devs can access models like Gemini 3 Pro and Claude Sonnet 4.5. Antigravity shows the potential of agentic coding, but human oversight remains critical for production-grade reliability.

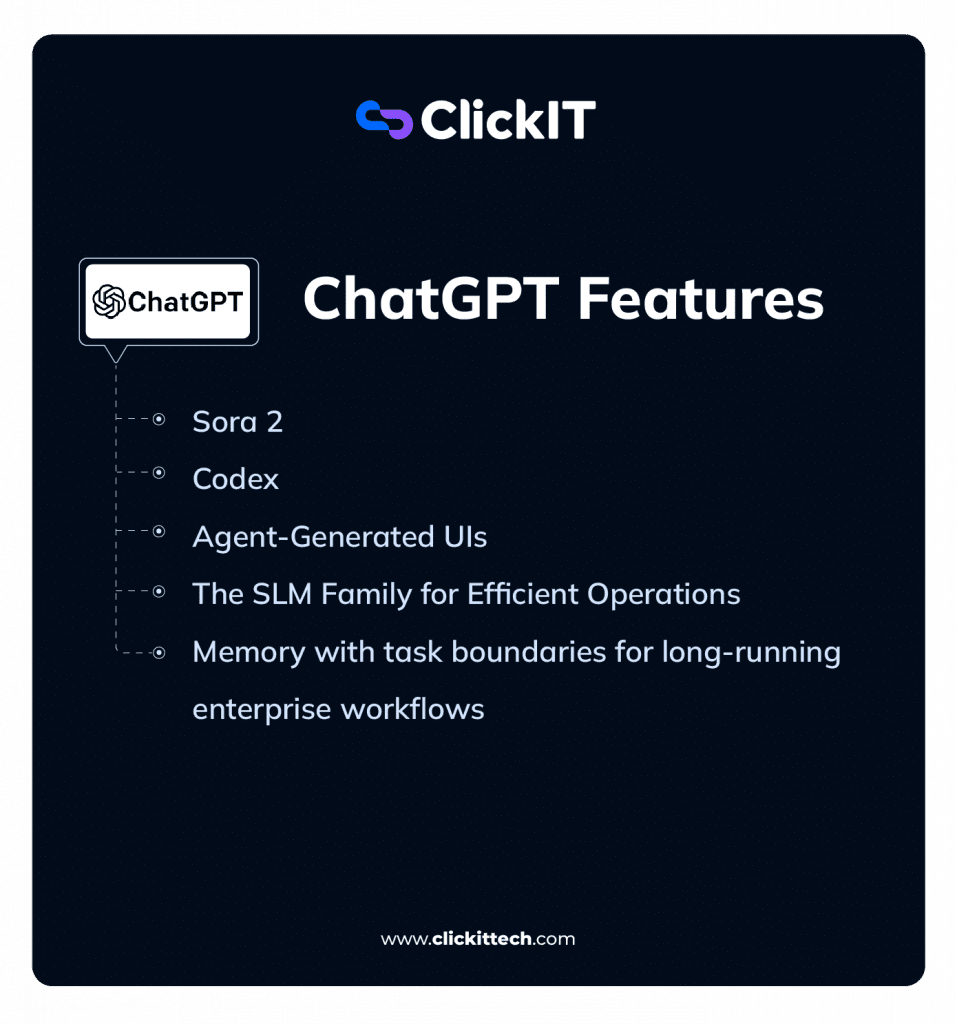

ChatGPT Features

ChatGPT’s capabilities are delivered through a set of powerful, distinct features. These tools provide a different kind of leverage in my workflow, focusing on automation, customization, and interactive problem-solving.

Sora 2

Sora 2 is OpenAI’s model for generating high-fidelity, physically coherent video from text prompts. I use this for creating technical prototypes and visualizing complex concepts.

For example, I can generate a short video demonstrating a new UI/UX flow or create an animated architectural diagram to explain a system’s data flow. The model’s ability to maintain object permanence and simulate physics allows for realistic technical demonstrations. The “Cameo” functionality also lets me insert a consenting person’s likeness, including my own, into generated videos, which is useful for creating personalized training materials.

Codex

Codex is an AI coding agent that helps devs like me write, review, and ship code faster. I integrate it directly into my development workflow by authorizing it to access a specific codebase, where it functions as a virtual team member within my existing tools, like my IDE and terminal.

It is powered by specialized models like GPT-5.2-Codex, which give it a deep, contextual understanding of the project. I use it to write unit tests for a new function, refactor a complex module, or draft a pull request with a detailed description. Cloud tasks, like running code or debugging, are performed in an isolated, cloud-based sandbox; when I use it from the terminal or IDE, it runs locally under sandbox and approval settings I control.

Agent-Generated UIs

This is an advanced capability where the AI agent generates a streaming, interactive user interface as its output, instead of just text. For my work, this means I can ask the agent to monitor a system’s health, and it can generate a real-time dashboard UI to display the metrics.

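This pattern, which can be implemented with protocols such as the open AG-UI (Agent-User Interaction) protocol, keeps a shared state between the interface and the agent’s backend logic, so my interactions with the interface can trigger further actions from the agent. It moves beyond conversational AI toward AI-generated interactive applications.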
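
The shared-state loop is the core idea. Real protocols stream state snapshots and incremental deltas as events over the network; this sketch collapses that into an in-process object with a plain dictionary merge, so it shows the feedback loop, not any protocol’s actual event format. `SharedState`, `HealthAgent`, and the `restart_service` action are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SharedState:
    """Hypothetical state mirrored between an agent backend and its generated UI."""
    data: dict = field(default_factory=dict)
    listeners: list[Callable[[dict], None]] = field(default_factory=list)

    def apply_delta(self, delta: dict) -> None:
        # Merge the change, then push a copy of the full state to every UI listener
        self.data.update(delta)
        for listener in self.listeners:
            listener(dict(self.data))


class HealthAgent:
    def __init__(self, state: SharedState) -> None:
        self.state = state

    def report_metrics(self, cpu: float, error_rate: float) -> None:
        self.state.apply_delta({"cpu": cpu, "error_rate": error_rate})

    def on_ui_action(self, action: str) -> None:
        # A click in the generated dashboard flows back to the agent as an action
        if action == "restart_service":
            self.state.apply_delta({"status": "restarting"})


rendered: list[dict] = []
state = SharedState()
state.listeners.append(rendered.append)  # stand-in for re-rendering the dashboard
agent = HealthAgent(state)
agent.report_metrics(cpu=0.72, error_rate=0.01)
agent.on_ui_action("restart_service")
print(rendered[-1])
```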

Multi-Agent Workflows

For automating the entire software development lifecycle, I can configure multi-agent workflows. This involves chaining specialized agents together to perform a sequence of tasks.

A typical pipeline I use involves a “Planner” agent to break down a feature request, a “Retrieval” agent to gather relevant code from the repository, a “Generation” agent to write the new code, a “Testing” agent to run validation, and finally a “Deployment” agent to initiate the CI/CD process. This orchestrates a full development cycle with minimal human intervention.

The SLM Family for Efficient Operations

In addition to its flagship models, OpenAI provides a range of smaller, cost-efficient models (often called small language models, or SLMs), such as GPT-5 mini and GPT-5 nano. These are often distilled or fine-tuned variants designed for speed, scale, and lower operational cost.

I use these for lightweight, low-latency, and low-cost use cases where the full power of GPT-5 is not required. For example, I deploy SLMs for tasks like routing incoming support tickets, classifying user feedback, or powering simple, high-volume chatbots. Their efficiency makes them ideal for production tasks that require speed and cost-effectiveness.
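
The routing decision itself can be very simple. A minimal sketch, assuming a fixed set of narrow task labels and a prompt-length cutoff; the model identifiers, `SIMPLE_TASKS`, and the 4,000-character threshold are my own placeholders, so check the model names available to your account and tune the cutoff on real traffic.

```python
# Model identifiers are assumptions for illustration; check the models available to you.
SMALL_MODEL = "gpt-5-nano"
FLAGSHIP_MODEL = "gpt-5.2"

# Hypothetical task labels that are narrow enough for a small model.
SIMPLE_TASKS = {"route_ticket", "classify_feedback", "faq_reply"}


def pick_model(task: str, prompt: str, max_small_chars: int = 4_000) -> str:
    """Send short, narrow tasks to the small tier; everything else goes to the flagship."""
    if task in SIMPLE_TASKS and len(prompt) <= max_small_chars:
        return SMALL_MODEL
    return FLAGSHIP_MODEL


print(pick_model("route_ticket", "Customer cannot reset password"))
print(pick_model("refactor_module", "Split billing.py into services"))
```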

Memory with task boundaries for long-running enterprise workflows

The memory feature has evolved to support long-running, concurrent enterprise workflows. It now uses task boundaries, which allows the AI to maintain separate, isolated memory contexts for different projects. This is super important for my work, as it ensures that the AI’s knowledge of my “Project A” codebase does not bleed into its suggestions for “Project B.”

This is not perfect yet, as subtle bleed-through of high-level concepts can occur, particularly when projects are closely related or share a common tech stack.

However, it’s largely effective, and this contextual integrity is a requirement for using an AI assistant across multiple, complex engineering projects simultaneously.
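
I don’t know how ChatGPT implements task boundaries internally, but a useful mental model is memory keyed by project, where recall for one project can never see another project’s facts. `ProjectMemory` below is a hypothetical sketch of that strict isolation; the real feature only approximates it, which is where the bleed-through described above comes from.

```python
from collections import defaultdict


class ProjectMemory:
    """Hypothetical project-scoped memory: facts are stored and recalled per project key only."""

    def __init__(self) -> None:
        self._facts: defaultdict[str, list[str]] = defaultdict(list)

    def remember(self, project: str, fact: str) -> None:
        self._facts[project].append(fact)

    def recall(self, project: str) -> list[str]:
        # .get avoids creating an empty entry for unknown projects
        return list(self._facts.get(project, []))

    def build_context(self, project: str, question: str) -> str:
        notes = "\n".join(f"- {fact}" for fact in self.recall(project))
        return f"Project: {project}\nKnown facts:\n{notes}\n\nQuestion: {question}"


memory = ProjectMemory()
memory.remember("project-a", "uses PostgreSQL")
memory.remember("project-b", "uses DynamoDB")
print(memory.build_context("project-b", "Which database should the new service use?"))
```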

Gemini vs ChatGPT Full Comparison

| Category | Gemini | ChatGPT |
|---|---|---|
| Use case | Best suited for large-scale context ingestion, multimodal analysis, and document-heavy workflows. Strong fit for teams working with long reports, design artifacts, or complex datasets that need to be reasoned over as a whole. | Best for multi-step task automation, creative writing, complex coding, and structured research. |
| Natural language understanding | Strong at maintaining coherence across very large inputs, especially when text is mixed with images, tables, or diagrams. It has a 1-million-token context window. | Great at instruction-following and reasoning depth. It reliably handles nuanced constraints, structured prompts, and complex task definitions that evolve over multiple steps. In my experience, its voice dictation is also more accurate. |
| Agent capabilities & integration | Provides agent-like functions primarily within Google Workspace, enabling task assistance across Docs, Sheets, and Gmail with limited external autonomy. | Supports explicit agent workflows with planning, tool execution, code running, and iterative reasoning. These capabilities enable complex, multi-step automation. In my own testing, this reduces manual oversight when building automation-heavy systems. |
| Pricing | Bundled with Google AI Pro (formerly Google One AI Premium, $19.99/mo) and integrated into Google Workspace business plans. An ecosystem-centric pricing model. | Tiered subscriptions: Free, Plus ($20/mo), Pro ($200/mo), and custom Enterprise plans, with features gated by tier. API access is billed per token. |
| Security | Security aligns with Google Cloud’s enterprise posture (VPC-SC, IAM). For large organizations, the real value is integration with existing identity, access control, and data governance frameworks already in place on GCP. | Enterprise plans focus on data isolation, configurable retention, and administrative visibility. For teams deploying AI across multiple tools and vendors, this flexibility matters more than brand-level compliance badges. |
| Number of users | Google Gemini has over 450 million monthly active users and about 35 million daily users, and continues to grow due to its Google Workspace integration. | As of late 2025, ChatGPT remains the largest AI chatbot by user base, with 700 million weekly active users and 190+ million daily users. |
| Latency (hands-on impressions) | In my testing, I experienced lower latency for multimodal tasks like image generation and editing, which I attribute to its architecture for processing visual data. | In my testing, GPT is slightly faster in pure text-based conversational exchanges, providing a more fluid feel for rapid back-and-forth dialogue. |
| Tool-calling accuracy | Leverages its large context window to perform deep research before executing a tool call, though it may require more precise prompting for consistent outputs. | Highly reliable in producing well-structured, accurate output (e.g., clean JSON) for complex, multi-step agentic workflows. |
| Operating philosophy & autonomy | Functions more like an ambient assistant that augments human decision-making. It enhances human workflows by adding context and insight, but typically stops short of full autonomy. In specialized environments like Google Antigravity, Gemini 3 can act more autonomously, planning and executing multi-step coding tasks, though it’s still a developer-focused preview feature. | Built around agentic execution. The model is designed to act, observe results, and adjust behavior with minimal human intervention. |
| Prompt determinism & reproducibility | Non-deterministic by nature. Offers temperature and seed parameters to reduce randomness, but reproducibility is best-effort rather than guaranteed. | Also non-deterministic. Provides temperature and seed parameters that make outputs more consistent for testing and production, though identical outputs are still not guaranteed. |
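
On tool calling, both platforms can constrain output to a JSON Schema (OpenAI calls this Structured Outputs; the Gemini API accepts a response schema), but I still validate arguments before executing anything. A minimal standard-library sketch; the ticket-style schema, field names, and allowed priorities are hypothetical.

```python
import json

# Hypothetical tool arguments for a "create ticket" tool.
TOOL_SCHEMA = {"title": str, "priority": str, "labels": list}
ALLOWED_PRIORITIES = {"low", "medium", "high"}


def validate_tool_args(raw: str) -> tuple[bool, str]:
    """Check model-produced tool arguments before the tool is ever executed."""
    try:
        args = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    if not isinstance(args, dict):
        return False, "arguments must be a JSON object"
    for key, expected_type in TOOL_SCHEMA.items():
        if key not in args:
            return False, f"missing field: {key}"
        if not isinstance(args[key], expected_type):
            return False, f"wrong type for {key}"
    extra = set(args) - set(TOOL_SCHEMA)
    if extra:
        return False, f"unexpected fields: {sorted(extra)}"
    if args["priority"] not in ALLOWED_PRIORITIES:
        return False, "priority out of range"
    return True, "ok"


print(validate_tool_args('{"title": "Disk full", "priority": "high", "labels": ["ops"]}'))
print(validate_tool_args('{"title": "Disk full", "labels": []}'))
```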

Gemini vs ChatGPT: Which Should You Choose for AI decision-makers?

| Decision Factor | Choose Gemini if… | Choose ChatGPT if… |
|---|---|---|
| Best for Productivity | Your organization is heavily invested in the Google Workspace ecosystem. Its native integrations create a seamless, low-friction workflow. | Your team uses a diverse, platform-agnostic tech stack. Its strength is in connecting and automating tasks across various third-party applications. |
| Best for Dev & Engineering | Your primary need is large-scale code analysis and understanding monolithic codebases, leveraging its massive context window for deep inspection. | Your focus is on the active development lifecycle. Its Codex agent and multi-agent workflows excel at writing, testing, and deploying code. |
| Best for Multimodal Reasoning | You require fast, real-time multimodal interaction, such as generating or editing images, or analyzing charts and data simultaneously. | You need high-fidelity, cinematic video generation for presentations or marketing, leveraging Sora 2’s specialized capabilities. |
| Best for Long-Context Workflows | Your workflows depend on processing and maintaining coherence across extremely large documents, such as legal contracts, research papers, or books. | Your workflows require deep conversational memory and ongoing personalization. |
| Best for Automation | You want to automate tasks within the Google ecosystem, like orchestrating workflows between Sheets, Docs, and Gmail. | You need to build end-to-end automations that interact with the open web and external applications using its explicit agent mode. |
| Best for Cost-Sensitive Organizations | You are already a Google Workspace customer, as its cost is bundled into existing enterprise plans, providing predictable expenses. | You are building custom applications and need granular cost control at the API level, using smaller, efficient SLMs for high-volume tasks. |
| Best for Data Governance | Your company’s security posture is already built on Google Cloud’s infrastructure, allowing you to extend existing IAM and VPC controls. | You require specific compliance certifications like SOC 2 Type 2 and need fine-grained control over data retention policies for enterprise data. |
| Best for Scaling AI Apps | You are scaling within the Google Cloud Platform (GCP) and can leverage its integrated infrastructure for predictable performance. | You are building for a diverse market and can leverage its mature developer ecosystem, extensive APIs, and varied model family (including SLMs). |
| Reduces Human Overhead Faster | Gemini reduces overhead for research and synthesis tasks, acting as a powerful analyst for large datasets and documents. | ChatGPT reduces overhead for entire operational workflows, acting as an autonomous executor of complex, multi-step processes. |
| Vendor Lock-in vs. Deployment Speed | You accept a higher risk of ecosystem lock-in in exchange for extremely fast deployment and deep integration within Google Workspace. | You prioritize platform independence and lower lock-in risk, accepting that custom, integrated solutions may require more initial setup. |

GPT vs Gemini: Speed, Latency and Benchmark Performance

In my experience, for casual or office use (typing questions in a chat interface), both ChatGPT 5.2 and Google Gemini 3 feel very fast, and a one-second difference is hardly noticeable. In latency-sensitive environments like customer-facing apps or real-time assistants, however, Gemini 3 Flash’s ultra-low latency is a clear advantage. ChatGPT 5.2 is certainly not slow, and its speed keeps improving, but Google has prioritized handling massive scale and real-time interaction efficiently.

ChatGPT 5.2 has two modes in the chat interface: Instant for quick replies and Thinking for more complex reasoning. Instant mode typically starts delivering answers within 2–5 seconds, streaming tokens smoothly at roughly 30–50 tokens per second. Thinking mode has a slight pause for complex queries, but I noticed this usually improves accuracy. On the API side, ChatGPT can stream responses token by token and scale for enterprise workloads, though network conditions and location can affect latency.

Google Gemini 3 is engineered for speed, with the Flash variant designed to start responding almost instantly. Time-to-first-token is extremely low, making it ideal for applications where every millisecond counts, like voice assistants or live chatbots. Even for long outputs, streaming is fast and smooth, and Google’s cloud infrastructure allows many concurrent requests with minimal latency.

In practice, I’ve noticed that Gemini often feels “instantaneous,” whereas ChatGPT feels “very fast,” and the difference only matters in latency-critical scenarios. Both models support streaming responses through their APIs. On cost, Gemini’s Flash tier is priced below its Pro tier, so routing latency-sensitive traffic to Flash can also lower the cost per query.
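
The 2–5 second and 30–50 tokens/sec figures are my own observations, so it’s worth measuring against your own traffic. A minimal sketch, assuming `stream` is any iterator of text chunks from an SDK’s streaming call; it counts chunks rather than tokens, so throughput is approximate, and the injectable `clock` exists so the function can be tested with fake timings.

```python
import time
from typing import Callable, Iterable


def measure_stream(stream: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Time-to-first-chunk and chunks/sec after the first chunk for one streamed response."""
    start = clock()
    first = None
    count = 0
    for _chunk in stream:
        now = clock()
        if first is None:
            first = now - start  # time to first token (approximated by first chunk)
        count += 1
    total = clock() - start
    generation_time = total - (first or 0.0)
    rate = (count - 1) / generation_time if count > 1 and generation_time > 0 else 0.0
    return {"ttft_s": first, "chunks": count, "chunks_per_s": rate}


# Fake clock readings: start, three chunk arrivals, then the end of the stream.
fake_times = iter([0.0, 1.5, 1.55, 1.6, 1.6])
print(measure_stream(["Hel", "lo", "!"], clock=lambda: next(fake_times)))
```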

Here’s a summary table:

| Aspect | ChatGPT 5.2 (Instant Mode) | Google Gemini 3 (Flash Mode) |
|---|---|---|
| Latency (short queries) | 2–5 seconds | <2 seconds, often near-instant |
| Streaming output | Yes, token-by-token | Yes, extremely rapid token streaming |
| Throughput | High (~30–50 tokens/sec) | Very high, designed for large-scale parallel requests |
| Optimized modes | Instant (speed), Thinking (depth) | Flash (speed), Pro (slightly slower, still fast) |
| Use case fit | Interactive chat, small delays acceptable | Real-time apps (voice, live chat), minimal lag |
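
Averages hide the slow requests users actually notice, which is why latency comparisons usually look at the 95th percentile (p95). Here is a minimal nearest-rank percentile over latency samples you collect yourself; the sample values are made up to show how p95 and p99 surface outliers that the median hides.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # multiply first to limit float rounding
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in seconds from 20 requests.
latencies_s = [0.5] * 10 + [0.9] * 8 + [2.5, 4.0]
for p in (50, 95, 99):
    print(f"p{p}: {percentile(latencies_s, p)} s")
```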

Benchmark Performance for Gemini vs ChatGPT

When it comes to benchmarks, both models are state-of-the-art, with strengths in different areas:

- Knowledge & Reasoning: ChatGPT 5.2 generally leads slightly on MMLU, HumanEval, and advanced reasoning benchmarks like ARC-AGI, reflecting strong logical and coding abilities. (Source)

- Multimodal Performance: Gemini 3 excels in tasks combining text, images, and video, with high scores on Video-MMMU and related tests. This fits its multimodal design focus. (Source)

- Coding: ChatGPT 5.2 maintains an edge in pure text-based coding benchmarks (HumanEval, IOI, Terminal-bench, SWE-bench, and even Vibe Code Bench), while Gemini performs very well in visual coding tasks or when integrating with Google ecosystem data. (Source)

- Advanced Math & Science: Both models achieve near-human or superhuman performance, with minor differences favoring ChatGPT in some reasoning-heavy math tasks. (Source)

In practical terms, I would recommend ChatGPT 5.2 for complex reasoning or code-heavy workflows where correctness is important. Gemini 3 becomes my go-to when projects involve multimodal content, rapid response requirements, or tight integration with Google services. For most day-to-day use, either model is exceptionally capable and far surpasses previous generations.

FAQs about Gemini vs ChatGPT

What is the main difference between Gemini and ChatGPT?

The primary difference is architectural. Gemini is designed for massive, multimodal data ingestion and deep integration within the Google Workspace ecosystem, leveraging Google Search for real-time, grounded information. ChatGPT is built for autonomous agentic capabilities and developer extensibility, excelling at complex conversational workflows, creative generation, and specialized software engineering tasks via its Codex models.

Is Gemini better than ChatGPT?

Neither is universally superior; the better choice is determined by the technical requirements of the task. For workflows deeply embedded in the Google ecosystem that require real-time, multimodal reasoning (e.g., analyzing data in Sheets and Docs simultaneously), Gemini is the more integrated solution. For tasks requiring creative flexibility, platform-agnostic automation, and access to a mature ecosystem of custom tools (GPTs), ChatGPT is generally the more powerful option.

Which AI is better than ChatGPT and Gemini?

No single AI model is universally “better.” The best choice depends on the specific use case. Several models exhibit strong performance in specialized domains:

- Claude: Often excels at processing and summarizing extremely long documents and at complex, nuanced creative writing.

- Perplexity: A conversational answer engine that excels at research tasks where direct, verifiable source citations are necessary.

- Microsoft Copilot: The best choice for users deeply embedded in the Microsoft 365 ecosystem, offering strong integration with tools like Teams, Outlook, and Office.

How many users do Gemini and ChatGPT have?

Going into 2026, Google Gemini has over 450 million monthly active users and 35 million daily users. ChatGPT has over 700 million weekly active users, 190+ million daily users, and over 5.7 billion monthly visits, making it the largest AI chatbot by user base.