Have you ever wondered what it would feel like to build a full-stack app with AI by simply typing out an idea?

Not too long ago, this sounded unrealistic. Today, it feels surprisingly close. We’re no longer talking about basic AI tools that just autocomplete code. We’re now seeing autonomous AI systems that can spin up infrastructure, design databases, and build real interfaces, almost like having a silent engineering team working for you in the background.

But let’s be honest for a moment:

- Can you really create a full-stack app with AI using just a single prompt, or is the industry overselling the dream?

In this blog, we’ll look at how AI actually builds software, why building a full-stack app with AI still needs strong human direction, and what this shift really means for developers.

- Myth vs. Reality: Is It Possible to Build an App with “One Prompt”?

- How Does AI Actually Build a Full-Stack App?

- Why Does AI-Generated Code Still Require Human Governance?

- Myth vs. Reality: Will AI Replace the Full-Stack Developer?

- FAQs

Myth vs. Reality: Is It Possible to Build an App with “One Prompt”?

Let’s be honest: the idea is lovely. You type something like, “Build me a task management app,” hit Enter, and within seconds, you have a flawless, production-ready full-stack app, often marketed as a ready-made “full-stack AI app.” That’s the myth.

The reality is very different. Real software isn’t just code on the screen. It is hundreds of small decisions acting behind the scenes: how data is stored, how users are authenticated, how errors are handled, how security is enforced, and how the system behaves under real-world load.

Yes, AI can generate a working prototype from a single prompt. It’s great for demos and quick experiments, and honestly, it feels magical the first time you see it. But those “one-prompt apps” usually skip over the hard parts:

- Proper security controls

- Clean data relationships

- Scalable architecture

- Thoughtful user experience

In real life, apps aren’t built in one shot: they’re built in iterations. You guide the idea, AI speeds up the work, and both evolve the product together through constant feedback.

So, is a one-prompt app possible?

You can get a quick demo. But if you want something stable, secure, and production-ready, it still takes real thinking, not just a single prompt.

How Does AI Actually Build a Full-Stack App?

Despite how it looks on social media, AI does not magically build a full-stack app from a single prompt; it is not a “type once and deploy” experience. What really happens is much more practical and, honestly, much more interesting.

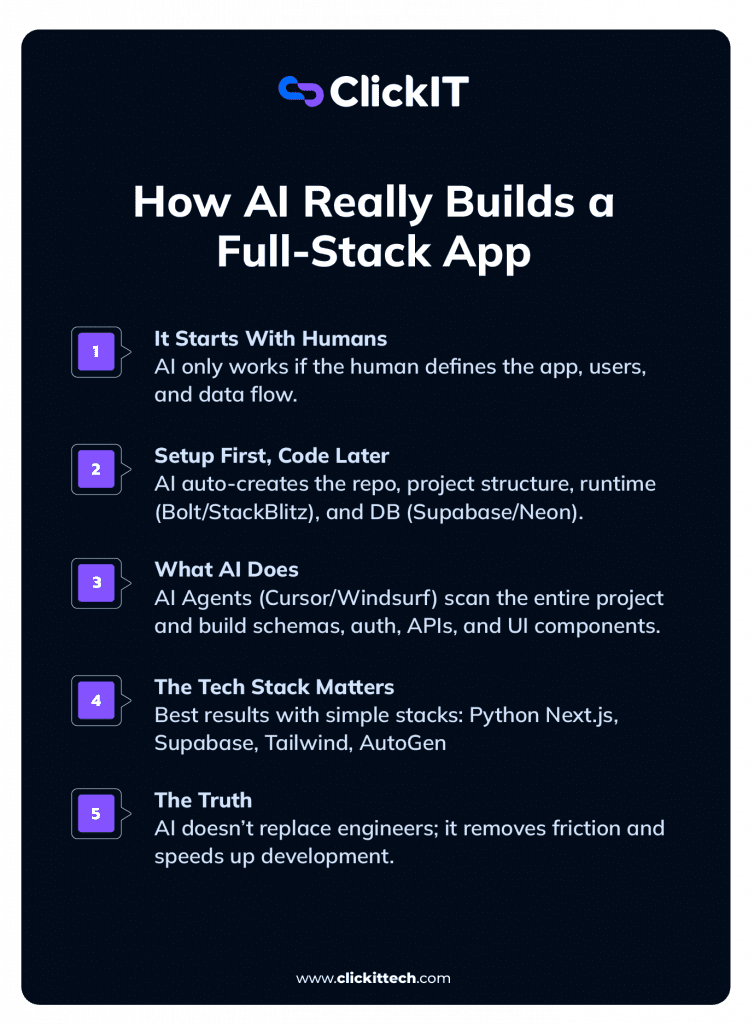

It always begins with humans. Before AI writes meaningful code, a human has to think through the basics:

- What is this application supposed to do?

- Who will use it?

- How will data flow through the system?

- What can go wrong?

The real foundation is that mental model. If that’s missing, then AI just produces bits of code that are disconnected and don’t hold up in real-world use.

In reality, building a full-stack app with AI still depends heavily on how clearly the human frames the system. Without solid planning, the output looks impressive at first but fails under real-world usage.

The Setup Comes Before the Code

Modern AI tools don’t jump into writing business logic straight away; they prepare the environment first. This step usually involves:

- Creating a new code repository

- Setting up the project’s structure

- Launching a browser-based runtime with tools such as Bolt.new or StackBlitz WebContainers

- Setting up a database using services like Supabase or Neon

- Applying some basic CI/CD configuration

These tools no longer require a developer to set up servers explicitly. Instead, they create an interactive development environment in the browser almost instantly, so you can watch the app come to life in real time as the AI writes code.

This part feels invisible, but it is also the most important. Once the foundation is clean, everything built on top of it becomes easier to reason about.

Where AI Really Shines

Agent-Based Execution: This is the part that really feels like magic.

Modern AI-powered IDEs, like Cursor (Composer mode) and Windsurf (Cascade Flow), don’t just paste code from a chat window. They work as autonomous agents that scan the whole codebase and understand the structure of the project, often using technologies like the Model Context Protocol (MCP) to pull in external documentation and tools, all before they’ve written a single line of code.

These agents can plan multi-step work, run tasks in parallel, and even validate their own output before moving to the next step. With this full-project context, AI agents can handle tasks such as:

- Designing database schemas directly from plain-English descriptions

- Adding access control rules like Row Level Security (RLS)

- Building complete authentication flows: login, signup, and middleware

- Creating backend APIs with proper data validation

- Handling edge cases and error states

- Generating clean, re-usable UI components using Tailwind and shadcn/ui
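To make the “proper data validation” item above a little more concrete, here is a minimal sketch in plain Python of the kind of check a generated endpoint should run before anything reaches the database. The `TaskInput` type, the field names, and the limits are all hypothetical and chosen only for this example; a real project would more likely rely on a dedicated validation library.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical limits for this sketch; real values depend on the product.
MAX_TITLE_LENGTH = 120
ALLOWED_PRIORITIES = {"low", "medium", "high"}


@dataclass(frozen=True)
class TaskInput:
    """A validated request body for a hypothetical 'create task' endpoint."""
    title: str
    priority: str


def validate_task_payload(payload: dict) -> tuple[TaskInput | None, list[str]]:
    """Return (task, errors). `task` is None whenever `errors` is non-empty."""
    errors: list[str] = []

    title = payload.get("title")
    if not isinstance(title, str) or not title.strip():
        errors.append("title must be a non-empty string")
    elif len(title.strip()) > MAX_TITLE_LENGTH:
        errors.append(f"title must be at most {MAX_TITLE_LENGTH} characters")

    # Priority is optional and falls back to "medium" when it is missing.
    priority = payload.get("priority", "medium")
    if not isinstance(priority, str) or priority not in ALLOWED_PRIORITIES:
        errors.append("priority must be one of: high, low, medium")

    if errors:
        return None, errors
    return TaskInput(title=title.strip(), priority=priority), []
```

Returning a list of errors instead of raising on the first problem is a design choice for this sketch: it lets the API report every invalid field in a single response, which is also easier for a human reviewer to test.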

This is where full-stack AI applications become truly practical, and where AI development stops feeling like a demo and starts feeling like a real productivity boost.

So, instead of fighting with boilerplate, developers can focus on intent and architecture while the AI takes care of the repetitive, mechanical work.

Why some tech stacks work better with AI

Not all technology stacks work well with AI, and that is perfectly fine. Choosing the right stack is essential when building a full-stack app with AI, since structured tools allow the AI to behave in a more predictable manner.

Generally speaking, AI performs significantly better on simple, opinionated stacks with strong conventions. That is why frameworks like Next.js or SvelteKit, database services like Supabase or Neon, and styling tools such as Tailwind and shadcn/ui usually work well. They reduce the noise, limit configuration overhead, and give AI clear patterns to follow.

In truly AI-driven applications, the backend is often divided according to its responsibilities. While Node.js handles the web layer, AI orchestration, such as running retrieval-augmented generation (RAG), calling models, and handling embeddings, is often delegated to a Python service built with a framework like FastAPI. This division makes the system more reliable and easier for AI tools to reason about.
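As a rough idea of what the Python side of that split does, here is a minimal sketch of the retrieval step in RAG: ranking stored documents by cosine similarity to a query embedding. The document ids and the three-dimensional vectors are made up for illustration; in a real system the embeddings would come from an embedding model, and the function names here are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec: list[float], documents: list[tuple[str, list[float]]], top_k: int = 2) -> list[str]:
    """Return the ids of the `top_k` documents most similar to the query, best match first."""
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, doc[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:top_k]]


# Toy "embeddings", written by hand for illustration only.
DOCS = [
    ("billing-faq", [0.9, 0.1, 0.0]),
    ("auth-guide", [0.1, 0.9, 0.1]),
    ("release-notes", [0.0, 0.2, 0.9]),
]
```

Calling `retrieve([0.2, 0.8, 0.0], DOCS)` ranks `auth-guide` first, and the text of the selected documents would then be passed to the model as context alongside the user’s question.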

On the frontend and streaming side, tools like the Vercel AI SDK have become a common choice for handling real-time token streaming from server to client, without forcing the developer to manually manage low-level streaming logic.

When there’s less boilerplate and more structure, a few good things naturally happen:

- AI output is cleaner and more consistent

- Code becomes easier for developers to review and extend

- Teams move faster and iterate with more confidence

In short, the more straightforward and organized the stack, the better AI performs.

The Honest Truth

AI doesn’t replace engineers. It removes friction. It accelerates the parts that used to slow us down and gives developers more time to think, design, and make better decisions. Still, the human is responsible for the system, risks, and long-term outcomes.

That’s how AI really builds full-stack apps today: not by replacing people, but by helping them build faster and smarter.

Why Does AI-Generated Code Still Require Human Governance?

While AI can write code incredibly fast, it doesn’t really understand what your product, users, and business need. It works from patterns, not real-world responsibility. That is why human oversight is not optional: it’s critical. Without it, teams risk shipping software that looks fine on the surface but breaks in the real world.

The Productivity Paradox

AI is supposed to save time, and often does. But there’s a hidden cost. A common experience for today’s developer is this:

- The code “almost works.”

- It compiles. It runs. It looks right.

But something doesn’t feel quite right – a missed edge case, a silent failure, or a subtle performance problem.

The 2025 Stack Overflow Developer Survey backs this up.

- 66% of developers said their biggest frustration with AI is that “solutions are almost right, but not quite”, and 45% said debugging AI-generated code is more time-consuming.

- Trust is shaky, too: only 3.1% “highly trust” AI-generated code, while 46% actively distrust its accuracy.

So, while developers spend less time typing, they often spend more time reviewing and fixing AI-generated code. That’s the paradox: development feels faster, but trust drops unless experienced humans stay in control.

Code Review Alone Is Not Enough

Traditional governance relies heavily on code reviews and tests. For AI-driven systems, that is no longer sufficient.

Modern AI applications also require LLM observability — the ability to trace what the model saw, what it returned, and how long it took to respond. Without this, teams are blind to some of the most critical failure modes.

Tools like LangSmith and Arize Phoenix make this possible by providing:

- Tracing of model inputs and outputs

- Latency and performance monitoring

- Error and hallucination tracking

- Real-time visibility into how AI logic behaves in production

This kind of visibility is essential because AI-generated code often omits proper instrumentation by default.
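The core idea behind this kind of tracing can be sketched in a few lines of plain Python: wrap every model call so that its input, output, error, and latency are recorded. This is not the LangSmith or Arize Phoenix API; `traced`, `TRACE_LOG`, and `fake_model` are hypothetical names used only for this illustration, and the in-memory list stands in for a real tracing backend.

```python
import functools
import time

# In-memory trace store for this sketch; a real setup would export to a tracing backend.
TRACE_LOG = []


def traced(fn):
    """Record the prompt, output, error, and latency of every call to `fn`."""
    @functools.wraps(fn)
    def wrapper(prompt: str) -> str:
        record = {"name": fn.__name__, "input": prompt, "output": None, "error": None}
        start = time.perf_counter()
        try:
            record["output"] = fn(prompt)
            return record["output"]
        except Exception as exc:
            # Keep the failure in the trace, then let the caller handle it.
            record["error"] = repr(exc)
            raise
        finally:
            record["latency_ms"] = (time.perf_counter() - start) * 1000
            TRACE_LOG.append(record)
    return wrapper


@traced
def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; it only echoes the prompt in upper case."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()
```

Because the record is appended in the `finally` block, failed calls are traced as well as successful ones, which matters since silent failures are exactly what reviews and happy-path tests tend to miss.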

Technical and Security Risks

AI does not think like an attacker; it does not understand real-world threat models. It simply tries to make things functional.

That’s why AI-generated code can quietly introduce risks like:

- Exposed API keys and secrets

- Weak authentication logic

- Missing validation for user inputs

- Insecure database access rules

- Poor logging and error handling

That doesn’t mean AI is bad at coding; it just means it doesn’t understand risk like humans do. Humans have to be the last line of defense in security.
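As one small example of a human-defined safety net, the first risk on the list above (exposed API keys and secrets) can be partly caught by an automated check before code is merged. The sketch below is a deliberately simple illustration: the two patterns are assumptions chosen for this example and are far from exhaustive, so a real pipeline would use a dedicated secret scanner instead.

```python
import re

# Illustrative patterns only; assumptions for this sketch, not a complete rule set.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded credential": re.compile(
        r"(?i)(api[_-]?key|secret|password|token)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, finding) pairs for lines that look like they contain a secret."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, label))
    return findings
```

A line such as `API_KEY = "sk_live_1234567890"` is flagged, while `key = os.environ["API_KEY"]` is not, because the value is read from the environment rather than written into the source.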

Organizational and Regulatory Governance

Code doesn’t live in isolation. It lives inside organizations with legal, regulatory, and compliance responsibilities.

AI cannot:

- Take responsibility during audits

- Understand legal data handling requirements

- Decide how long data should be retained

- Manage compliance with frameworks like GDPR or SOC 2

Only humans and strong governance processes can do that. AI can assist, but it cannot be accountable.

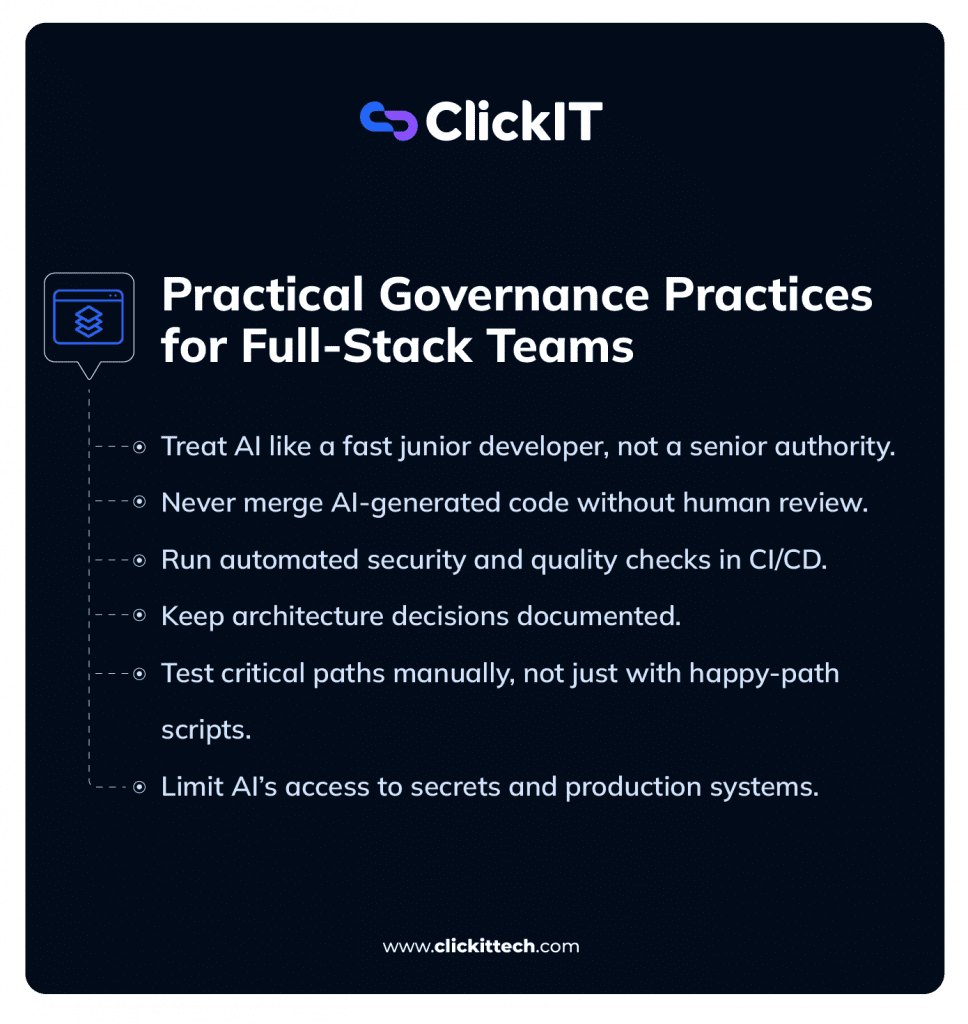

Practical Governance Practices for Full-Stack Teams

The best teams don’t reject AI: they use it carefully. Some practical habits that work well in real teams:

- Treat AI like a fast junior developer, not a senior authority

- Never merge AI-generated code without human review

- Run automated security and quality checks in CI/CD

- Keep architecture decisions documented

- Test critical paths manually, not just with happy-path scripts

- Limit AI’s access to secrets and production systems

Used wisely, AI becomes a powerful multiplier. Used blindly, it becomes a silent risk.

The difference is human governance.

Myth vs. Reality: Will AI Replace the Full-Stack Developer?

This is probably the biggest question in every developer’s mind right now:

- Will AI take my job?

The myth says: Yes. Fully. Completely. Soon.

The reality is far more practical and far more interesting.

AI is not replacing full-stack developers. It’s becoming a powerful accelerator and assistant, and it’s changing what being a “good” developer actually means.

Today, AI is incredibly good at handling repetitive work: generating boilerplate code, scaffolding projects, building CRUD APIs, and even creating basic UI components. Tasks that used to take hours can now be done in minutes.

But here’s what AI still can’t do well.

- It can’t deeply understand business context.

- It can’t negotiate trade-offs between performance, cost, and scalability.

- It can’t design systems with long-term thinking around reliability, concurrency, and distributed architecture.

- And it definitely can’t sit in a meeting and translate vague, messy human requirements into clean technical decisions.

That’s where full-stack developers become more valuable, not less.

The role is shifting from “code writer” to system thinker and strategic overseer. From “feature builder” to architect and decision-maker.

The highest-value skills going forward won’t be typing speed. They’ll be:

- Defining clean architectures

- Asking better questions

- Reviewing and governing AI-generated code

- Translating abstract product ideas into concrete technical execution

The future isn’t about humans versus AI. It’s about humans who understand how to build full-stack apps with AI tools and combine them with systems thinking to solve real problems faster.

In short:

AI won’t replace full-stack developers.

But full-stack developers who learn to work with AI will absolutely replace those who don’t.

Building a full-stack app with AI is no longer an idea for the far future; it is a reality today. But that reality is far more balanced than the hype.

AI isn’t a magic machine that turns one prompt into a flawless production system. What it does exceptionally well is accelerate the boring parts, reduce friction, and give developers more space to think, design, experiment, and innovate.

AI is changing development, but it doesn’t remove developers. Rather, the role shifts from writing every line of code to shaping the system, guiding decisions, reviewing the output of AI, and ensuring that the product is secure, reliable, and fits real-world needs.

The teams that will go faster in the future are those that neither ignore AI nor blindly trust it. They know how to work with AI, regard it as a powerful partner, and combine its speed with human judgment and creativity.

If there’s one takeaway, it’s this: AI will not replace full-stack developers. But full-stack developers who master AI will build things that were never possible before.

FAQs

With AI alone, a working prototype can be generated very fast, but not a fully polished production system. The AI still requires guidance from humans on architecture, security, edge cases, and long-term maintainability.

AI will not replace full-stack developers, but developers who know how to use AI effectively will replace those who do not. The skill set is shifting from typing code to directing, reviewing, and steering AI-generated outputs.

No. AI accelerates repetitive work, but developers are needed for validation, system design, architecture decisions, governance, and long-term ownership.