Resources on how to scale an application are abundant, but every application faces its own challenges in scaling successfully, and the solution is rarely ready-made. This paper focuses on the best approaches to building a scalable application, whether it is a modern web application, a SaaS application, a mobile app, or a WordPress, Drupal or Magento-based website. The approaches discussed cover both auto scaling and manual scaling.

In my experience, there are two types of applications: those with an admin CMS that modifies the code (WordPress, Joomla, Drupal, etc.), and modern, next-generation applications, which are stateless apps following the 12-factor approach. By apps with an admin or CMS, I mean all apps that require an administrator panel to modify the layout and functionality of the application (WordPress /wp-admin, page.com/administrator in Joomla, /admin in Drupal, Magento, etc.).

Taking a step back: to begin with, we have to decide whether to go with auto scaling or manual scaling. It is an important decision to make at the very beginning, as it changes the whole approach and the complexity of building your app. We will discuss both, with an emphasis on auto scaling. With auto scaling, you need to consider how to keep the code synchronized across the web nodes AND how to handle the new web nodes that are continuously being created and deleted in the Auto Scaling group, though with stateless apps this is not hard. As you may know, images and static content can go to Amazon S3, a CDN or isolated services; I will explain these technologies a bit later on. Manual scaling, on the other hand, makes your job easier: you just need to keep your code synced across all the web nodes. All of the approaches mentioned below also apply to manual scaling.

Moreover, auto scaling a CMS application is a “headache” for a common reason: every time a front-end developer changes something in the CMS, the code becomes inconsistent across the web nodes and with Git versioning. For this reason, I don’t recommend using Git for such applications, because there are always discrepancies between the last commit in Git and the last update in the admin CMS, which makes auto scaling with an admin panel even more complicated.

Let’s cover some approaches to solve this problem. Using a shared NFS file system resolves everything for a CMS app, but, as is well known, this approach drags down performance.

What’s Important for Scaling

- How to deploy code flawlessly: it is crucial to design a deployment workflow that is easy for your team, your manager and your developers. That is the goal here: to improve development productivity by defining a suitable mechanism to deploy code to your cluster or Auto Scaling group. Options include webhooks, an rsync cron job, bash scripts, Jenkins, CircleCI, DeployBot, manual pulls or AWS CodeDeploy.

- Isolate and centralize: all services need to be isolated and centralized. Databases, session caching, S3 for static content, a CDN, worker servers, cron jobs, the load balancer, the app server and the web server, to name a few.

- How to dynamically synchronize code in a Live application with multiple web nodes behind a Load Balancer or AWS AutoScaling Group.

- Use the 12-factor approach for next-generation applications, also called “stateless apps.” It’s a mindset and a way of life for developers building modern, highly scalable, resilient applications. You may already be using this approach without knowing it! See http://12factor.net/

- Bootstrap your application with a stateless approach; this will make all your work easier when you implement a scalable infrastructure. Bootstrapping is a term you need to get familiar with. There are many ways to bootstrap your base application: bash scripts, Dockerfiles, Vagrantfiles, AWS CodeDeploy, Git, AWS user data, baked AWS AMIs, among others.

- Immutable servers in your environment: make sure your application and server are in a pre-configured image state. Every time a new server/instance is created by an Auto Scaling mechanism, it should be an identical clone of every other instance in production, with no further configuration. As you know, AWS, Docker, Google Cloud and other cloud-based providers make immutability much easier. This helps you deliver software faster and decrease time to market.

- For CMS-based apps, stay out of Git for now, unless you have excellent change control over your CMS admin and the discipline to push your changes with every dashboard/backend change in the CMS. You think that’s you? Alright, keep it.

- Finally, you need a load balancer for auto scaling, e.g. AWS ELB, Google Cloud Load Balancing, Nginx or HAProxy.
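As an illustration of the rsync cron job or bash-script deployment option above, here is a minimal Python sketch that builds one rsync command per web node and optionally runs it. The node IPs, the `deploy` SSH user and the `/var/www/app/` paths are hypothetical placeholders; in an Auto Scaling group, the node list would come from your discovery mechanism rather than a hard-coded list.

```python
import shlex
import subprocess
from typing import List

# Hypothetical defaults for this sketch; adjust them to your own layout.
SOURCE_DIR = "/var/www/app/"  # trailing slash: sync the directory's contents
TARGET_DIR = "/var/www/app/"
SSH_USER = "deploy"


def rsync_command(host: str, source: str = SOURCE_DIR, target: str = TARGET_DIR,
                  user: str = SSH_USER, dry_run: bool = False) -> List[str]:
    """Build the argv that pushes the code base to one web node over SSH."""
    cmd = ["rsync", "-az", "--delete"]  # archive mode, compress, remove stale files
    if dry_run:
        cmd.append("--dry-run")  # report what would change without copying
    cmd += ["-e", "ssh", source, f"{user}@{host}:{target}"]
    return cmd


def deploy(hosts: List[str], execute: bool = False, dry_run: bool = False) -> List[List[str]]:
    """Print (and optionally run) one rsync command per node."""
    commands = [rsync_command(h, dry_run=dry_run) for h in hosts]
    for cmd in commands:
        print(shlex.join(cmd))
        if execute:
            subprocess.run(cmd, check=True)  # stop at the first node that fails
    return commands
```

A cron entry or a CI job (Jenkins, CircleCI) could call `deploy(nodes, execute=True)` after each release. `--delete` keeps the nodes identical, but it also removes files that exist only on a node, so user uploads should live outside the synced path.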

Recommended Practices for Code Storage

Understanding how visitors dynamically change your application’s data is crucial. It’s imperative to understand how sessions are saved, either locally or in Redis/Memcached. If they are saved locally, you need to add this data to your synchronization mechanism.

Another consideration is user image uploads in CMS-based apps like WordPress or Magento. Plug-ins like WP Super Cache or W3 Total Cache make the image upload configuration easy, and the option to integrate with a CDN, Amazon S3 or cloud storage pushes the boundaries even further. But if you have a modern application, you need to understand, and guide your development team on, how to change the local image upload path to cloud storage or a CDN. Make sure all your dynamic data is saved in a SQL or NoSQL database, and all cached database data (DB queries, objects, sessions, keys, etc.) is saved to Redis/Memcached; taking care of these factors will make your scaling journey easier.
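Following the 12-factor idea of treating backing services as attached resources, one way to keep nodes stateless is to read the session store and the media base URL from the environment, so every node points at the same Redis/Memcached instance and the same cloud storage. This is a minimal sketch; the variable names `SESSION_STORE_URL` and `MEDIA_BASE_URL`, the accepted URL schemes and the example hosts are hypothetical, not the convention of any specific framework.

```python
import os
from dataclasses import dataclass
from typing import Mapping
from urllib.parse import quote, urlparse

# Accepted schemes are an assumption of this sketch.
SHARED_SESSION_SCHEMES = ("redis", "rediss", "memcached")


@dataclass(frozen=True)
class SharedState:
    session_store_url: str  # Redis/Memcached endpoint shared by all web nodes
    media_base_url: str     # S3 bucket or CDN base URL for user uploads


def load_shared_state(env: Mapping[str, str] = os.environ) -> SharedState:
    """Read backing-service locations from the environment (hypothetical names)."""
    session_url = env.get("SESSION_STORE_URL", "")
    media_url = env.get("MEDIA_BASE_URL", "")
    if urlparse(session_url).scheme not in SHARED_SESSION_SCHEMES:
        # Local session files break as soon as a second node serves traffic.
        raise ValueError("SESSION_STORE_URL must point to a shared Redis/Memcached")
    if not media_url.startswith("https://"):
        raise ValueError("MEDIA_BASE_URL must be an https:// storage or CDN URL")
    return SharedState(session_url, media_url.rstrip("/"))


def upload_url(state: SharedState, relative_path: str) -> str:
    """Map an uploaded file to its public URL on shared storage, not local disk."""
    return f"{state.media_base_url}/{quote(relative_path.lstrip('/'))}"
```

Failing fast at startup, rather than silently falling back to local disk, means a misconfigured node never joins the pool with its own private sessions or uploads.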

Why NOT use NFS?

We hear a lot around the internet that the easiest way to achieve scalability is to integrate NFS. It is the simplest fix to find and implement, but it comes at a real cost in application performance. Perhaps it reduces the DevOps work by almost 50%, but it is very frustrating for a client whose application responds in 2 to 3 seconds to move to a scalable environment with NFS and see it slow down by at least 50%.

It’s very disappointing when a single small EC2 instance is much faster than your auto-scaled environment with NFS. Additionally, NFS is a single point of failure unless you have a failover, which is not very common in the cloud lately. Why? It is just not worth having a complete standby environment waiting for a failure; it is better to use that environment within your cluster, so you have more resources to absorb your traffic, or, even better, to use this redundant environment to balance between different regions or data centers.

Why not use Amazon S3 to share code?

So, what about using Amazon S3, Google Cloud Storage or any cloud storage to share code? Using Amazon S3 to share code between nodes will slow down your site much like NFS. There’s not much difference between accessing the S3 URL and mounting S3 as a Linux directory, so don’t use Amazon S3 as the document root or code base folder. Limit S3 to static content that needs to be fetched over time. Granted, some websites survive and work decently using Amazon S3 for static web pages, but in my experience, I don’t recommend it for websites backing an application that drives revenue for the company.

Auto Scaling for Next-Generation Applications

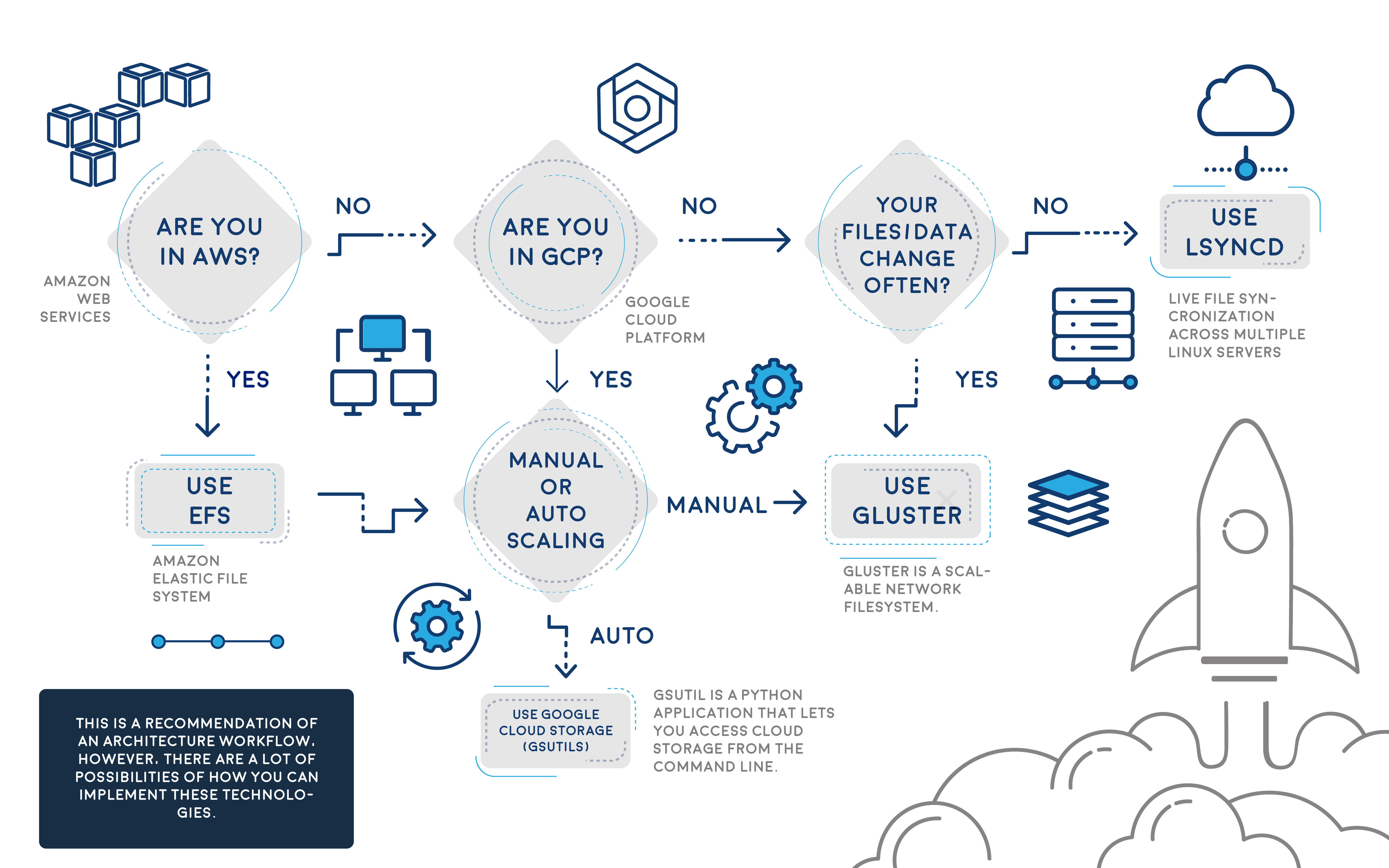

There are a few good cloud hosting providers: AWS, Google Cloud Platform (GCP) and Microsoft Azure. If you are going to auto scale, you need to be on one of these cloud hosting services. There are other cloud providers I know of that follow a similar approach, but we will not cover them, since they are not widely used or are PaaS ecosystems; these PaaS providers use the methods mentioned below behind the scenes.

Another consideration for auto scaling is that app nodes change constantly: they are created dynamically on new hosts with new IPs. Your synchronization mechanism therefore needs to handle updating the code on new nodes, which may later be changed or deleted.

Options to Auto Scale and not die trying

- Lsyncd: Given that you require auto scaling, Lsyncd lets you synchronize nodes across a cluster that is spinning instances up and down. To work with Lsyncd in an Auto Scaling group, use this approach: https://github.com/zynesis/lsyncd-aws-autoscaling. This script monitors the Auto Scaling group for new nodes and updates the Lsyncd master with the new auto-scaled slaves. Discovering which IPs or instances are in the Auto Scaling group is the main challenge auto scaling faces, and this script helps resolve that discovery. Deploying Lsyncd with manual scaling is very simple and works well.
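The discovery problem boils down to comparing the nodes Lsyncd already syncs to with the instances currently in the group, then regenerating the Lsyncd targets. The sketch below shows only that diff-and-render step; it is not the code of the lsyncd-aws-autoscaling project, and fetching the current IPs (for example from the AWS API) is left out. The paths are hypothetical; the `sync { default.rsyncssh, ... }` blocks follow Lsyncd’s Lua configuration syntax.

```python
import ipaddress
from typing import Iterable, Set, Tuple


def diff_nodes(known: Iterable[str], current: Iterable[str]) -> Tuple[Set[str], Set[str]]:
    """Compare two snapshots of the group and return (added, removed) IPs."""
    known_set, current_set = set(known), set(current)
    return current_set - known_set, known_set - current_set


def render_lsyncd_config(slaves: Iterable[str], source: str = "/var/www/app",
                         target: str = "/var/www/app") -> str:
    """Render one Lsyncd rsyncssh block per slave node, sorted by IP address."""
    blocks = []
    for ip in sorted(set(slaves), key=ipaddress.ip_address):
        blocks.append(
            "sync {\n"
            "    default.rsyncssh,\n"
            f'    source = "{source}",\n'
            f'    host = "{ip}",\n'
            f'    targetdir = "{target}",\n'
            "}\n"
        )
    return "\n".join(blocks)
```

When `diff_nodes` reports any change, the master would write the new configuration and restart Lsyncd; the master’s own IP should not be in the `slaves` list.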

- GlusterFS: Another approach is to use GlusterFS, which provides high availability and sync between your web nodes. You need a minimum of two instances: if one fails, the other takes over, so the cluster stays fault tolerant, and once the failed node is back it is added to the cluster again. GlusterFS works similarly to master/master or master/slave replication. I won’t cover how to configure GlusterFS; this approach was a great option before AWS EFS was released, and if you are not using AWS, it is still an awesome choice.

On the other hand, in AWS environments with EFS, consider registering your web node with the master node using AWS user data, so every time the ASG creates a new EC2 instance, it registers the new volume with the master and keeps data synced with the rest of the nodes.

Pre-Baked AWS AMIs (old approach)

If your code won’t change or barely changes, baking an AWS AMI and updating the Auto Scaling launch configuration will do the trick; it is an old but still widely used approach. When you have a defined code release schedule and development workflow, you can bake an AMI for every new code release and, once it is ready, recycle the nodes to use the new AMI with the latest code. There are plenty of tutorials about this method.
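Recycling the nodes after baking a new AMI is usually done in small batches so the group keeps serving traffic. The sketch below only plans that rollout: given the AMI each instance was launched from, it returns batches of the instances still running an old image. The instance and AMI IDs are made up, and actually terminating or replacing instances (through the AWS console or CLI) is outside the sketch.

```python
from typing import Dict, List


def plan_recycle(instances: Dict[str, str], new_ami: str,
                 batch_size: int = 1) -> List[List[str]]:
    """Group instances still on an old AMI into replacement batches.

    `instances` maps instance ID -> AMI ID the instance was launched from.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    stale = sorted(i for i, ami in instances.items() if ami != new_ami)
    return [stale[n:n + batch_size] for n in range(0, len(stale), batch_size)]
```

Keeping `batch_size` small relative to the group size leaves enough healthy nodes behind the load balancer while the replacements boot from the new image.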

Admin Server (mainly used for WordPress, Drupal, Magento and CMS based apps)

Another wild approach is to use an admin or master server outside your Auto Scaling group. It is commonly used to sync code from the master to the Auto Scaling group, but I believe it wastes server resources that could be used inside the Auto Scaling group. I would recommend this approach for WordPress or Magento sites; in addition, consider proxying the wp-admin or administrator URL to the admin server, or updating the CMS directly on the admin server. As with NFS, AWS EFS will make everything easier for WordPress, Magento, Joomla and other CMS-based apps. Here is what I’m talking about: http://harish11g.blogspot.mx/2012/01/scaling-wordpress-aws-amazon-ec2-high.html
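Proxying the admin URLs to the admin server is normally a load balancer or Nginx rule; the Python sketch below only illustrates the routing decision such a rule makes. The prefixes are the WordPress and Joomla-style admin paths mentioned above plus WordPress’s `/wp-login.php`, and the backend names are hypothetical.

```python
from itertools import cycle
from typing import Iterator, Sequence, Tuple

# Admin paths that must reach the single admin server (assumed list; extend per CMS).
ADMIN_PREFIXES: Tuple[str, ...] = ("/wp-admin", "/wp-login.php", "/administrator")


def is_admin_path(path: str) -> bool:
    """True if the path (query string already stripped) is or lies under an admin prefix."""
    return any(path == p or path.startswith(p + "/") for p in ADMIN_PREFIXES)


class AdminAwareRouter:
    """Send admin traffic to the admin server and everything else to the web pool."""

    def __init__(self, admin_backend: str, web_backends: Sequence[str]) -> None:
        if not web_backends:
            raise ValueError("need at least one web backend")
        self.admin_backend = admin_backend
        self._web: Iterator[str] = cycle(list(web_backends))  # simple round robin

    def choose(self, path: str) -> str:
        if is_admin_path(path):
            return self.admin_backend
        return next(self._web)
```

Because every CMS change lands on the admin server, that server becomes the single source that the sync mechanism copies to the Auto Scaling group.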

Implement AWS EFS

Finally, the easiest approach is AWS Elastic File System (EFS). Amazon Web Services released EFS a few months ago as a very promising technology: a distributed file system similar to NFS but improved; think of it as a sophisticated NAS solution. At AWS re:Invent, they stated that EFS is based on NFSv4.1 but with better network performance; it was also said at the time that it is a pNFS workaround. I had waited months for such a component in the AWS arsenal; early access was only available in Virginia, and now it is available in more AWS regions. Yay!

EFS can be mounted as a local mount point, similar to NFS, to share your code across your AWS Auto Scaling group, and you can add your EFS volume to /etc/fstab so it mounts automatically after each reboot. So if you are in AWS, please use EFS; it really makes everything easier. To accomplish this, don’t forget to add the EFS mount point to the user data of your EC2 instances, so every new instance automatically mounts the EFS volume.
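Here is a minimal sketch of generating that user data, assuming the file system is mounted over NFSv4.1 by its regional DNS name (`<file-system-id>.efs.<region>.amazonaws.com`) and that the NFS client (nfs-utils or nfs-common) is already in the image. The mount options are the ones AWS documents for NFS mounts of EFS; the file system ID, region and `/var/www` mount point are placeholders. The generated script appends the fstab entry so the volume survives reboots, then mounts it.

```python
import re

# Mount options AWS documents for NFSv4.1 mounts of EFS; _netdev waits for networking.
NFS_OPTIONS = ("nfsvers=4.1,rsize=1048576,wsize=1048576,hard,"
               "timeo=600,retrans=2,noresvport,_netdev")


def efs_fstab_line(fs_id: str, region: str, mount_point: str = "/var/www") -> str:
    """Build an /etc/fstab entry that mounts an EFS file system over NFSv4.1."""
    if not re.fullmatch(r"fs-[0-9a-f]+", fs_id):
        raise ValueError(f"unexpected EFS file system ID: {fs_id!r}")
    dns_name = f"{fs_id}.efs.{region}.amazonaws.com"
    return f"{dns_name}:/ {mount_point} nfs4 {NFS_OPTIONS} 0 0"


def efs_user_data(fs_id: str, region: str, mount_point: str = "/var/www") -> str:
    """Render a user-data shell script that persists and mounts the EFS volume."""
    line = efs_fstab_line(fs_id, region, mount_point)
    return "\n".join([
        "#!/bin/bash",
        "set -euo pipefail",
        f"mkdir -p {mount_point}",
        # Append only once, in case the script runs again on the same instance.
        f"grep -qF '{line}' /etc/fstab || echo '{line}' >> /etc/fstab",
        f"mount {mount_point}",
        "",
    ])
```

Since the region is part of the DNS name, the same template makes it easy to keep every instance pointing at an EFS file system in its own region.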

Note 1: All EFS mount points should be in the same region; if an EC2 instance tries to mount an EFS file system from another region, it won’t be allowed.

More Details: http://www.julianwraith.com/2016/07/aws-elastic-file-system/

Google Cloud Platform

What do we have for Google Cloud Platform? I suggest using GlusterFS, Lsyncd, rsync or Google Cloud Storage, which shares a lot of features with Amazon S3 but is faster. Google states that its Cloud Storage is faster than Amazon S3 thanks to its many edge points across the world, and in my experience they are correct. The easiest way to share code across your auto scaling group is to use Google Cloud Storage and gsutil.

Additionally, you can use gsutil to pull from the bucket on each Google instance; just make sure visitors/users do not change the code or static content on the instances. gsutil can also sync the bucket data to your instance data to keep them in sync. If your code changes dynamically with your traffic, you need to use GlusterFS or Lsyncd, although these are complicated to deploy, since Google autoscaling is always recycling instances/IPs and needs some workarounds.
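For the gsutil route, each instance could pull the code from a bucket at boot (for example from a startup script) and then periodically from cron. The sketch below only builds the `gsutil rsync` invocation and a crontab line: `-m` runs operations in parallel, `-r` recurses, `-d` deletes local files that are no longer in the bucket and `-x` excludes paths matching a regular expression. The bucket, paths and five-minute interval are hypothetical.

```python
import shlex
from typing import List, Optional


def gsutil_pull_command(bucket_path: str, local_dir: str,
                        exclude_regex: Optional[str] = None,
                        delete_extra: bool = True) -> List[str]:
    """Build a gsutil rsync command that mirrors a bucket prefix onto this instance."""
    if not bucket_path.startswith("gs://"):
        raise ValueError("bucket_path must be a gs:// URL")
    cmd = ["gsutil", "-m", "rsync", "-r"]
    if delete_extra:
        cmd.append("-d")  # remove local files that no longer exist in the bucket
    if exclude_regex:
        cmd += ["-x", exclude_regex]  # skip paths matching this regular expression
    cmd += [bucket_path, local_dir]
    return cmd


def cron_line(cmd: List[str], every_minutes: int = 5) -> str:
    """Render a crontab entry that repeats the pull every N minutes."""
    if not 1 <= every_minutes <= 59:
        raise ValueError("every_minutes must be between 1 and 59")
    return f"*/{every_minutes} * * * * {shlex.join(cmd)}"
```

With `-d` enabled, anything a visitor writes into the synced directory is removed on the next pull, which is why the bucket has to stay the only source of code and static content.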

Don’t Forget to Scale Databases!

Another topic we haven’t touched is scaling a database, whether AWS RDS, DynamoDB, PostgreSQL, MySQL or NoSQL databases. All these modern databases are easy to scale, and some of them are offered as a service (PaaS), such as DynamoDB or RDS. For self-managed PostgreSQL and MySQL, scaling is manual: you add new database nodes to the master/slave cluster. In AWS RDS, you can get a slave “replica” with a few clicks, and you are already scaling. Anyhow, I won’t go further, as it’s out of scope for this piece.
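Once a replica exists, the application still has to send reads to it. A common pattern, sketched below under the assumption that your app controls which connection each query uses, is to send writes to the master and spread reads across replicas in round robin. The host names are hypothetical, and classifying statements by their first keyword is a simplification: reads inside a transaction, or reads that must immediately see a previous write (replication lag), should still go to the master.

```python
from itertools import cycle
from typing import Iterator, Optional, Sequence

READ_KEYWORDS = ("SELECT", "SHOW")  # simplified: only these count as reads


class ReadWriteSplitter:
    """Route writes to the master and reads round robin across replicas."""

    def __init__(self, master: str, replicas: Sequence[str]) -> None:
        self.master = master
        # With no replicas yet, every query simply goes to the master.
        self._replicas: Optional[Iterator[str]] = cycle(list(replicas)) if replicas else None

    def route(self, sql: str, in_transaction: bool = False) -> str:
        words = sql.strip().split(None, 1)
        first_word = words[0].upper() if words else ""
        if in_transaction or first_word not in READ_KEYWORDS or self._replicas is None:
            return self.master
        return next(self._replicas)
```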

Scaling Microservices and Serverless Apps

These topics need another talk; another time we will explore this new architectural approach, in which splitting your application into microservices is changing the IT paradigm. To survive in this new paradigm, you first need to understand the approaches in this paper, and then evolve toward microservices with Docker, Consul, Compose, RancherOS, AWS ECS, Kubernetes and serverless architectures. My next post will be about scaling applications with containers.

Conclusion

As you can see, scaling an application can be challenging when you are dealing with a custom app or a CMS-based application. This article gives you the options, with their pros and cons, for tackling the constant predicament of how to scale custom apps.

Everybody uses their own scaling process, and seriously, it rarely fits other applications’ needs. Now that AWS EFS is out there, I’d recommend it whenever you can; if you are not using AWS, implement Lsyncd or GlusterFS, but don’t forget the other methods that can also help you accomplish your scaling goals.

We also have part 1 and part 2 of this blog series; we recommend reading them.